AI GPT (GPT stands for Generative Pre-trained Transformer) is a powerful type of language model that has transformed many applications in natural language processing. In this comprehensive guide, we will explore the fundamentals of AI GPT, its training process, fine-tuning techniques, challenges and limitations, and best practices for getting the best performance from it. By the end of this guide, you will have a solid understanding of AI GPT and how to unlock its full potential.

Key Takeaways

- AI GPT is a powerful language model that has transformed natural language processing tasks.

- The training process of AI GPT involves data collection and preprocessing, model architecture, and training techniques.

- Fine-tuning AI GPT allows for transfer learning, domain adaptation, and hyperparameter tuning.

- Challenges and limitations of AI GPT include ethical considerations, bias and fairness, and security and privacy concerns.

- Best practices for AI GPT include ensuring data quality and diversity, human oversight and control, and continuous improvement.

Understanding AI GPT

What is AI GPT?

AI GPT refers to GPT (Generative Pre-trained Transformer), a family of state-of-the-art language models developed by OpenAI. It is designed to generate human-like text based on the input it receives. AI GPT has been trained on a vast amount of text, much of it from the internet, which allows it to learn the patterns and structures of language. This enables it to generate coherent and contextually relevant responses to prompts or questions.

How does AI GPT work?

AI GPT is a language model that uses deep learning to generate human-like text. It is based on the Transformer architecture, specifically a stack of Transformer decoder blocks, which lets it model the context of words and sentences. During pre-training, AI GPT reads a large corpus of text, such as books, articles, and websites, and learns the statistical patterns and relationships between words by repeatedly predicting the next token in a sequence. This enables it to generate coherent and contextually relevant text from a given prompt or input.

One of the key components of AI GPT is its self-attention mechanism, which lets the model weigh different parts of the input when producing each output token. Attention helps AI GPT capture long-range dependencies and understand the context of the text. At every step, the model outputs a probability distribution over the next token, and a decoding strategy turns these distributions into text. Common strategies include greedy decoding (always pick the most likely token), sampling with a temperature or a top-k/top-p cutoff, and beam search, which keeps several candidate sequences and selects the highest-scoring one.
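To make the decoding step concrete, here is a minimal sketch that assumes a toy four-word vocabulary and made-up next-token scores (logits) instead of a real model's output. It contrasts greedy decoding with temperature-scaled top-k sampling; the function names are illustrative and not part of any library.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities; a lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def greedy(logits):
    """Greedy decoding: always choose the highest-scoring token."""
    return max(range(len(logits)), key=lambda i: logits[i])

def sample_top_k(logits, k=2, temperature=1.0, rng=random):
    """Sample only among the k most likely tokens, after temperature scaling."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    probs = softmax([logits[i] for i in top], temperature)
    return rng.choices(top, weights=probs, k=1)[0]

vocab = ["cat", "dog", "car", "tree"]
logits = [2.0, 1.5, 0.3, -1.0]  # hypothetical next-token scores

print(vocab[greedy(logits)])  # cat
rng = random.Random(0)
print(vocab[sample_top_k(logits, k=2, temperature=0.7, rng=rng)])  # cat or dog
```

Greedy decoding is deterministic, while sampling trades some predictability for more varied text; beam search is omitted here to keep the sketch short.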

To summarize, AI GPT combines deep learning, pre-training on a large corpus of text, and the Transformer architecture with self-attention, and it generates text token by token using a decoding strategy such as greedy decoding, sampling, or beam search.

Applications of AI GPT

AI GPT has a wide range of applications across various industries. It is particularly popular in marketing, where it is used for content generation and to support tasks such as customer segmentation and personalized recommendations. In healthcare, it is being explored to support medical research, drug discovery, and patient diagnosis. In finance, it is being applied to tasks related to fraud detection, risk assessment, and algorithmic trading. The versatility of AI GPT makes it a valuable tool in many other fields as well.

Training AI GPT

Data collection and preprocessing

Data collection and preprocessing are crucial steps in training AI GPT models. Data collection involves gathering a diverse and representative dataset that encompasses the target domain. This dataset should include a wide range of examples and cover various topics and contexts. Once the data is collected, it needs to be preprocessed to ensure its quality and suitability for training. Preprocessing may involve cleaning the data, removing duplicates, handling missing values, and normalizing the text.

It is useful to summarize the collected dataset with a few quantitative statistics, such as the number of documents, the average document length, and the number of document categories. A table is a convenient format for these figures, for example:

| Dataset Size (documents) | Average Document Length (words) | Document Categories |
|---|---|---|
| 10,000 | 500 | 5 |

It is important to note that the specific details of the table will depend on the dataset and the requirements of the AI GPT model being trained.
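The statistics in such a table can be computed directly from the corpus. This is a minimal sketch assuming each document is a dictionary with hypothetical `category` and `text` fields, and that length is measured in whitespace-separated words rather than model tokens.

```python
from collections import Counter

# Hypothetical toy corpus: each document has a category label and its text.
documents = [
    {"category": "news", "text": "Markets rose today after the report."},
    {"category": "science", "text": "The probe sent new images of Jupiter."},
    {"category": "news", "text": "Local elections are scheduled for May."},
]

def dataset_stats(docs):
    """Return the dataset size, average length in words, and category distribution."""
    lengths = [len(d["text"].split()) for d in docs]
    return {
        "dataset_size": len(docs),
        "avg_length_words": sum(lengths) / len(docs) if docs else 0.0,
        "category_counts": Counter(d["category"] for d in docs),
    }

stats = dataset_stats(documents)
print(stats["dataset_size"], round(stats["avg_length_words"], 1), len(stats["category_counts"]))  # 3 6.3 2
```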

Typical preprocessing steps include:

- Clean the data by removing irrelevant or noisy documents.

- Handle missing values by imputing or removing them.

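- Tokenize the text, for example with byte-pair encoding (BPE) as used by GPT models, and apply only light normalization such as consistent Unicode and whitespace handling. Heavier normalization such as stemming, common in classic NLP pipelines, is usually avoided for generative models because the model needs to reproduce natural text.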
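The cleaning steps in the list above can be sketched as a single function, assuming documents arrive as plain strings with `None` or `""` marking missing entries. The `min_words` threshold is an arbitrary illustrative choice, and exact-match deduplication is the simplest option; real pipelines often add near-duplicate detection. Tokenization would follow as a separate step and is not shown.

```python
import re
import unicodedata

def clean_corpus(docs, min_words=3):
    """Normalize Unicode and whitespace, drop missing or very short docs, and remove exact duplicates."""
    seen = set()
    cleaned = []
    for doc in docs:
        if not doc:  # treat missing documents (None or "") as removable
            continue
        text = unicodedata.normalize("NFC", doc)
        text = re.sub(r"\s+", " ", text).strip()  # collapse runs of whitespace
        if len(text.split()) < min_words:  # drop fragments too short to be useful
            continue
        if text in seen:  # exact-duplicate removal
            continue
        seen.add(text)
        cleaned.append(text)
    return cleaned

raw = ["Hello   world, this is GPT.", "Hello world, this is GPT.", "", None, "ok"]
print(clean_corpus(raw))  # ['Hello world, this is GPT.']
```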

Lastly, it is worth mentioning that data collection and preprocessing can greatly impact the performance and effectiveness of AI GPT models. Properly collecting and preprocessing data ensures that the model is trained on high-quality and relevant information, leading to more accurate and reliable results.

Model architecture

The model architecture of AI GPT plays a crucial role in its performance and capabilities. It is a deep neural network built from a stack of Transformer decoder blocks. Each block combines a masked (causal) self-attention layer, which lets every position attend to itself and all earlier positions in the input sequence, with a feed-forward network. This architecture enables AI GPT to capture complex patterns and dependencies in the data, making it highly effective in tasks such as language generation and text completion.
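A minimal sketch of one causal self-attention head in plain Python helps show what the masking means. It assumes the query, key, and value vectors are given directly; a real GPT block computes them with learned linear projections, uses many heads, and adds residual connections, layer normalization, and the feed-forward layer, none of which are shown here.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def causal_self_attention(q, k, v):
    """Single-head scaled dot-product attention with a causal mask.

    q, k, v are lists of vectors, one per position. Position i may only
    attend to positions 0..i, so the model cannot peek at future tokens.
    """
    d = len(q[0])
    outputs, all_weights = [], []
    for i in range(len(q)):
        # Scores against the current and earlier positions only (the causal mask).
        scores = [sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(d) for j in range(i + 1)]
        weights = softmax(scores)
        # Output is the attention-weighted average of the visible value vectors.
        out = [sum(w * v[j][c] for j, w in enumerate(weights)) for c in range(len(v[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Toy 3-token sequence with 2-dimensional vectors, reused as q, k, and v.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, w = causal_self_attention(x, x, x)
print([len(row) for row in w])  # [1, 2, 3]
```

The growing row lengths show the causal mask at work: the first token sees only itself, the last sees the whole prefix.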

In addition to the attention layers, AI GPT adds positional information to each token's embedding, because self-attention on its own does not take word order into account. The original Transformer used fixed sinusoidal positional encodings, while GPT models such as GPT-2 learn their position embeddings during training. Knowing the order of the input is particularly important for understanding context and generating coherent responses.
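As a sketch of the learned-embedding approach, the input to the first block can be formed by adding a token embedding and a position embedding. The tables below are only randomly initialized stand-ins (in a real model they are trained), and the toy sizes are assumptions chosen for illustration.

```python
import random

def init_table(rows, dim, rng):
    """Small random initial values; in a real model this table is learned during training."""
    return [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(rows)]

def embed(token_ids, tok_table, pos_table):
    """Input to the first Transformer block: token embedding + position embedding."""
    if len(token_ids) > len(pos_table):
        raise ValueError("sequence longer than the maximum context length")
    return [
        [t + p for t, p in zip(tok_table[tok], pos_table[pos])]
        for pos, tok in enumerate(token_ids)
    ]

rng = random.Random(0)
vocab_size, max_len, dim = 10, 8, 4  # toy sizes, chosen for illustration
tok_table = init_table(vocab_size, dim, rng)
pos_table = init_table(max_len, dim, rng)

# The same token (id 3) at two positions gets two different input vectors.
h = embed([3, 3], tok_table, pos_table)
print(h[0] != h[1])  # True
```

The fixed size of the position table is also why such models have a maximum context length.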

To further enhance its performance, AI GPT uses a very large number of parameters that are learned during training: GPT-2 has up to 1.5 billion, and GPT-3 has 175 billion. These parameters enable the model to capture and represent a wide range of linguistic features, resulting in more accurate and diverse outputs.
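Where these parameter counts come from can be estimated with a back-of-the-envelope formula. This sketch assumes a GPT-2-style decoder whose output layer shares weights with the token embeddings, and it ignores biases and layer-norm weights, so it slightly undercounts.

```python
def approx_gpt_params(n_layer, d_model, vocab_size, n_ctx):
    """Rough parameter count for a GPT-2-style decoder.

    Each block has about 4*d^2 weights in attention (query, key, value and
    output projections) and 8*d^2 in the feed-forward layer (d -> 4d -> d),
    so roughly 12*d^2 per block. Biases and layer norms are ignored.
    """
    blocks = 12 * n_layer * d_model ** 2
    token_embeddings = vocab_size * d_model
    position_embeddings = n_ctx * d_model
    return blocks + token_embeddings + position_embeddings

# GPT-2 small: 12 layers, width 768, 50,257-token vocabulary, 1,024-token context.
n = approx_gpt_params(12, 768, 50257, 1024)
print(f"{n / 1e6:.1f}M")  # 124.3M
```

The estimate lands close to the roughly 124 million parameters usually quoted for GPT-2 small, and it shows that the block count grows with the square of the model width.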

Training process

The training process of AI GPT involves several steps to optimize the model’s performance. These steps include:

Data collection and preprocessing: Gathering a large and diverse dataset is crucial for training AI GPT. The data is preprocessed to remove noise, correct errors, and ensure consistency.

Model architecture: Designing the architecture of AI GPT involves determining the number of layers, the size of each layer, and the connections between them. This architecture affects the model’s capacity to learn and generate coherent responses.

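Training process: The preprocessed text is fed into the model, which learns to predict the next token at every position in a sequence. The gap between the predicted distribution and the actual next token is measured with a cross-entropy loss, and an optimization algorithm such as Adam adjusts the model's parameters to reduce it. This process is repeated over many update steps to improve the model's performance.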
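The training objective itself is compact. This sketch computes the average next-token cross-entropy from hypothetical per-position logits; the optimizer that would minimize this loss is not shown.

```python
import math

def next_token_loss(logits_per_position, token_ids):
    """Average cross-entropy for next-token prediction.

    logits_per_position[i] holds the model's scores for the token that
    follows position i, so it is compared with token_ids[i + 1].
    """
    if len(token_ids) < 2:
        raise ValueError("need at least two tokens to form a prediction target")
    losses = []
    for i, logits in enumerate(logits_per_position[:len(token_ids) - 1]):
        target = token_ids[i + 1]
        m = max(logits)
        log_sum = m + math.log(sum(math.exp(x - m) for x in logits))  # log-sum-exp
        losses.append(log_sum - logits[target])  # equals -log softmax(logits)[target]
    return sum(losses) / len(losses)

tokens = [0, 1, 2]
uniform = [[0.0, 0.0, 0.0]] * 3
confident = [[0.0, 5.0, 0.0], [0.0, 0.0, 5.0], [0.0, 0.0, 0.0]]
print(round(next_token_loss(uniform, tokens), 4))  # 1.0986 (= ln 3)
print(next_token_loss(confident, tokens) < next_token_loss(uniform, tokens))  # True
```

A model that spreads probability evenly over a 3-token vocabulary scores ln 3; confidently predicting the correct next tokens drives the loss toward zero.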

It is important to note that the training process of AI GPT can be computationally intensive and time-consuming, requiring powerful hardware and significant computational resources.

Fine-tuning AI GPT

Transfer learning

Transfer learning is a powerful technique in AI GPT that allows the model to leverage knowledge learned from one task to improve performance on another task. It is based on the idea that the knowledge gained from solving one problem can be applied to solve a related problem. In the context of AI GPT, transfer learning involves pretraining the model on a large dataset and then fine-tuning it on a specific task.
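One simple form of transfer learning keeps the pre-trained model frozen and trains only a small task-specific head on top of its features. In this sketch, `pretrained_features` is a hypothetical stand-in for a frozen pre-trained model (a real setup would use a GPT's hidden states), and the tiny sentiment dataset is made up. Full fine-tuning of GPT would instead update all of the model's weights.

```python
import math

def pretrained_features(text):
    """Hypothetical stand-in for a frozen pre-trained model: two hand-made features."""
    words = text.lower().split()
    pos = sum(w in {"great", "good", "love"} for w in words)
    neg = sum(w in {"bad", "awful", "hate"} for w in words)
    return [float(pos), float(neg)]

def train_head(examples, epochs=200, lr=0.5):
    """Train only a logistic-regression head with SGD; the feature extractor stays frozen."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for text, label in examples:
            x = pretrained_features(text)
            p = 1 / (1 + math.exp(-(w[0] * x[0] + w[1] * x[1] + b)))
            err = p - label  # gradient of the log loss with respect to the logit
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b

def predict(text, w, b):
    x = pretrained_features(text)
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0

train = [("I love this great phone", 1), ("awful battery, I hate it", 0),
         ("good value", 1), ("bad screen", 0)]
w, b = train_head(train)
print(predict("really good and great", w, b))  # 1
print(predict("bad and awful", w, b))  # 0
```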

Attention plays an important role in transfer learning for AI GPT. The self-attention layers learned during pre-training already capture how words relate to their context (in GPT, each token attends to all the tokens before it), so fine-tuning can build on these representations instead of learning them from scratch. This improves the model's ability to generate coherent and contextually relevant text on the new task.

Transfer learning in AI GPT can be further enhanced through the use of different types of transfer learning techniques. Chapter 14 of the free 15-chapter AI handbook titled ‘Transfer Learning – AI Perspectives’ provides a comprehensive explanation of the various types of transfer learning techniques used in AI GPT.

Domain adaptation

Domain adaptation is a crucial step in fine-tuning AI GPT models. It involves training the model on data from a specific target domain to improve its performance in that domain. This process allows the model to adapt to the specific characteristics and nuances of the target domain, making it more effective in generating relevant and accurate responses.

To achieve domain adaptation, several techniques can be employed. One common approach is to collect additional data from the target domain and include it in the training process. This helps the model learn the specific patterns and language used in the target domain, enhancing its ability to generate contextually appropriate responses.
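A common way to include target-domain data is to mix it with general data in each training batch. This sketch assumes an illustrative `domain_ratio` of 0.3 and placeholder example strings; the right ratio depends on the task and would normally be tuned. Keeping some general data in the mix can help the model retain its broad abilities while it adapts.

```python
import random

def mixed_batches(general, domain, domain_ratio=0.3, batch_size=4, n_batches=3, seed=0):
    """Yield training batches that mix general and target-domain examples.

    Each example is drawn from the target domain with probability
    domain_ratio, otherwise from the general data.
    """
    rng = random.Random(seed)
    for _ in range(n_batches):
        yield [rng.choice(domain) if rng.random() < domain_ratio else rng.choice(general)
               for _ in range(batch_size)]

general = ["general text A", "general text B"]
domain = ["legal clause 1", "legal clause 2"]
for batch in mixed_batches(general, domain):
    print(batch)
```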

Another technique is to fine-tune the model using transfer learning. By leveraging the knowledge and pre-trained weights from a base model, the fine-tuning process focuses on adapting the model to the target domain. This approach can significantly reduce the amount of labeled data required for training, making it more efficient and cost-effective.

It’s important to note that domain adaptation is not a one-time process. As the target domain evolves and new data becomes available, the model should be periodically retrained to ensure its continued effectiveness. Continuous improvement and adaptation are key to unlocking the full potential of AI GPT models.

Hyperparameter tuning

Hyperparameter tuning is a crucial step in fine-tuning AI GPT models. It involves finding good values for the hyperparameters, which are settings that are not learned during training but affect the model's performance, such as the learning rate, batch size, number of training epochs, and learning-rate warmup. Fine-tuning a GPT-like model requires a working understanding of these settings, because poor choices can lead to unstable training or overfitting. It is important to experiment with different values to find the right balance and improve the model's performance.
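Random search is one simple way to run these experiments. In this sketch, `validation_loss` is a hypothetical stand-in for "fine-tune with these settings and measure validation loss"; its minimum and the search ranges are made-up values for illustration. Sampling the learning rate on a log scale is a common choice because plausible values span several orders of magnitude.

```python
import math
import random

def validation_loss(lr, batch_size, epochs):
    """Hypothetical objective standing in for a real fine-tuning run.

    It has a made-up minimum near lr=3e-5, batch_size=16, epochs=3.
    """
    return ((math.log10(lr) - math.log10(3e-5)) ** 2
            + ((batch_size - 16) / 16) ** 2
            + ((epochs - 3) / 3) ** 2)

def random_search(n_trials=20, seed=0):
    """Try random configurations and keep the one with the lowest validation loss."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        params = {
            "lr": 10 ** rng.uniform(-6, -3),  # learning rate sampled on a log scale
            "batch_size": rng.choice([8, 16, 32, 64]),
            "epochs": rng.randint(1, 5),
        }
        loss = validation_loss(**params)
        if best is None or loss < best[0]:
            best = (loss, params)
    return best

loss, params = random_search()
print(params, round(loss, 3))
```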

Challenges and Limitations

Ethical considerations

Ethical considerations are an important aspect of working with AI GPT. Key concerns include bias in training data, misinformation and disinformation generated by GPT models, privacy, the broader impact on society, and potential job displacement. Addressing these concerns is crucial to ensure that AI GPT is used responsibly and ethically.

Bias and fairness

Bias and fairness are important considerations when working with AI GPT. Bias refers to the systematic favoritism or prejudice towards certain groups or individuals, which can lead to unfair outcomes. It is crucial to address and mitigate bias in AI models to ensure fairness and avoid perpetuating discrimination.

One way to address bias is through data preprocessing. By carefully selecting and preprocessing the training data, we can reduce bias and ensure that the model learns from a diverse and representative dataset.

Another important aspect is evaluation metrics. It is essential to choose appropriate metrics that capture fairness and avoid reinforcing existing biases. For example, using demographic parity or equal opportunity as evaluation metrics can help ensure fairness in AI GPT models.
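Both metrics compare rates across groups. This sketch assumes the system's outputs have already been mapped to binary decisions (for example, a classifier built on a GPT model) and uses made-up predictions, labels, and group names. Demographic parity compares positive-prediction rates; equal opportunity compares true-positive rates among examples whose true label is positive.

```python
def _rates_by_group(values, groups):
    """Mean of the values within each group."""
    by_group = {}
    for v, g in zip(values, groups):
        by_group.setdefault(g, []).append(v)
    return {g: sum(vs) / len(vs) for g, vs in by_group.items()}

def demographic_parity_gap(preds, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = _rates_by_group(preds, groups).values()
    return max(rates) - min(rates)

def equal_opportunity_gap(preds, labels, groups):
    """Largest difference in true-positive rate (recall on label 1) between groups."""
    pos = [(p, g) for p, y, g in zip(preds, labels, groups) if y == 1]
    rates = _rates_by_group([p for p, _ in pos], [g for _, g in pos]).values()
    return max(rates) - min(rates)

preds  = [1, 0, 1, 1, 0, 0, 1, 0]
labels = [1, 0, 1, 1, 1, 0, 1, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(round(demographic_parity_gap(preds, groups), 3))         # 0.5
print(round(equal_opportunity_gap(preds, labels, groups), 3))  # 0.667
```

A gap of 0 means the groups are treated identically on that metric; which metric matters most depends on the application.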

Additionally, it is important to involve diverse perspectives and stakeholders in the development and deployment of AI GPT systems. This can help identify and address potential biases and ensure that the technology is used in a fair and equitable manner.

To summarize, addressing bias and ensuring fairness in AI GPT is crucial. By carefully selecting and preprocessing data, choosing appropriate evaluation metrics, and involving diverse perspectives, we can work towards AI systems that are fairer and less biased.

Security and privacy

When it comes to AI GPT models, ensuring security and privacy is of utmost importance. The security of a GPT model encompasses data protection, model integrity, and user privacy. It’s crucial to recognize the potential risks, including unauthorized access, data breaches, and misuse of personal information. Organizations should implement robust security measures to safeguard the AI GPT system.

In addition to security, privacy is another critical aspect. Users should have control over their data and be informed about how it is being used. Transparency in data collection and usage practices is essential to build trust with users. Organizations should adhere to privacy regulations and implement mechanisms to protect user privacy.

To ensure both security and privacy, organizations can follow best practices such as:

- Implementing secure data storage and access controls

- Regularly auditing and monitoring the AI GPT system

- Conducting privacy impact assessments

- Providing clear and concise privacy policies
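One concrete privacy measure is to redact personal data from prompts and outputs before they are stored in logs. This is a minimal sketch with illustrative regular expressions for e-mail addresses and phone-like numbers only; real PII detection needs much broader coverage, and the phone pattern can also match other long digit sequences.

```python
import re

# Illustrative patterns only; they do not cover all forms of personal data.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}

def redact(text):
    """Replace e-mail addresses and phone-like numbers with placeholders before logging."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

print(redact("Contact jane.doe@example.com or +1 555-123-4567."))
# Contact [EMAIL] or [PHONE].
```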

By prioritizing security and privacy, organizations can mitigate risks and build trust with users.

Best Practices for AI GPT

Data quality and diversity

Ensuring data quality and diversity is crucial for training an effective AI GPT model. High-quality data helps improve the accuracy and reliability of the generated responses, while diverse data ensures that the model can handle a wide range of topics and contexts.

To achieve data quality, it is important to:

- Clean and preprocess the data to remove noise, errors, and irrelevant information.

- Validate the data to ensure it is accurate and reliable.

- Augment the data by adding variations and different perspectives.

In addition to data quality, diversity plays a significant role in enhancing the model’s performance. By including data from various sources, domains, and languages, the model becomes more versatile and capable of generating relevant responses in different scenarios.
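Diversity can also be tracked with a simple number. This sketch assumes each training document is tagged with a source label (the labels here are made up) and uses normalized Shannon entropy: 0 means everything comes from a single source, 1 means the sources are perfectly balanced. The same function works for domain or language labels.

```python
import math
from collections import Counter

def source_diversity(sources):
    """Normalized Shannon entropy of the label distribution (0 = one label, 1 = perfectly even)."""
    counts = Counter(sources)
    if len(counts) < 2:
        return 0.0
    total = sum(counts.values())
    entropy = -sum((c / total) * math.log(c / total) for c in counts.values())
    return entropy / math.log(len(counts))

print(source_diversity(["web", "books"]))                     # 1.0
print(round(source_diversity(["web"] * 9 + ["books"]), 2))    # 0.47
```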

Tip: Regularly evaluate and update the training data to maintain its quality and diversity, as new trends and topics emerge.

Human oversight and control

Human oversight and control play a crucial role in the deployment and management of AI GPT models. While AI GPT is designed to generate human-like text, it is important to have human supervision to ensure the quality and ethical standards of the generated content.

One way to exercise human oversight is through the use of human reviewers who review and rate the generated outputs. These reviewers can provide feedback and flag any potential biases, inaccuracies, or inappropriate content. Their expertise and judgment help in refining the model and improving its performance.

Additionally, establishing clear guidelines and policies for the use of AI GPT is essential. These guidelines should outline the intended use cases, potential limitations, and ethical considerations. They should also define the boundaries within which the AI GPT model operates, ensuring that it is used responsibly and in compliance with legal and ethical standards.

It is important to strike a balance between the capabilities of AI GPT and the need for human oversight and control. While AI GPT can automate many tasks and generate high-quality content, human involvement is necessary to ensure accountability, fairness, and the prevention of potential risks or biases.

Continuous improvement

Continuous improvement is a crucial aspect of harnessing the power of AI GPT. By constantly refining and enhancing the model, organizations can ensure that it stays up-to-date and delivers accurate and relevant results. There are several strategies that can be employed to achieve continuous improvement:

Feedback Loop: Establishing a feedback loop with users and incorporating their input into the model’s training process can help identify areas for improvement and address any shortcomings.

Monitoring and Evaluation: Regularly monitoring the performance of the AI GPT model and evaluating its outputs against predefined metrics can provide insights into its strengths and weaknesses. This information can then be used to guide further improvements.
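Perplexity is a standard metric for monitoring a language model: the exponential of the average negative log-likelihood per token, where lower is better. This sketch uses hypothetical per-token log-probabilities from an evaluation run and an assumed 5% tolerance for flagging a regression against a baseline.

```python
import math

def perplexity(token_log_probs):
    """Perplexity = exp(mean negative log-likelihood per token); lower is better."""
    nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(nll)

def check_regression(current, baseline, tolerance=0.05):
    """Flag a regression if perplexity rose more than `tolerance` above the baseline."""
    return current > baseline * (1 + tolerance)

# Hypothetical per-token log-probabilities from an evaluation run.
log_probs = [math.log(0.5), math.log(0.25), math.log(0.5), math.log(0.25)]
ppl = perplexity(log_probs)
print(round(ppl, 3))                        # 2.828
print(check_regression(ppl, baseline=2.5))  # True
```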

Collaboration and Knowledge Sharing: Encouraging collaboration among researchers, developers, and users of AI GPT can foster a culture of continuous learning and improvement. Sharing knowledge, best practices, and lessons learned can accelerate progress in the field.

Experimentation and Innovation: Embracing a culture of experimentation and innovation allows organizations to explore new techniques, algorithms, and approaches to enhance the capabilities of AI GPT. By pushing the boundaries of what is possible, breakthroughs can be achieved.

In summary, continuous improvement is essential for maximizing the potential of AI GPT. By implementing feedback loops, monitoring performance, fostering collaboration, and embracing experimentation, organizations can ensure that AI GPT evolves and remains at the forefront of AI technology.

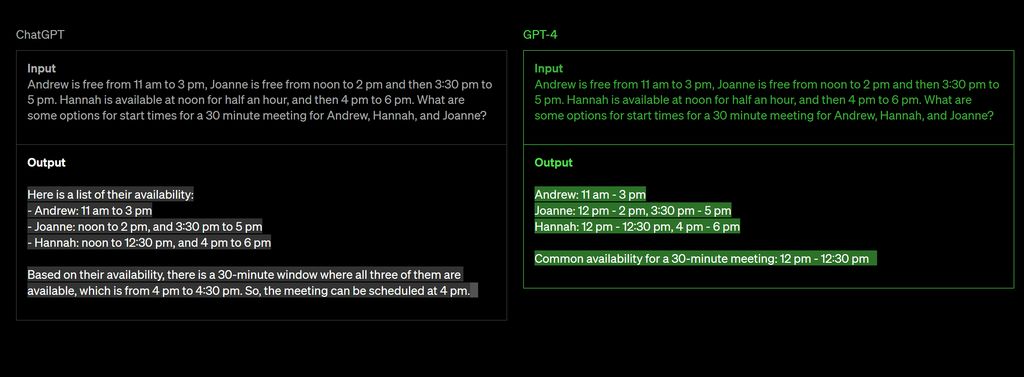

ChatGPT is an advanced conversational AI model developed by OpenAI. It has revolutionized the way we interact with AI systems, providing a more natural and engaging experience. With its powerful language generation capabilities, ChatGPT can be used in various applications such as customer support, virtual assistants, and content creation. If you want to experience the power of ChatGPT, visit our website and explore the best practices for AI GPT.

Conclusion

In conclusion, AI GPT is a powerful tool that has the potential to revolutionize various industries. Its ability to generate human-like text and understand context makes it a valuable asset for tasks such as content creation, customer support, and data analysis. By harnessing the power of AI GPT, businesses can streamline their operations, improve efficiency, and enhance the overall user experience. As AI technology continues to advance, it is important for organizations to stay updated and explore the possibilities that AI GPT offers. Embracing this technology can unlock new opportunities and drive innovation in the digital age.

Frequently Asked Questions

What is AI GPT?

AI GPT refers to GPT (Generative Pre-trained Transformer), a family of state-of-the-art language models developed by OpenAI.

How does AI GPT work?

AI GPT uses a Transformer architecture to process the input it receives and generate human-like text one token at a time, predicting each next token from the input and the text produced so far.

What are the applications of AI GPT?

AI GPT has a wide range of applications, including natural language understanding, text generation, chatbots, content creation, and more.

How is AI GPT trained?

AI GPT is pre-trained on a large dataset of text, much of it from the internet, by learning to predict the next token. In doing so, it learns the patterns and structures in the data that allow it to generate coherent and contextually relevant responses.

Can AI GPT be fine-tuned for specific tasks?

Yes, AI GPT can be fine-tuned on specific datasets to perform tasks such as sentiment analysis, language translation, summarization, and more.

What are the challenges and limitations of AI GPT?

Some challenges and limitations of AI GPT include ethical considerations, potential bias in generated text, and the need for human oversight to ensure responsible use.