Overview

Introduction to ChatGPT

ChatGPT is a large language model trained on a large corpus of text. It is designed to generate human-like responses to user input, which makes it well suited to conversational applications. Training exposes the model to a wide range of text sources, including books, articles, and websites, so that it encounters diverse language patterns and concepts and can produce coherent, contextually relevant responses. Its performance is then improved iteratively through fine-tuning and feedback from human reviewers. Likely applications include customer support, content creation, and language translation.

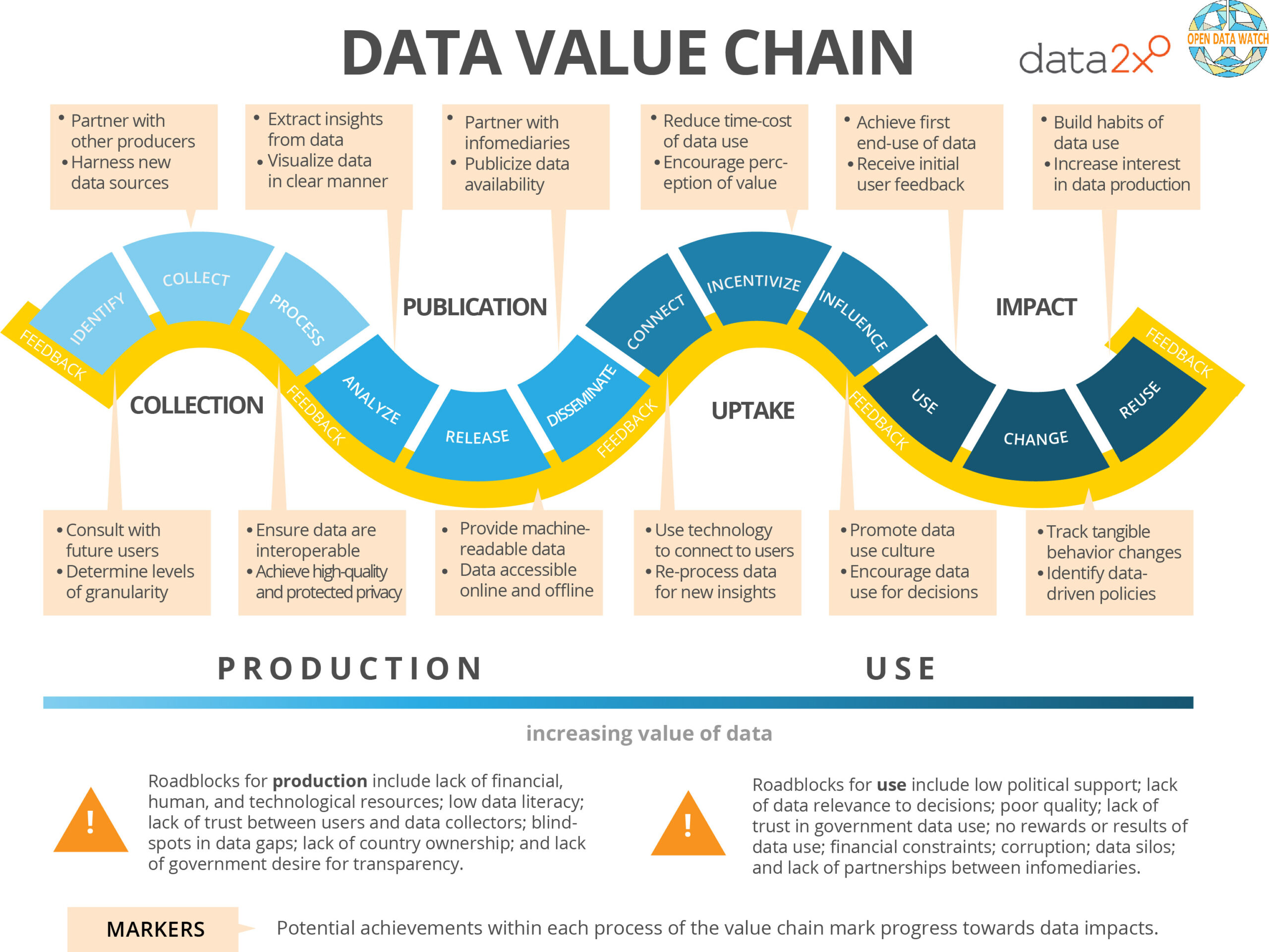

Importance of training data

Training data is the foundation of any machine learning model: it supplies the examples from which the model learns to make predictions. If the training data is of poor quality, the model’s accuracy and performance suffer. Progress in AI depends heavily on training data because it is what lets a model pick up patterns, make connections, and generate useful responses. For the best results, the training data should be diverse, representative, and up to date.

Role of data in improving ChatGPT

Data plays a central role in improving ChatGPT. A large and diverse dataset makes the model’s conversations more accurate and contextually relevant, because it helps ChatGPT learn patterns, pick up language nuances, and generate more coherent responses. Examining the data also lets us identify and address biases, which helps make ChatGPT’s interactions fairer. With the right data, we can continue to train and fine-tune ChatGPT to improve its performance and the user experience.

Data Collection Process

Defining the objectives

To train ChatGPT effectively, first define the objectives clearly, starting with the communication skills the model should have. These may include holding natural, coherent conversations, understanding and answering user queries accurately, and giving informative, helpful responses. Defining the objectives up front keeps the training process focused on those capabilities.

Identifying relevant data sources

Training ChatGPT well depends on identifying relevant data sources. These can include a variety of text corpora, such as online forums, social media platforms, and books, as well as specialized datasets for specific domains or topics. The data should be carefully curated for quality and relevance, and data augmentation techniques can be applied to increase the size and diversity of the training set. Drawing on a wide range of high-quality sources helps the model understand and respond to a broader range of inputs.

Implementing data collection methods

Effective training also requires robust data collection methods that yield diverse, high-quality data. Common sources include online forums, social media platforms, and public datasets. Online forums provide a wealth of user-generated content covering a wide range of conversational data. Social media platforms offer real-time interactions and discussions that capture current trends and topics. Public datasets provide curated, structured data suited to specific domains or tasks. Drawing on these sources, developers can gather the data needed to improve ChatGPT’s conversational abilities.

Data Preprocessing

Cleaning and filtering the data

Cleaning and filtering the data is a crucial step in training ChatGPT. It ensures that the model is trained on high-quality, relevant information, which in turn improves its ability to generate accurate and coherent responses. The dataset used for training should therefore be curated carefully. Useful techniques include removing duplicate entries, eliminating irrelevant or biased content, and applying language-specific preprocessing such as tokenization and stemming. It is also important to consider the ethical implications of the data and to make sure it aligns with the desired outcomes and values of the application.
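As a rough illustration of the deduplication and filtering steps, here is a minimal Python sketch. The function names (`normalize`, `clean_corpus`), the `min_words` threshold, and the blocklist are all hypothetical choices made for this example, not part of any particular training pipeline.

```python
import re

def normalize(text: str) -> str:
    """Collapse whitespace and lowercase so near-identical entries compare equal."""
    return re.sub(r"\s+", " ", text).strip().lower()

def clean_corpus(records, min_words=3, blocklist=()):
    """Drop duplicates, very short entries, and entries containing blocklisted terms.

    Blocklist terms are assumed to be lowercase substrings.
    """
    seen = set()
    cleaned = []
    for text in records:
        key = normalize(text)
        if key in seen:
            continue  # duplicate after normalization
        if len(key.split()) < min_words:
            continue  # too short to be a useful training example
        if any(term in key for term in blocklist):
            continue  # matches a term we chose to exclude
        seen.add(key)
        # Keep the original casing, with whitespace tidied up.
        cleaned.append(re.sub(r"\s+", " ", text).strip())
    return cleaned

sample = [
    "How do I reset my password?",
    "how do I  reset my password?",   # duplicate apart from case and spacing
    "ok",                             # too short
    "Buy cheap followers now, limited offer!",
    "What are your opening hours on weekends?",
]
cleaned = clean_corpus(sample, min_words=3, blocklist=("buy cheap",))
print(cleaned)
```

A substring blocklist is deliberately crude. In practice, filtering for relevance or bias usually needs classifiers or human review on top of simple rules like these.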

Removing sensitive information

To protect the privacy and security of users, remove any sensitive information shared during conversations with the chatbot. This includes personally identifying information such as names, addresses, and phone numbers, as well as financial data. Confidential or proprietary information should also be filtered out to prevent unauthorized access or misuse. Robust data sanitization, together with regular updates to the chatbot’s filtering rules, is essential to maintaining a safe and trustworthy user experience.
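One simple form of sanitization is pattern-based redaction. The sketch below masks email addresses and phone numbers with placeholder tokens. The regular expressions and the `redact` function are illustrative assumptions and will miss many real-world formats. Names and street addresses cannot be caught reliably with patterns like these and typically need a named-entity recognizer or manual review.

```python
import re

# Illustrative patterns only; real data will contain formats these do not cover.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"(?<!\w)\+?\d[\d\s().-]{7,}\d(?!\w)")

def redact(text: str) -> str:
    """Replace email addresses and phone-like digit runs with placeholder tokens."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text

message = "Contact me at jane.doe@example.com or +1 (555) 010-9999."
print(redact(message))
```

Replacing values with typed placeholders such as `[EMAIL]`, rather than deleting them, keeps the sentence structure intact for training.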

Handling missing or incomplete data

When training ChatGPT, it is crucial to address missing or incomplete data. Missing data is information that is absent altogether, while incomplete data is only partially available. Both pose challenges for training. One way to handle missing values is to impute them, using techniques such as mean imputation or regression imputation. Another is to exclude incomplete records from the training set. Either choice can affect the model’s performance and generalizability, so its impact should be considered carefully. Key points in the training process should be identified and monitored to confirm that the model is learning effectively and is not being adversely affected by missing or incomplete data.
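The two approaches can be combined, as in the sketch below: records with an empty text field are excluded, and a missing numeric value is filled with the mean of the observed values. The record layout (`prompt`, `response`, `rating`) and the `handle_missing` function are hypothetical and chosen only for illustration. Mean imputation applies to numeric fields, not to the conversation text itself.

```python
from statistics import mean

def handle_missing(records, required=("prompt", "response"), numeric_field="rating"):
    """Drop records lacking required text; mean-impute a missing numeric field."""
    # Exclusion: keep only records whose required text fields are non-empty.
    complete = [
        r for r in records
        if all((r.get(field) or "").strip() for field in required)
    ]
    # Mean imputation: compute the mean over the observed values only.
    observed = [r[numeric_field] for r in complete if r.get(numeric_field) is not None]
    fill_value = mean(observed) if observed else None
    result = []
    for r in complete:
        filled = dict(r)  # copy so the input records are not modified
        if filled.get(numeric_field) is None:
            filled[numeric_field] = fill_value
        result.append(filled)
    return result

records = [
    {"prompt": "Hi", "response": "Hello!", "rating": 4},
    {"prompt": "Help", "response": "", "rating": 5},            # incomplete: dropped
    {"prompt": "Bye", "response": "Goodbye!", "rating": None},  # rating imputed
    {"prompt": "Thanks", "response": "You're welcome.", "rating": 2},
]
prepared = handle_missing(records)
print(prepared)
```

Note that the mean is computed after incomplete records are dropped, so excluded rows do not influence the imputed value. Whether that is the right choice depends on why the data is missing.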

Training and Fine-tuning

Preparing the data for training

To train ChatGPT effectively, the data must be prepared carefully. This involves cleaning and preprocessing it for quality and consistency, and annotating it with relevant information such as the questions users might ask. One way to do this is to build a table of question types and their corresponding answers, which then serves as a reference during training and helps ChatGPT generate accurate, relevant responses. Another useful technique is to compile lists of common phrases or expressions that users might use when interacting with the chatbot, so the model can be trained to recognize and respond to those inputs. Careful preparation improves the performance and reliability of ChatGPT.
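A question-and-answer table like the one described can be turned into training examples with a few lines of Python. The sketch below assumes a chat-style JSON Lines layout with `system`, `user`, and `assistant` messages, which is a common convention for chat fine-tuning. The table contents, the `to_jsonl` helper, and the system prompt are made up for this example, and the exact format your provider expects should be checked against its documentation.

```python
import json

# Hypothetical table of question types with example questions and answers.
QA_TABLE = [
    {"type": "billing", "question": "How can I update my payment method?",
     "answer": "Open Settings, choose Billing, and select Update payment method."},
    {"type": "account", "question": "How do I change my email address?",
     "answer": "Go to your profile page and edit the email field."},
]

def to_jsonl(rows, system_prompt="You are a helpful support assistant."):
    """Convert table rows into one chat-style JSON object per line."""
    lines = []
    for row in rows:
        example = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": row["question"]},
                {"role": "assistant", "content": row["answer"]},
            ]
        }
        lines.append(json.dumps(example, ensure_ascii=False))
    return "\n".join(lines)

jsonl_text = to_jsonl(QA_TABLE)
print(jsonl_text)
```

The `type` column is not written to the output here. It is still useful for checking that each question type is represented by enough examples.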

Choosing the right model architecture

When building a chatbot like ChatGPT, choosing the right model is crucial, because the model’s architecture determines the chatbot’s overall performance and capabilities. Several well-known models are available, such as GPT-3, GPT-4, and BERT. Each has its strengths and weaknesses: the GPT models are decoder-style transformers built for text generation, while BERT is an encoder-only model better suited to understanding tasks such as classification than to generating replies. Factors to consider include the size of the model, computational requirements, and the specific task at hand. It also helps to stay up to date with advances in natural language processing so that you can choose among current models.

Fine-tuning the model with additional data

To improve ChatGPT’s performance, the model can be fine-tuned with additional data. Fine-tuning means continuing to train the model on a specific dataset to adapt it to a particular task or domain, so that it learns from examples representative of that domain. One way to obtain additional data is to use language models and other text generation techniques to produce synthetic examples that augment the training set. Another is to collect data from sources such as online forums or customer support chats. With diverse data, the model can be trained to handle a wide range of user queries and give more accurate, relevant responses.
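Before fine-tuning on data gathered this way, it is common to hold back part of it so you can check whether the model generalizes beyond the examples it was trained on. The sketch below shows one way to do that with a seeded shuffle. The `train_val_split` helper, the 20% validation fraction, and the placeholder examples are assumptions made for illustration.

```python
import random

def train_val_split(examples, val_fraction=0.2, seed=0):
    """Shuffle with a fixed seed and hold out a fraction for validation."""
    shuffled = list(examples)              # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)  # seeded for a reproducible split
    n_val = int(len(shuffled) * val_fraction)
    return shuffled[n_val:], shuffled[:n_val]

# Placeholder examples standing in for collected or synthetic conversations.
examples = [f"example {i}" for i in range(10)]
train, val = train_val_split(examples)
print(len(train), len(val))
```

If the data mixes sources, such as forum posts and support chats, it can be worth splitting each source separately so that both sets contain every source.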