Overview

Introduction to ChatGPT

ChatGPT is an advanced language model developed by OpenAI that generates human-like responses to text prompts. It is built on the Transformer, a deep learning architecture for understanding and generating text. It was trained on a vast amount of text, much of it drawn from the internet, which allows it to produce coherent and contextually relevant responses. Despite these impressive capabilities, ChatGPT also carries risks and limitations that need to be considered.

Benefits of ChatGPT

ChatGPT has several benefits that make it a valuable tool for a range of applications. First, it supports interactive, dynamic conversations with users, providing a more engaging and personalized experience. Second, it can help users find and summarize information quickly, making it a useful starting point for research, although its answers still need to be verified. Third, it can be used for customer support, answering frequently asked questions and providing assistance in real time. Fourth, it can serve as a language learning tool, allowing users to practice their language skills and receive feedback. Finally, ChatGPT can be integrated into other platforms and applications, making it accessible and convenient for users.

Limitations of ChatGPT

Although ChatGPT is impressive in its ability to generate coherent and contextually relevant responses, it has some notable limitations. One of the main limitations concerns its language capabilities. While ChatGPT can understand and generate text in many languages, its proficiency varies between them: it tends to perform better in languages with large amounts of training data, such as English, than in languages with limited training data. Additionally, ChatGPT may struggle to understand and respond accurately to complex or technical topics in any language. These limitations are important to keep in mind when using ChatGPT for language-intensive tasks.

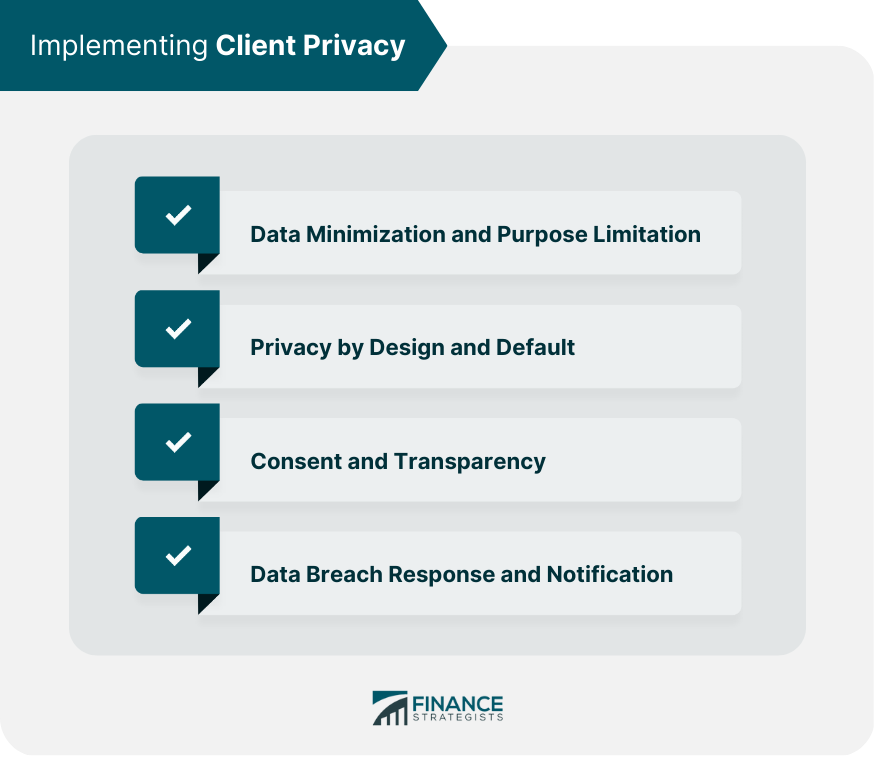

Privacy Concerns

Data Privacy

Data privacy is a major concern when it comes to ChatGPT. Beyond the large datasets used to train it, ChatGPT processes whatever users type into a conversation, which can include personal information and sensitive details. Depending on account settings and OpenAI's data-usage policies, these conversations may be stored and used to improve future models. Although safeguards are in place to protect user data, breaches or unauthorized access remain possible. Users should therefore be cautious about the information they share with ChatGPT and understand the privacy policies and safeguards that apply.
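One practical way to act on this advice is to strip obvious personal details from a message before it is shared with a chatbot. The following is a minimal sketch, assuming that simple regular expressions for email addresses and phone numbers are enough for illustration; the function name `redact_pii` and both patterns are hypothetical, and real personal-data detection needs far more than a pair of regexes.

```python
import re

# Hypothetical, deliberately simple patterns; they will miss many real-world formats.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\+?\d[\d\s-]{7,}\d")


def redact_pii(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders
    before the text is sent to a chatbot."""
    # Redact emails first so their characters are not matched by later patterns.
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


if __name__ == "__main__":
    prompt = "Email me at jane.doe@example.com or call +27 21 555 0199."
    print(redact_pii(prompt))  # Email me at [EMAIL] or call [PHONE].
```

Redacting on the user's side means the sensitive values never leave the device, regardless of how the service later stores or uses the conversation.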

Security Risks

Security is another concern. Because conversations with ChatGPT can contain confidential information, any compromise of the service, or of an application built on it, could expose that information. ChatGPT may also be targeted by malicious users who craft prompts designed to bypass its safeguards and make it produce inappropriate or harmful content. Robust security measures, such as limiting the sensitive data sent to the model and filtering its outputs, are needed to mitigate these risks and ensure that ChatGPT is used safely.

Ethical Considerations

Using ChatGPT also raises several ethical considerations. One of the main concerns is the model's potential to produce harmful or offensive content. Although OpenAI has made efforts to mitigate this issue, the system may still generate inappropriate or biased responses. A related concern is that the model may reinforce existing biases or perpetuate harmful stereotypes. ChatGPT learns from the data it is trained on, which can include biased or unrepresentative information, so its responses can reflect and amplify societal biases. Its outputs should therefore be monitored and evaluated continuously to ensure that it is used responsibly and in line with ethical guidelines and values.

Accuracy and Reliability

Potential for Misinformation

One of the main concerns about ChatGPT is its potential to spread misinformation. Because it generates responses from patterns in its training data rather than by consulting verified sources, it can produce inaccurate or misleading information, sometimes stated with full confidence. This is especially likely for complex or controversial topics, and for events that occurred after its training data was collected. In addition, ChatGPT does not always grasp the context or nuances of a conversation, which can lead to responses that miss the point or are easily misunderstood. Information provided by ChatGPT should be evaluated critically and verified against reliable sources.

Bias in Responses

One potential risk of ChatGPT is bias in its responses. Because ChatGPT is trained on a large amount of text from the internet, it can inadvertently learn and reproduce the biases present in that data, resulting in biased or discriminatory responses to certain inputs. For example, if the training data contains biased information about a particular group of people, ChatGPT may generate responses that perpetuate those biases. Such bias is not intentional; it reflects the biases in the training data. To mitigate this risk, OpenAI has taken measures to reduce bias, such as fine-tuning the model on curated data with feedback from human reviewers and giving those reviewers guidelines for handling potentially biased topics. However, eliminating bias completely remains a challenging task, and ongoing effort is needed to keep ChatGPT's responses fair and unbiased.

Lack of Fact-Checking

Another limitation of ChatGPT is its lack of fact-checking. ChatGPT generates responses from patterns learned during training rather than by checking claims against authoritative sources, so it can spread inaccurate information. Without proper fact-checking mechanisms in place, users may unknowingly consume and share false information. This can have serious consequences, especially in areas such as news reporting, medical advice, or legal guidance. Addressing this limitation requires robust fact-checking systems that can verify the accuracy of the information chatbots provide.

User Dependency and Addiction

Overreliance on ChatGPT

ChatGPT has changed the way many people communicate and interact online. However, it is important to recognize the risks and limitations of relying on it too heavily. One key concern is its limited contextual understanding: ChatGPT may generate responses that are factually incorrect or inappropriate for a given situation, and users who accept its answers without scrutiny inherit those errors. There is also a risk of reinforcing bias. If ChatGPT is trained on biased or prejudiced data, it may perpetuate harmful stereotypes or discriminatory behavior without any intent to do so. Moreover, its responses are shaped by its training data, which may not adequately represent the diverse perspectives and experiences of South African users. Using ChatGPT responsibly and critically evaluating its outputs helps mitigate these risks and keeps conversations more inclusive and accurate.

Potential for Addiction

Another concern is the potential for addiction. OpenAI's ChatGPT is highly engaging and interactive, which may encourage compulsive use in some users. Because the model is always available and responds instantly, it can lead to excessive use and dependency. Users should be aware of their usage patterns and set boundaries to avoid this.

Impact on Social Interactions

ChatGPT has the potential to greatly affect social interactions, both positively and negatively. On the positive side, it can facilitate communication between people who speak different languages or have difficulty expressing themselves verbally. It can also provide a safe space for people to practice social skills and gain confidence in their interactions. However, there are also risks and limitations to consider. Parents may be concerned about ChatGPT's influence on their children's social development and about their children's possible exposure to inappropriate content. Additionally, relying on ChatGPT for social interaction may reduce face-to-face communication and hinder the development of essential social skills.