Overview

Introduction to ChatGPT

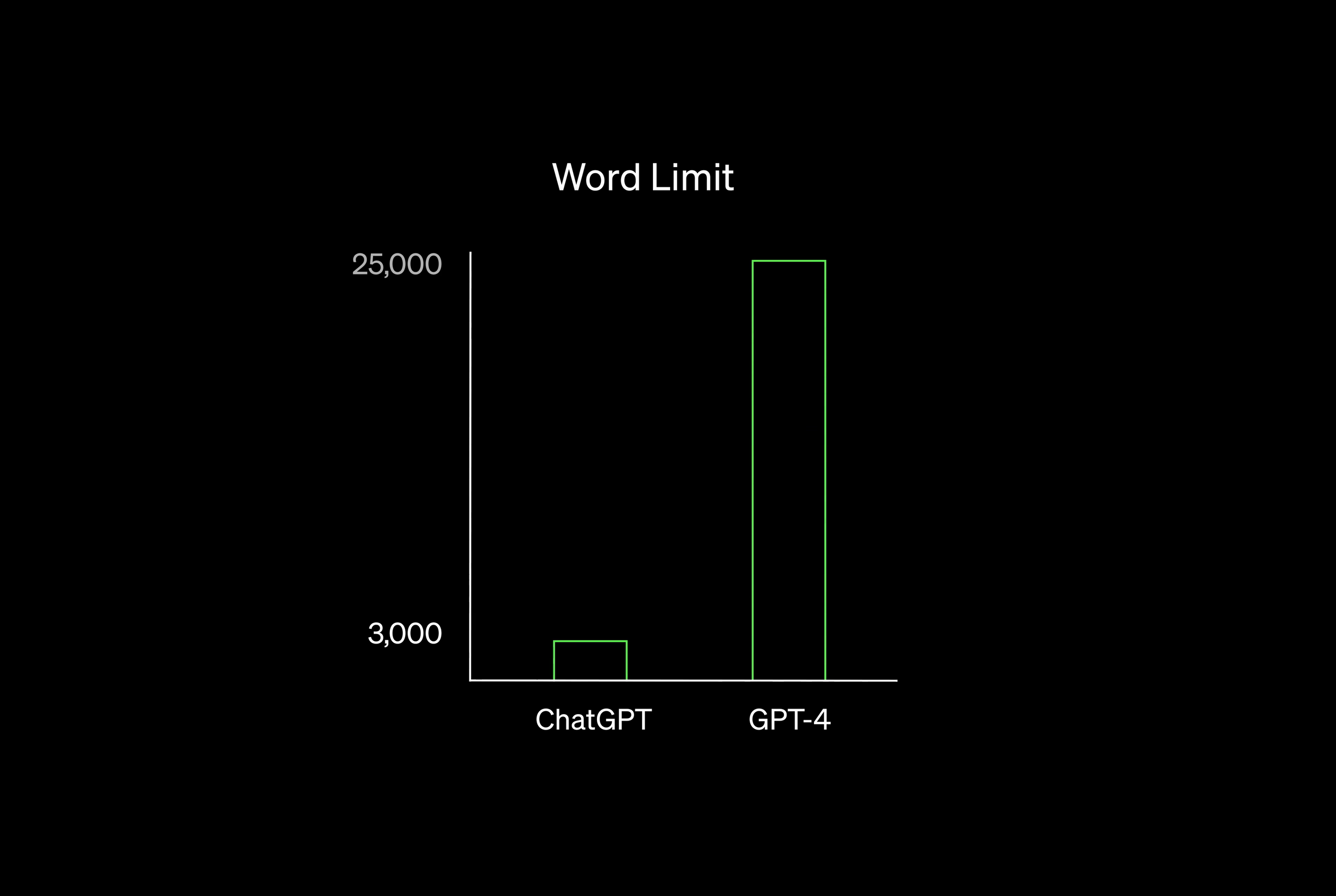

ChatGPT is a conversational language model developed by OpenAI and launched in November 2022. It was fine-tuned from a model in the GPT-3.5 series, itself a refinement of GPT-3, and is designed to generate human-like responses in conversational settings. Because it keeps track of context and gives coherent answers, ChatGPT has changed the way many people interact with AI. This article traces the journey from the first GPT model to ChatGPT, highlighting key milestones and advancements.

Evolution of GPT models

GPT models have advanced significantly over the years. GPT-1, released in 2018, used a transformer architecture and was trained on a large corpus of text. It could generate coherent and contextually relevant continuations of a prompt, but it struggled with complex queries and often gave inaccurate answers. GPT-2 and GPT-3 addressed these limitations to a great extent by using much larger models and more diverse training data. ChatGPT builds on the foundation of GPT-3, by way of the GPT-3.5 series, and is specifically designed for conversational interactions. It shows strong language understanding and generates engaging responses. This progression has paved the way for more advanced and interactive AI-powered conversations.

Key features of ChatGPT

ChatGPT is designed to generate human-like responses in conversational settings. It is the product of several model generations, from GPT-1 onward, each aimed at better natural language understanding and more coherent, contextually relevant responses. The model is built on the transformer architecture, pretrained on a wide range of internet text, and then fine-tuned for dialogue. The broad pretraining lets it handle diverse topics, and the fine-tuning helps it hold informative and engaging conversations.

Development of GPT-1

Introduction to GPT-1

GPT-1, short for Generative Pre-trained Transformer 1, was the first in a series of language models developed by OpenAI. It was released in June 2018 and gained attention for its ability to generate coherent and contextually relevant text. GPT-1 was a significant milestone in natural language processing because it showed that a transformer pretrained on unlabeled text could then be adapted to many tasks. Although GPT-1 had limitations, such as occasional nonsensical output and a lack of fine-grained control, it laid the foundation for subsequent models like ChatGPT. It gave researchers and developers a starting point for exploring language generation and paved the way for later advances in conversational AI.

Training process of GPT-1

The training process of GPT-1 involved several steps. First, a large text dataset was assembled; GPT-1 used BooksCorpus, a collection of several thousand unpublished books. The text was preprocessed and split into tokens so that it was in a suitable format for training. Next, the data was fed into the GPT-1 model, a 12-layer transformer decoder with about 117 million parameters. The model was trained without labels (unsupervised pretraining): it learned to predict the next token in a sequence from the tokens that came before it. Training iteratively updated the model’s parameters to minimize the difference between its predictions and the actual next tokens in the dataset. This process was computationally intensive and required powerful hardware. Finally, after pretraining, the GPT-1 model was fine-tuned on labeled data to improve its performance on specific tasks.
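The next-token objective described above can be illustrated with a toy model. The following is a minimal sketch, not GPT-1's actual training code: it assumes a tiny bigram model (one row of scores per previous word) in place of a transformer, a made-up nine-word corpus, and plain gradient descent. The names `train_bigram`, `steps`, and `lr` are hypothetical and chosen only for this example.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train_bigram(tokens, vocab_size, steps=200, lr=0.5):
    """Fit logits[prev][next] by minimizing average next-token cross-entropy."""
    logits = [[0.0] * vocab_size for _ in range(vocab_size)]
    pairs = list(zip(tokens[:-1], tokens[1:]))  # (context token, next token)
    n = len(pairs)
    losses = []
    for _ in range(steps):
        grad = [[0.0] * vocab_size for _ in range(vocab_size)]
        loss = 0.0
        for prev, nxt in pairs:
            probs = softmax(logits[prev])
            loss -= math.log(probs[nxt])  # penalty for the true next token
            for j in range(vocab_size):
                # Gradient of cross-entropy w.r.t. logits: probs - one_hot(target)
                grad[prev][j] += probs[j] - (1.0 if j == nxt else 0.0)
        # Gradient descent step on the averaged gradient
        for i in range(vocab_size):
            for j in range(vocab_size):
                logits[i][j] -= lr * grad[i][j] / n
        losses.append(loss / n)
    return logits, losses

# Toy corpus (an assumption for illustration only)
text = "the cat sat on the mat the cat ate".split()
vocab = sorted(set(text))
ids = [vocab.index(w) for w in text]

logits, losses = train_bigram(ids, len(vocab))
after_on = logits[vocab.index("on")]
predicted = vocab[after_on.index(max(after_on))]
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}; after 'on' the model predicts '{predicted}'")
```

The loss starts at the value for uniform guessing and falls as the scores are updated. A real GPT model replaces the lookup table with a transformer that conditions on the whole preceding sequence, but the quantity being minimized has the same form.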

Limitations of GPT-1

Despite its impressive capabilities, GPT-1 had several limitations that needed to be addressed. The model struggled to keep track of context and often produced nonsensical or biased responses. Its output could also ramble, running long without adding information. Beyond the model itself, the lack of regulation of AI raised ethical and safety concerns about how such systems might be used. These limitations highlighted the need for further research and development to improve the performance and reliability of language models.

Advancements in GPT Models

Introduction to GPT-2

GPT-2, short for Generative Pre-trained Transformer 2, is the successor to GPT-1 and was announced by OpenAI in February 2019. It was trained on WebText, a dataset of roughly 8 million web pages, which allowed it to generate coherent and contextually relevant text across many topics. The largest version has 1.5 billion parameters, making it one of the largest language models of its time. GPT-2 has been widely used in applications such as text generation, translation, and chatbots. It also drew attention and controversy over its potential for misuse, which led OpenAI to stage the release and publish the full model only in November 2019. In subsequent years OpenAI released larger GPT models, as well as ChatGPT, which has been made available for public use.

Enhancements in GPT-2

GPT-2 brought several enhancements over GPT-1. First, it increased model capacity more than tenfold, from about 117 million to 1.5 billion parameters, and doubled the context window from 512 to 1,024 tokens. Second, it kept the same next-token training objective but showed that a sufficiently large model can perform many tasks zero-shot, that is, without task-specific fine-tuning, when the task is posed in the prompt. Together, the added capacity and broader training data eased many of the capacity limits seen in GPT-1. Overall, these enhancements made GPT-2 a more powerful and versatile language model.

Introduction to GPT-3

GPT-3, short for Generative Pre-trained Transformer 3, is the third iteration of OpenAI's GPT series. It was described in a paper published in May 2020 and became available through OpenAI's API on June 11, 2020. GPT-3 is known for its ability to generate human-like text and has been widely used in applications such as language translation, content generation, and coding assistance. With 175 billion parameters, more than 100 times as many as GPT-2, it can produce contextually relevant responses across a wide range of prompts. Since its release, GPT-3 has garnered significant attention and has pushed the boundaries of what is possible in natural language processing.
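The parameter counts quoted for GPT-2 and GPT-3 can be roughly reproduced from the shape of each model. The sketch below is a back-of-the-envelope estimate, not an official formula: it assumes each transformer layer holds about 12 × d_model² weights (attention plus feed-forward, ignoring biases and layer norms) and adds the token and position embeddings. The layer counts, widths, vocabulary size, and context lengths are taken from the published GPT-2 and GPT-3 papers, not from this article, and the function name `estimate_params` is hypothetical.

```python
def estimate_params(n_layers, d_model, vocab_size, context_len):
    """Approximate parameter count of a GPT-style decoder-only transformer."""
    # Attention projections (~4 * d^2) plus feed-forward block (~8 * d^2) per layer
    per_layer = 12 * d_model ** 2
    # Token embeddings plus learned position embeddings
    embeddings = (vocab_size + context_len) * d_model
    return n_layers * per_layer + embeddings

# Configurations as reported in the GPT-2 and GPT-3 papers (assumed here)
gpt2 = estimate_params(n_layers=48, d_model=1600, vocab_size=50257, context_len=1024)
gpt3 = estimate_params(n_layers=96, d_model=12288, vocab_size=50257, context_len=2048)

print(f"GPT-2 estimate: {gpt2 / 1e9:.2f}B parameters")
print(f"GPT-3 estimate: {gpt3 / 1e9:.2f}B parameters")
```

The estimates land close to the quoted 1.5 billion and 175 billion. Almost all of the growth comes from the d_model² term, which is why widening the model raises the parameter count so quickly.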

Introducing ChatGPT

Introduction to ChatGPT

ChatGPT is a conversational language model developed by OpenAI. It belongs to the GPT series, where GPT stands for Generative Pre-trained Transformer. The GPT series has reshaped natural language processing and has had a significant impact on various fields. ChatGPT, in particular, is designed for interactive, multi-turn conversations with users. Because it generates coherent and contextually relevant responses, it has the potential to change business operations by providing efficient and personalized customer support, improving chatbot experiences, and enhancing virtual assistants.

Training process of ChatGPT

ChatGPT is trained in two main phases: pretraining and fine-tuning. In the pretraining phase, the model is trained on a large corpus of text to learn the statistical patterns of language. This gives it a general grasp of grammar, syntax, and semantics. In the fine-tuning phase, the model is trained on a more specific dataset of conversations and then refined with human feedback, a technique known as reinforcement learning from human feedback (RLHF), in which people rank alternative responses and those rankings guide further training. Fine-tuning improves the model's ability to generate coherent and contextually relevant responses. Together, these phases enable ChatGPT to hold more accurate and engaging conversations.
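The article does not spell out how human feedback enters training. One common formulation, used in published work on learning from human preferences, first trains a reward model on pairs of responses that people have ranked, then uses that reward model to guide the language model. The sketch below shows only the pairwise loss for the reward model, under the assumption that it outputs a single scalar score per response; `preference_loss` is a hypothetical name, and this is not a description of OpenAI's exact implementation.

```python
import math

def preference_loss(reward_chosen, reward_rejected):
    """Pairwise loss -log(sigmoid(r_chosen - r_rejected)).

    Small when the reward model scores the human-preferred response higher,
    large when it scores the rejected response higher.
    """
    diff = reward_chosen - reward_rejected
    # Numerically stable form of log(1 + exp(-diff))
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))

# Reward model agrees with the human ranking: low loss
print(preference_loss(reward_chosen=2.0, reward_rejected=0.0))
# Reward model disagrees with the human ranking: high loss
print(preference_loss(reward_chosen=0.0, reward_rejected=2.0))
```

Minimizing this loss over many ranked pairs pushes the reward model to score preferred responses higher. The language model is then tuned to produce responses that the reward model scores highly.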

Conclusion

In conclusion, the development of ChatGPT has been a significant milestone in the evolution of language models. From GPT-1 to ChatGPT, OpenAI has steadily pushed the boundaries of what is possible with natural language processing. ChatGPT generates coherent and contextually relevant responses and has shown promise in a wide range of applications, including customer support, content creation, and language understanding. As the technology continues to advance, we can expect even more sophisticated and human-like interactions with ChatGPT in the future.