ChatGPT, an advanced AI tool, has gained popularity in South Africa, but there are concerns about its safety and the risks it poses. Although it has been hailed as a technological breakthrough, its capabilities have raised questions about potential misuse by hackers and scammers.

- ChatGPT has the potential to be misused by cybercriminals for malicious activities such as spear phishing and generating spam emails.

- Human oversight is crucial when using ChatGPT, as it lacks the ability to exercise judgment and reason like a human.

- AI tools like ChatGPT pose a potential threat to academic integrity, as students may rely on them to produce work without critical thinking and research.

- Universities in South Africa need to address the impact and limitations of AI and chatbots in education to safeguard learning outcomes and academic integrity.

- Caution, critical awareness and sound cybersecurity measures are essential when using AI tools like ChatGPT, to ensure they are used responsibly.

Understanding ChatGPT and Its Capabilities

To understand the potential risks associated with ChatGPT, it is important to first grasp its capabilities and functionalities. ChatGPT is an advanced generative artificial intelligence (AI) tool that uses deep learning techniques to generate human-like text based on the input it receives. It has gained attention for its impressive ability to carry on natural conversations and mimic human language.

Developed by OpenAI and built on its GPT family of large language models, ChatGPT can answer queries quickly, offer suggestions, and assist with creative writing. It adapts to a wide range of conversational contexts and can sustain dynamic, multi-turn interactions with users. However, as with any powerful tool, there are risks involved.

In a recent demonstration by cybersecurity firm Kaspersky, ChatGPT was shown to be susceptible to misuse by hackers and scammers. The AI tool was used to gather advice on hacking techniques and generate malicious content such as spear phishing emails, spam emails, and programming code for malware. This highlights the potential security vulnerabilities of AI-powered chatbots and the need for robust protection measures.

While ChatGPT may possess impressive capabilities, it lacks the ability to exercise judgment and reason like a human. This makes human oversight crucial when utilizing the tool, especially in sensitive contexts where the risk of exploitation is higher. It is essential to establish trust and implement safeguards to prevent the misuse of AI-powered tools like ChatGPT.

To summarize, ChatGPT is a cutting-edge AI tool with the ability to generate human-like text and engage in natural conversations. However, its powerful capabilities also come with potential risks, including the misuse of AI by hackers and scammers. Human oversight and robust security measures are vital to ensure the responsible and safe use of ChatGPT and other similar AI tools.

Examining the Reputation of ChatGPT

To evaluate the safety of ChatGPT, it is crucial to examine its reputation and any incidents associated with its use. While ChatGPT has gained recognition as a groundbreaking AI tool, concerns have been raised about its potential misuse by hackers and scammers.

According to cybersecurity firm Kaspersky, ChatGPT can also be put to nefarious use. In a recent demonstration, the firm showed how the AI tool can be used to gather advice on hacking and to support malicious activities: it can generate spear phishing emails, spam emails, and even programming code for malware. It is essential to recognize the risks that come with such capabilities.

Despite ChatGPT’s impressive capabilities, it lacks the ability to exercise judgment and reason like a human. This limitation makes human oversight critical. While the AI tool can assist users in various tasks, it is essential to have human intervention to ensure its safe and ethical use.

Furthermore, the reputation of ChatGPT in the context of academic integrity also merits consideration. With students having access to AI tools like ChatGPT, there is a concern that they may rely too heavily on automated systems and produce academic work without engaging in critical thinking and research. This poses a threat to the authenticity and originality of academic output.

The ease of using AI tools like ChatGPT for academic purposes raises questions about the potential impact on learning outcomes and the ethical implications of relying heavily on machine-generated content. It is crucial for universities and educational institutions to address these concerns and develop strategies to ensure that AI tools are used responsibly and in a manner that upholds academic integrity.

| Reputation | Related concern or incident |
|---|---|
| Generally high, but concerns about misuse | Potential for malicious activities demonstrated by cybersecurity firm Kaspersky |
| Impressive capabilities | Generating spear phishing emails, spam emails, and programming code for malware |
| Lacks human-like judgment | Requires human oversight to ensure safe and ethical use |
| Potential threat to academic integrity | Students may rely on AI tools without critical thinking and research |

The Potential Misuse of ChatGPT by Hackers and Scammers

While ChatGPT offers numerous possibilities, it also opens the door to misuse by hackers and scammers, who can use its functionality for nefarious purposes. Cybersecurity firm Kaspersky recently demonstrated how AI tools like ChatGPT can be exploited to gather advice on hacking and to support malicious activities.

ChatGPT’s ability to generate human-like text makes it a valuable asset for cybercriminals. They can use it to write spear phishing emails that trick users into revealing sensitive information or clicking on malicious links. Because the generated text is fluent and can be tailored to its target, such emails can appear more authentic to unsuspecting victims and may be harder for traditional email filters to flag.

Moreover, scammers can employ ChatGPT to generate spam emails on a massive scale, inundating users with unwanted and potentially harmful content. These emails can contain phishing attempts, malware-infected attachments, or fraudulent schemes designed to defraud individuals or organizations.

To make matters worse, ChatGPT can also generate programming code for malware. This could help cybercriminals create malicious software that compromises computer systems, steals sensitive data, or causes other forms of damage. As AI continues to evolve, the risks posed by malicious actors using tools like ChatGPT are a growing concern.

Protecting against these threats requires a multi-faceted approach. While efforts are being made to improve the security measures around AI tools like ChatGPT, human oversight remains crucial. Despite its advanced capabilities, ChatGPT still lacks the ability to exercise human judgment and reason, making it susceptible to manipulation by malicious actors.

As individuals and organizations continue to utilize AI tools in various domains, it is essential to foster a culture of caution and critical awareness. This includes implementing robust cybersecurity measures, educating users about potential risks, and encouraging responsible AI usage. By staying vigilant and proactive, we can harness the power of AI while minimizing the risks associated with its misuse.

The Importance of Human Oversight in ChatGPT Interactions

Despite its capabilities, ChatGPT falls short in terms of exercising human-like judgment and reasoning, making human oversight an essential component in its interactions. While ChatGPT has been hailed as a technological breakthrough, the limitations of AI become evident when it comes to ensuring safety, security, and ethical use. Without human intervention, there is a risk of malicious activities and potential harm.

Cybersecurity firm Kaspersky has demonstrated the potential dangers of ChatGPT falling into the wrong hands. Using the tool, hackers can gather advice on hacking techniques and engage in malicious activities. ChatGPT can generate spear phishing emails, spam emails, and even programming code for creating malware. These capabilities highlight the need for stringent human oversight to prevent the misuse of ChatGPT and protect against cyber threats.

ChatGPT’s limitations also have implications for academic integrity. While it can provide assistance and generate content, it cannot replace critical thinking and thorough research. Students may exploit ChatGPT’s capabilities to produce academic work without the necessary intellectual rigor. This poses a significant threat to South African education, as it undermines the value of independent thinking and originality.

Universities and educational institutions in South Africa must address the impact of AI tools like ChatGPT on learning outcomes and academic integrity. They should foster critical thinking skills and encourage students to approach AI as a tool rather than a solution. By instilling a culture of responsible AI usage and promoting ethical practices, these institutions can mitigate the risks associated with AI and ensure that ChatGPT is used responsibly.

| Key Considerations for ChatGPT Interactions |
|---|
| 1. Implementing strong cybersecurity measures to protect against potential threats. |
| 2. Emphasizing the importance of human oversight to prevent malicious activities and misuse. |
| 3. Educating students on the limitations of AI and the importance of critical thinking in academic work. |
| 4. Encouraging responsible AI development and usage to ensure ethical and safe interactions. |

As AI continues to evolve, it is crucial for South Africa to be proactive in addressing the risks and implications associated with its use. By prioritizing human oversight, promoting responsible AI usage, and fostering critical thinking, South Africa can navigate the complex landscape of AI safety and protect against potential threats posed by tools like ChatGPT.

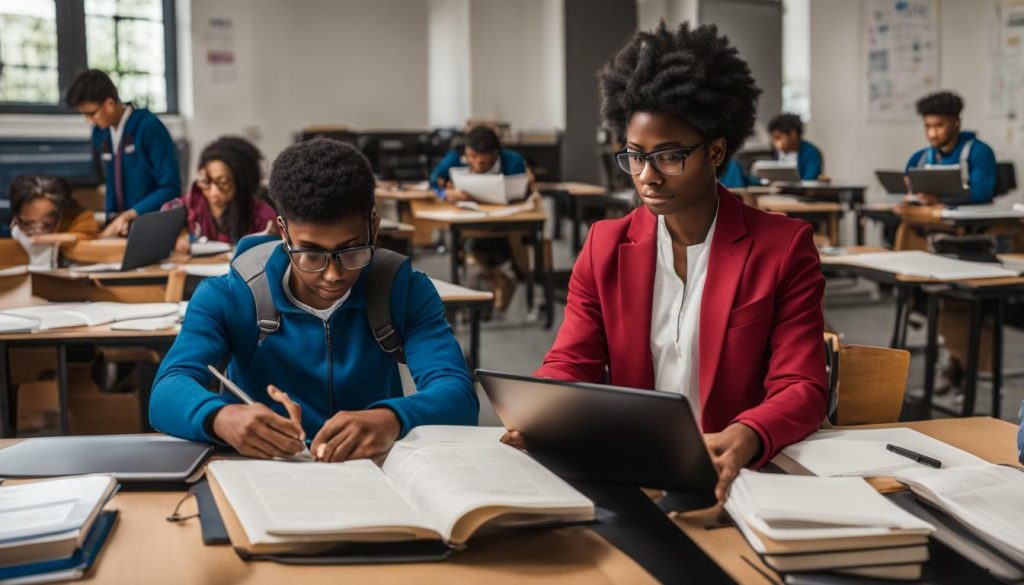

AI and Academic Integrity: The Threat to South African Education

The integration of AI tools like ChatGPT in the educational sector raises concerns about academic integrity, as students may rely on AI-generated content instead of developing their own critical thinking and research skills. While AI technology has the potential to enhance learning experiences, its misuse can undermine the fundamental principles of education. It is crucial for universities and educational institutions in South Africa to address the impact and limitations of AI, particularly regarding academic integrity.

AI-powered tools like ChatGPT can generate essays, assignments, and even entire research papers with remarkable speed and fluency. Students may be tempted to rely on these AI-generated texts, bypassing independent thinking, analysis, and research. This undermines the authenticity of their work and inhibits the development of skills essential for future success.

AI tools like ChatGPT should be seen as valuable aids rather than substitutes for human reasoning and critical thinking. Students must understand that effective learning involves active engagement, curiosity, and the ability to evaluate and analyze information independently.

Furthermore, the widespread use of AI tools can potentially lead to a devaluation of academic qualifications. If students can produce high-quality work effortlessly through AI, the significance of academic achievements and degrees may diminish, affecting the overall credibility and value of education in South Africa.

Educational institutions must adopt a proactive approach by integrating AI tools responsibly. This includes providing guidance and training to students on how to leverage AI tools effectively without compromising academic integrity. By combining the power of AI with human intelligence, universities can foster an educational environment that encourages intellectual growth, critical thinking, and independent research.

By addressing the potential threat to academic integrity posed by AI tools like ChatGPT, South African universities can ensure that education remains a transformative and empowering experience for students. It is crucial to strike a balance between technological advancements and the preservation of the core values of education, ultimately equipping students with the necessary skills to thrive in an increasingly AI-driven world.

Addressing the Impact and Limitations of AI in Education

To ensure a balanced integration of AI in education, universities must address the impact and limitations of AI tools like ChatGPT on learning outcomes and academic integrity. While AI technology offers exciting possibilities for enhancing the learning experience, it also poses challenges that need to be navigated carefully.

One of the key concerns is the potential threat to academic integrity. AI tools like ChatGPT can enable students to produce academic work without engaging in critical thinking and research. This raises questions about the authenticity of the work and the educational value it provides. Universities need to adopt measures to monitor and verify the use of AI tools, ensuring that students’ academic contributions are genuine and reflective of their own abilities.

Moreover, the limitations of AI’s ability to exercise judgment and reason highlight the necessity of human oversight. While ChatGPT can generate information and responses, it lacks the ethical and cognitive understanding that humans possess. It is essential for educators to provide guidance and supervision to ensure that AI tools are used responsibly and ethically.

| Benefits of AI in Education | Challenges in Integrating AI |
|---|---|
| 1. Personalized learning experiences. | 1. Ensuring academic integrity. |
| 2. Efficient administrative processes. | 2. Lack of judgment and reasoning abilities. |
| 3. Enhanced accessibility for students with disabilities. | 3. Ethical considerations in AI usage. |

By addressing these challenges, universities can harness the potential of AI while safeguarding the integrity of education. Implementing guidelines, policies, and training programs can help ensure that AI tools are used responsibly and in support of quality learning outcomes.

“The integration of AI in education brings both opportunities and challenges. While AI tools like ChatGPT can enhance efficiency and personalization, they also require careful oversight to maintain academic integrity and ethical usage. Universities should prioritize addressing the impact and limitations of AI to create a balanced and responsible learning environment.”

– Dr. Sarah Johnson, AI Education Specialist

The Need for Caution and Critical Awareness in Using AI Tools

As AI tools like ChatGPT become more prominent, it is crucial for users to exercise caution, maintain critical awareness, and implement cybersecurity measures to mitigate potential risks. While ChatGPT has been hailed as a technological breakthrough, there are concerns regarding its potential misuse by hackers and scammers. Research conducted by cybersecurity firm Kaspersky has shown that ChatGPT can be manipulated to gather advice on hacking and engage in malicious activities. This raises significant concerns about data security and the possible generation of spear phishing emails, spam emails, and even code for malware.

Despite its impressive capabilities, it is important to remember that ChatGPT lacks the ability to exercise judgment and reason like a human. This highlights the necessity for human oversight when interacting with AI tools. By having human intervention in place, we can ensure the safety and ethical use of ChatGPT, mitigating potential online threats and protecting sensitive data.

In addition to cybersecurity risks, there is also a potential threat to academic integrity. Students may be tempted to rely solely on AI tools like ChatGPT to produce academic work without engaging in critical thinking or conducting thorough research. This raises concerns about the development of original ideas and of critical analysis skills in education. Universities and educational institutions in South Africa must understand and address the effects and limitations of AI and chatbots in order to maintain the integrity of the learning process.

It is clear that the growing presence of AI tools like ChatGPT requires careful consideration and responsible usage. Implementing cybersecurity measures, promoting human oversight, and fostering critical awareness are all essential steps in mitigating risks associated with AI usage. By prioritizing the development and implementation of responsible AI practices, South Africa can ensure a secure and ethical AI future.

The Future of AI Safety and Regulation in South Africa

The future of AI safety and regulation in South Africa will play a significant role in ensuring that AI tools, including ChatGPT, are used safely and securely. As AI continues to advance and become more integrated into many aspects of society, it is crucial to establish comprehensive regulations and safety measures to mitigate potential risks and misuse.

One area of concern is the cybersecurity aspect of AI tools like ChatGPT. Cybersecurity firm Kaspersky recently demonstrated how ChatGPT can be exploited by hackers and scammers. It showcased how the tool can be used to gather advice on hacking and malicious activities, as well as generate spear phishing emails, spam emails, and even programming code for malware. This highlights the importance of implementing robust security measures to prevent the misuse of AI tools for malicious purposes.

In addition to cybersecurity, there is also a need for human oversight in interactions involving ChatGPT. While the tool has impressive capabilities, it still lacks the ability to exercise judgment and reason like a human. Human intervention is essential to ensure the safety and ethical use of ChatGPT, especially when it comes to sensitive matters such as customer support or handling personal information.

| Key Considerations for AI Safety and Regulation in South Africa |
|---|
| 1. Ethical Guidelines: Establish clear ethical guidelines for the development and use of AI tools. This includes outlining the responsibilities and accountability of developers and users regarding the potential risks and impacts associated with AI technologies. |
| 2. Comprehensive Risk Assessment: Conduct thorough risk assessments to identify potential vulnerabilities and risks associated with AI tools like ChatGPT. This will enable proactive measures to be implemented to address and mitigate these risks. |
| 3. Education and Awareness: Promote education and awareness about AI safety and regulation among the general public, organizations, and policymakers. This will ensure a better understanding of the potential risks and benefits of AI and facilitate informed decision-making. |
| 4. Collaboration and Partnerships: Foster collaboration and partnerships between government bodies, regulatory agencies, and AI researchers and developers. This will facilitate the development of effective regulations and the sharing of best practices to enhance AI safety and security. |

The future of AI safety and regulation in South Africa should prioritize the safe and secure use of AI tools like ChatGPT while preserving the benefits they offer. By implementing comprehensive regulations, fostering responsible usage, and promoting awareness, South Africa can harness the power of AI while protecting its citizens and infrastructure.

Responsible AI development and usage play a pivotal role in addressing the safety and security concerns associated with AI tools like ChatGPT. While ChatGPT offers immense potential and opportunities, it is crucial to recognize the risks that come with its capabilities. Cybersecurity firm Kaspersky recently demonstrated how ChatGPT can be misused by hackers and scammers to gather advice on hacking techniques and engage in malicious activities.

ChatGPT has the ability to generate spear phishing emails, spam emails, and even programming code for malware. This highlights the importance of implementing robust security measures and risk assessments when using AI tools like ChatGPT. Despite its advanced features, ChatGPT lacks the ability to exercise judgment and reason like a human, making human oversight essential to ensure safe and ethical usage.

Furthermore, responsible AI usage becomes imperative when considering the potential impact on academic integrity. Students may be tempted to rely solely on AI tools like ChatGPT to produce academic work without engaging in critical thinking and conducting thorough research. Universities and educational institutions need to address these issues, understanding the limitations and effects of AI tools on learning outcomes and academic authenticity.

By fostering responsible AI development and usage, we can mitigate the risks and promote a secure environment for utilizing AI tools like ChatGPT. It is essential to exercise caution, implement cybersecurity measures, and maintain critical awareness of the potential dangers associated with AI. Through responsible practices, we can harness the power of AI while safeguarding against potential threats to privacy, security, and academic integrity.

In conclusion, while ChatGPT offers many benefits, its potential risks, such as being exploited for malicious activities, require careful consideration and proactive measures to ensure its safe and responsible use. Cybersecurity firm Kaspersky has demonstrated how ChatGPT can be used to gather advice on hacking and to support activities such as spear phishing, generating spam emails, and creating malware. These capabilities pose a significant cybersecurity threat, particularly in South Africa, where online threats and cybercrime are prevalent.

Despite its advanced capabilities, ChatGPT still lacks the ability to exercise judgment and reason like a human. Human oversight becomes crucial when using this AI tool to ensure its ethical and safe usage. The limitations of AI tools like ChatGPT highlight the importance of understanding the risks and implementing proper cybersecurity measures to protect against potential online threats.

Furthermore, there is growing concern over the impact of AI, including ChatGPT, on academic integrity. Students may use AI tools to produce academic work without engaging in critical thinking and research, compromising the quality and credibility of their education. Universities must understand and address the effects and limitations of AI and chatbots in education, ensuring that students are equipped with the necessary critical thinking skills and knowledge.

In South Africa, as AI tools like ChatGPT become more prevalent, there is a need for caution and critical awareness in their usage. It is essential for individuals, organizations, and educational institutions to comprehend the potential risks and implement cybersecurity measures to protect against malicious activities. Responsible AI development, usage, and regulation are crucial in ensuring the safety, security, and ethical use of AI tools like ChatGPT in South Africa’s evolving technological landscape.

FAQ

Is ChatGPT considered malware?

No, ChatGPT is not considered malware itself. It is a generative AI tool. However, there are potential risks and concerns associated with its use that need to be considered.

What are the risks associated with using ChatGPT?

The risks associated with using ChatGPT include its potential misuse by hackers and scammers, who can use it to generate spear phishing emails, spam emails, and programming code for malware. It also lacks the ability to exercise judgment and reason, making human oversight essential.

Can ChatGPT be used for academic dishonesty?

Yes, there is a potential threat to academic integrity as students may use AI tools like ChatGPT to produce academic work without engaging in critical thinking and research.

How can universities address the impact of AI tools like ChatGPT in education?

Universities need to understand the effects and limitations of AI and chatbots in education. This includes implementing measures to promote critical thinking, research skills, and ethical AI usage.

What should users be cautious of when using AI tools like ChatGPT?

Users should exercise caution and critical awareness when using AI tools like ChatGPT. It is important to understand the potential risks, implement cybersecurity measures, and foster responsible AI usage.

Source Links

- https://www.derebus.org.za/chatgpt-exploring-the-risks-of-unregulated-ai-in-south-africa/

- https://www.moneyweb.co.za/news/ai/is-the-chatgpt-app-a-gift-to-hackers-and-scammers/

- http://www.news.uct.ac.za/features/teachingandlearning/-article/2023-06-20-ai-chatbots-and-chatgpt-threat-to-knowledge-work-or-a-dancing-bear