Overview

Introduction to ChatGPT

ChatGPT is an advanced language model developed by OpenAI. It is designed to generate human-like text responses based on given prompts. The model has been trained on a vast amount of data from the internet, allowing it to produce coherent and contextually relevant responses. However, while ChatGPT has shown impressive capabilities, it also raises ethical considerations that need to be addressed. This article explores the dangers associated with using ChatGPT and suggests safeguards to mitigate potential risks.

Benefits of using ChatGPT

ChatGPT offers numerous benefits for various applications. It provides a quick and efficient way to generate text, making it useful for tasks such as content creation, brainstorming, and generating ideas. Additionally, ChatGPT can be a valuable tool for language learning, as it can generate example sentences and provide explanations. Moreover, ChatGPT can assist in customer support by providing automated responses to common queries. Overall, ChatGPT has the potential to enhance productivity and creativity in various domains.

Ethical concerns with ChatGPT

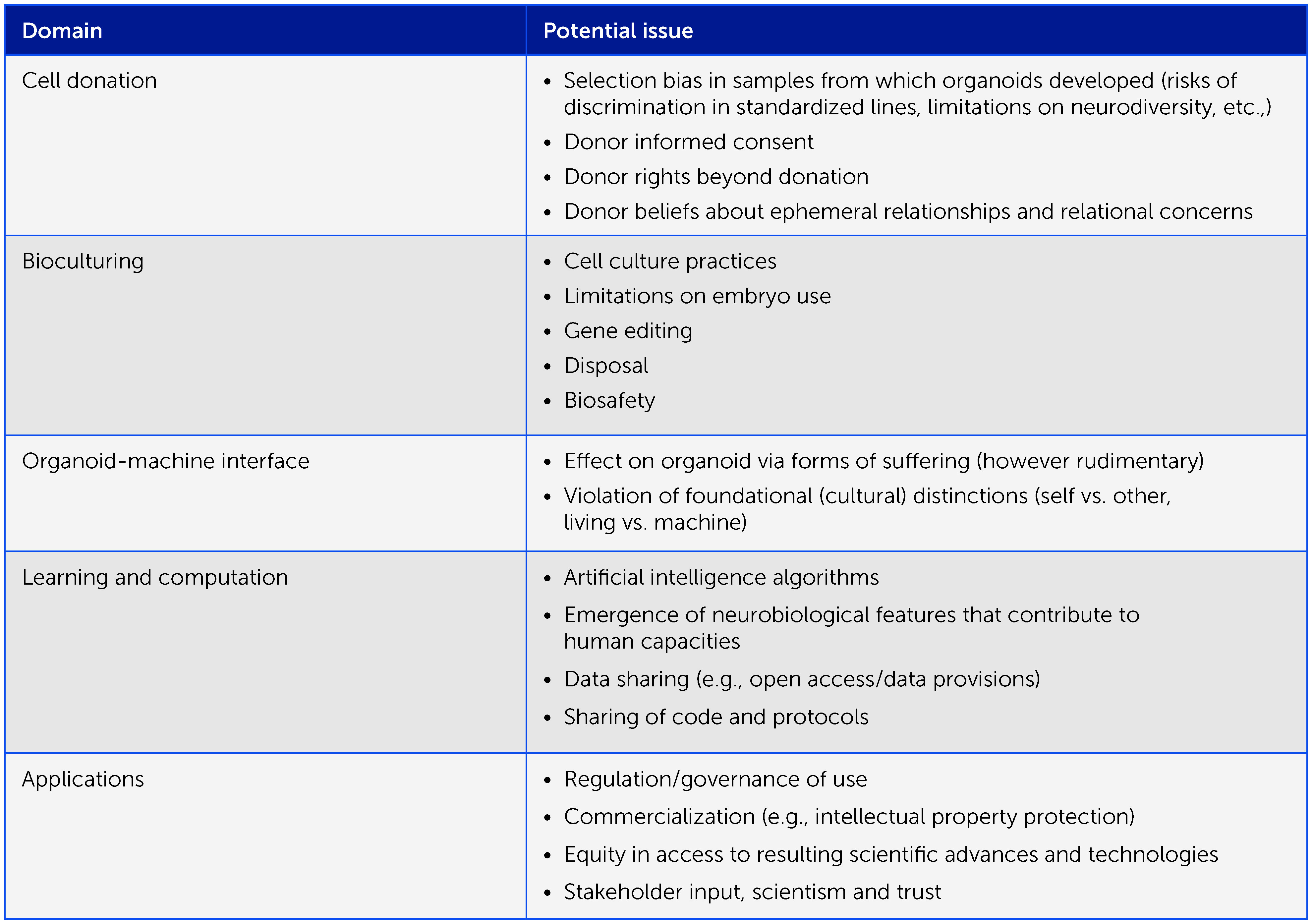

ChatGPT, like any AI language model, raises important ethical considerations that need to be addressed. One of the primary concerns is the potential for bias in the responses generated by the model. As ChatGPT learns from a vast amount of text data, it can inadvertently replicate and amplify existing biases present in the training data. This can result in discriminatory or offensive responses. Another ethical concern is the issue of misinformation. ChatGPT may generate responses that are factually incorrect or misleading, which can have serious consequences in areas such as healthcare or legal advice. Additionally, there is a concern about privacy. ChatGPT interacts with users and collects data during conversations, raising questions about data security and how this information is used. It is crucial to address these ethical concerns and implement safeguards to ensure responsible and beneficial use of ChatGPT.

Data Privacy and Security

Collection and storage of user data

When using ChatGPT, it is important to consider the ethical implications of the collection and storage of user data. OpenAI states that it takes user privacy seriously and has implemented safeguards to protect sensitive information. Data collected during interactions with ChatGPT may be used to improve the model’s performance, and it should be stored securely. Organizations and developers who build on ChatGPT must also be transparent about their own data practices and obtain user consent. By being mindful of these considerations, we can ensure that the use of ChatGPT is responsible and respects user privacy.

Protecting user privacy

When using ChatGPT, it is crucial to consider the ethical implications and protect user privacy. As an AI language model, ChatGPT has the potential to generate human-like text that may contain sensitive information. To safeguard user privacy, organizations should implement robust security measures, such as data encryption and access controls. Additionally, clear guidelines should be established to ensure that user data is handled responsibly and is not shared without explicit consent. Regular audits and monitoring can help identify and address any potential privacy breaches. By prioritizing user privacy, we can mitigate the risks associated with using ChatGPT and build trust with users.
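One way to reduce the amount of sensitive information that reaches the model in the first place is to redact obvious identifiers from a prompt before it is sent. The following is a minimal sketch, not a complete privacy solution: the function name `redact_pii` and both regular expressions are assumptions made for illustration, and they cover only email addresses and phone-like numbers.

```python
import re

# Illustrative patterns only (assumptions, not a vetted PII detector).
# Real deployments need much broader coverage: names, addresses, IDs, etc.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


if __name__ == "__main__":
    prompt = "Contact me at jane.doe@example.com or +1 (555) 010-2030."
    # Redact before the prompt leaves the organization's systems.
    print(redact_pii(prompt))
```

Pattern-based redaction misses a great deal, so it is best treated as one layer alongside the encryption, access controls, and audits described above.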

Ensuring data security

When using ChatGPT, it is crucial to protect your company’s intellectual property and sensitive data. Several measures help ensure data security. First, implement strong access controls and authentication mechanisms to prevent unauthorized access to the system. Second, conduct regular security audits and vulnerability assessments to identify and address potential weaknesses. Third, use encryption to protect data both at rest and in transit. Fourth, perform regular data backups to prevent data loss in case of unforeseen events. Lastly, run staff training and awareness programs to educate employees about best practices for data security.
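As a rough illustration of the access-control measure, the sketch below checks a caller's role against an allowed set of actions and records every decision so that later audits have something to inspect. The role names, action names, and the `AccessController` class are all hypothetical; a real system would rely on its existing identity and authorization infrastructure.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set

# Hypothetical roles and actions for stored conversation logs.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "admin": {"read_logs", "delete_logs", "export_logs"},
    "analyst": {"read_logs"},
}


@dataclass
class AccessController:
    """Grants or denies actions by role and keeps an audit trail."""

    audit_trail: List[str] = field(default_factory=list)

    def is_allowed(self, role: str, action: str) -> bool:
        # Unknown roles get an empty permission set, so they are denied.
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        outcome = "granted" if allowed else "denied"
        self.audit_trail.append(f"{role}:{action}:{outcome}")
        return allowed


if __name__ == "__main__":
    controller = AccessController()
    print(controller.is_allowed("analyst", "read_logs"))
    print(controller.is_allowed("analyst", "delete_logs"))
    print(controller.audit_trail)
```

Denying by default and logging both granted and denied requests keeps the check simple to reason about and gives security audits a record to review.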

Bias and Fairness

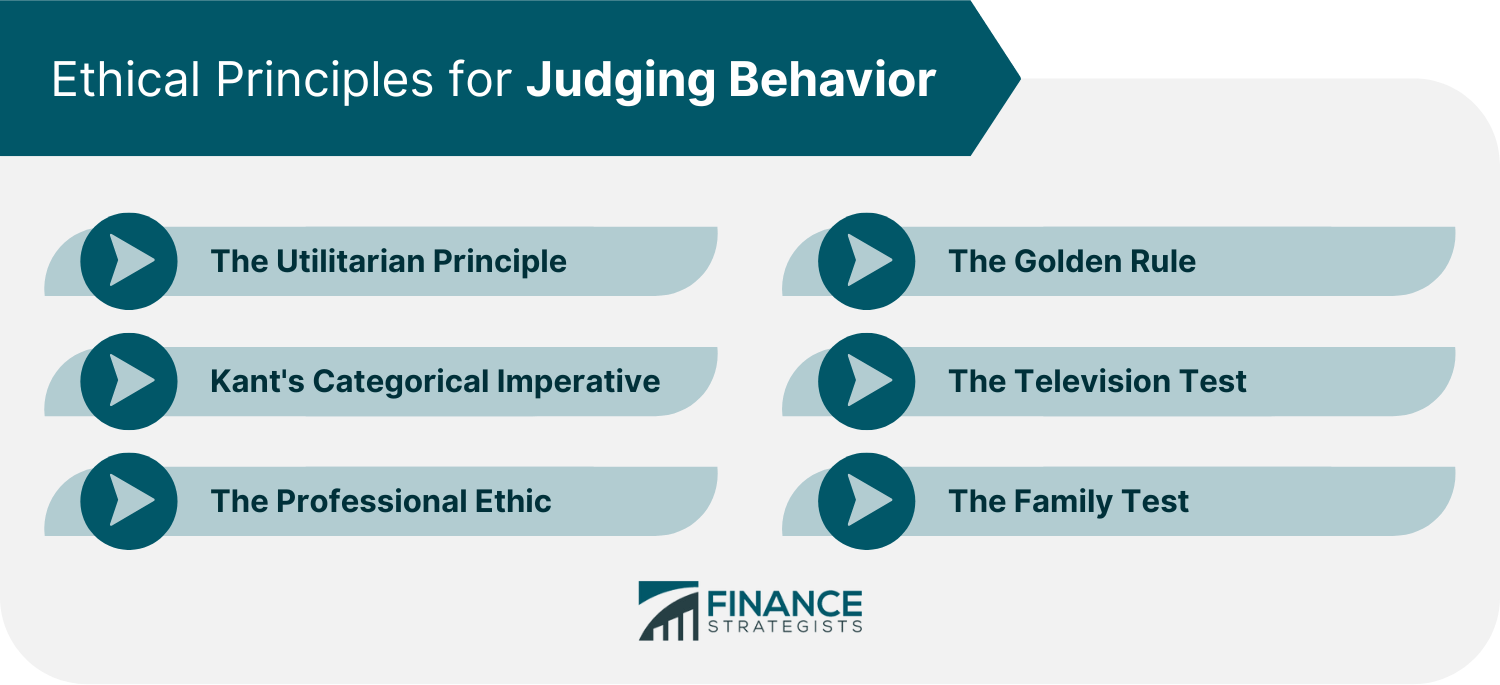

Identifying and mitigating bias

When using ChatGPT, it is crucial to take a systematic approach to identifying and mitigating bias. This involves implementing safeguards and ethical considerations throughout the development and deployment process. One effective strategy is to create diverse and inclusive training datasets that represent a wide range of perspectives and experiences. Additionally, regular audits and evaluations should be conducted to detect and address any biases that arise. It is also important to involve a diverse group of stakeholders in the decision-making process to ensure that different perspectives are taken into account. By taking these measures, we can minimize the risks of bias and promote fairness and inclusivity in the use of ChatGPT.
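A regular audit can be as simple as sending the model the same prompt with only a group term changed and comparing how the responses score. The sketch below shows that idea under stated assumptions: `generate` stands in for whatever function calls the model, `score` is any numeric measure of a response (sentiment, length, presence of certain words), and the stub functions in the demo are invented purely for illustration.

```python
from typing import Callable, Dict, List


def counterfactual_prompts(template: str, groups: List[str]) -> Dict[str, str]:
    """Fill the {group} slot in the template once per group."""
    return {g: template.format(group=g) for g in groups}


def audit_disparity(
    generate: Callable[[str], str],
    score: Callable[[str], float],
    template: str,
    groups: List[str],
) -> Dict[str, float]:
    """Score the model's response to each group-substituted prompt."""
    prompts = counterfactual_prompts(template, groups)
    return {g: score(generate(p)) for g, p in prompts.items()}


def max_gap(scores: Dict[str, float]) -> float:
    """Largest score difference between any two groups."""
    values = list(scores.values())
    return max(values) - min(values)


if __name__ == "__main__":
    # Stub model that treats one group differently (illustration only).
    def stub_generate(prompt: str) -> str:
        if "younger" in prompt:
            return "They are a capable engineer."
        return "They are an engineer."

    def stub_score(response: str) -> float:
        return 1.0 if "capable" in response else 0.0

    scores = audit_disparity(
        stub_generate,
        stub_score,
        "Describe a typical {group} engineer.",
        ["younger", "older"],
    )
    print(scores, max_gap(scores))
```

A large gap does not prove bias on its own, but it flags prompts that deserve a closer human look.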

Ensuring fairness in responses

To ensure fairness in responses, it is crucial to consider the ethical implications of ChatGPT. Generative AI can also be misused for malicious purposes, so exploring what this technology offers calls for caution: understanding the potential dangers and implementing safeguards to prevent harm. One important step is to establish clear guidelines and rules for the AI system that define what is acceptable and what is not. Additionally, continuous monitoring and auditing of the system’s outputs can help identify and address any biases or harmful content that may arise. By proactively addressing these ethical considerations, we can strive for a more responsible and inclusive use of ChatGPT.

Addressing discriminatory language

To ensure the responsible use of ChatGPT, it is crucial to address the issue of discriminatory language. Discriminatory language can be harmful and perpetuate biases and stereotypes. Understanding the potential dangers that discriminatory language poses is the first step towards mitigating its impact. One way to address this issue is by implementing safeguards such as pre-training the model on diverse and inclusive datasets. Additionally, ongoing monitoring and evaluation can help identify and rectify any instances of biased or discriminatory responses. By taking these measures, we can promote a more inclusive and ethical use of ChatGPT.

Accountability and Transparency

Responsibility of developers

Developers who create and deploy ChatGPT have a crucial role in ensuring its ethical use. They must consider the potential impact of the AI system on society and take steps to mitigate any harm it may cause. This responsibility includes implementing safeguards and guidelines that promote fairness, transparency, and accountability. Developers should also actively engage with the wider community to gather feedback and address concerns. By prioritizing ethical considerations, developers can contribute to the responsible development and deployment of AI technologies.

Providing explanations for decisions

When using ChatGPT, it is crucial to provide explanations for the outputs it produces. This is especially important in sensitive contexts, such as South Africa, where ethical considerations are paramount. Clear justifications for the AI’s outputs help users understand the reasoning behind the system’s choices. Additionally, transparency can help mitigate potential biases and errors that may arise. Providing explanations fosters trust and accountability, enabling users to assess the AI’s reliability and make informed decisions based on the generated content.

Transparency in model limitations

When using ChatGPT, it is important to consider the limitations of the model to ensure ethical use. One key limitation is that its natural language processing is not fully accurate or reliable. While ChatGPT has made significant advances in understanding and generating human-like text, it is still prone to errors and biases. It is crucial to be aware of these limitations and take appropriate measures to mitigate the resulting risks. These measures can include providing clear disclaimers to users about the capabilities and limitations of the model, as well as implementing robust review processes to identify and address any biases or inaccuracies that may arise.

Conclusion

Summary of ethical considerations

When using ChatGPT, there are several ethical considerations that should be taken into account. It is important to recognize the potential dangers and implement safeguards to mitigate any negative impacts. One of the main concerns is the potential for bias in the model’s responses, which can perpetuate harmful stereotypes or misinformation. Another consideration is the privacy of user data, as conversations with ChatGPT can include sensitive information. To address these concerns, transparency is crucial: users should be aware that they are interacting with an AI system. Additionally, human oversight is essential to review and moderate the system’s output. By taking these measures, we can promote the responsible use of ChatGPT and reduce ethical risks.

Importance of ongoing evaluation

Ongoing evaluation is crucial when using ChatGPT to ensure its reliability and ethical use. It is important to regularly assess the reliability of the model’s responses by monitoring its performance and identifying any biases or inaccuracies. This can be done through continuous testing and feedback from users. Additionally, it is essential to establish safeguards to mitigate potential risks and ensure responsible deployment of ChatGPT. These safeguards may include implementing human-in-the-loop systems, providing clear guidelines to human reviewers, and regularly updating and improving the model based on user feedback.
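A human-in-the-loop system can be sketched as a gate that holds certain responses for a reviewer instead of releasing them directly. The example below is a deliberately simple illustration: the `ReviewQueue` class and the keyword list are assumptions, and the keywords were chosen only because healthcare and legal advice are the high-stakes areas this article mentions. A production system would likely use a trained classifier rather than keyword matching.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical trigger terms for high-stakes topics (healthcare, legal).
SENSITIVE_TERMS = ("diagnosis", "dosage", "lawsuit", "legal advice")


@dataclass
class ReviewQueue:
    """Holds responses on sensitive topics until a human reviews them."""

    pending: List[str] = field(default_factory=list)

    def route(self, response: str) -> str:
        lowered = response.lower()
        if any(term in lowered for term in SENSITIVE_TERMS):
            # Keep the response out of the user's view until reviewed.
            self.pending.append(response)
            return "held_for_review"
        return "released"


if __name__ == "__main__":
    queue = ReviewQueue()
    print(queue.route("Here are three blog title ideas."))
    print(queue.route("The usual dosage is two tablets."))
    print(len(queue.pending))
```

Reviewer decisions on held items can then feed back into the guidelines and model updates described above.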

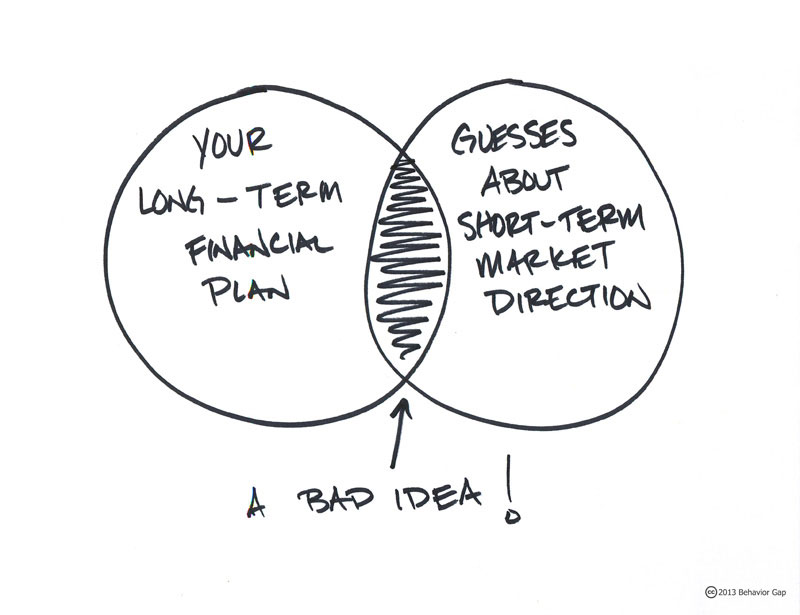

Balancing benefits and risks

When considering the use of ChatGPT, it is crucial to strike a balance between the benefits it offers and the risks it poses. On the one hand, ChatGPT has the potential to transform various industries by providing efficient and personalized services. On the other hand, its use carries ethical risks: biased or discriminatory responses, the spread of misinformation, and the manipulation of individuals through persuasive techniques. To mitigate these risks, safeguards such as rigorous testing, ongoing monitoring, and user education must be implemented.