Generative AI, including GPT AI, has seen significant advancements in recent years and is predicted to be widely used by enterprises in the near future. In this article, we will explore the basics of GPT AI, its key components, and how it works. We will also discuss its applications in natural language processing, content generation, chatbots and virtual assistants, and language translation. Additionally, we will examine the benefits and limitations of GPT AI, as well as the ethical considerations surrounding its use.

Key Takeaways

- Generative AI, including GPT AI, has revolutionized the field of artificial intelligence.

- GPT AI is a foundation model built using neural network techniques and trained on large datasets.

- GPT AI has applications in natural language processing, content generation, chatbots and virtual assistants, and language translation.

- The benefits of GPT AI include its ability to generate new content, answer unusual queries, and extract key points from large volumes of data.

- However, GPT AI also has limitations, such as potential bias, privacy concerns, and the risk of misinformation and manipulation.

What is GPT AI?

The basics of GPT AI

GPT AI, which stands for Generative Pre-trained Transformer AI, is a powerful language model developed by OpenAI. It is built using neural network techniques and trained on large datasets to perform a wide range of downstream tasks. GPT AI has revolutionized the field of natural language processing and content generation.

The first model in the series, GPT-1, established the approach that later versions such as GPT-3 and GPT-4 build on. Each of these foundation models is trained on extensive data and can generate new content based on the patterns it has learned.

GPT AI has been constantly evolving and improving since its inception in 2018. OpenAI has been dedicated to training and refining its foundation models to enhance their accuracy and capabilities. The advancements in GPT AI have paved the way for the development of various GenAI tools that are widely used for personal and business purposes.

Some of the most recent developments in the market include OpenAI’s GPT-4 and Google’s Gemini models. These models further expand generative AI capabilities and offer new possibilities for content generation.

How GPT AI works

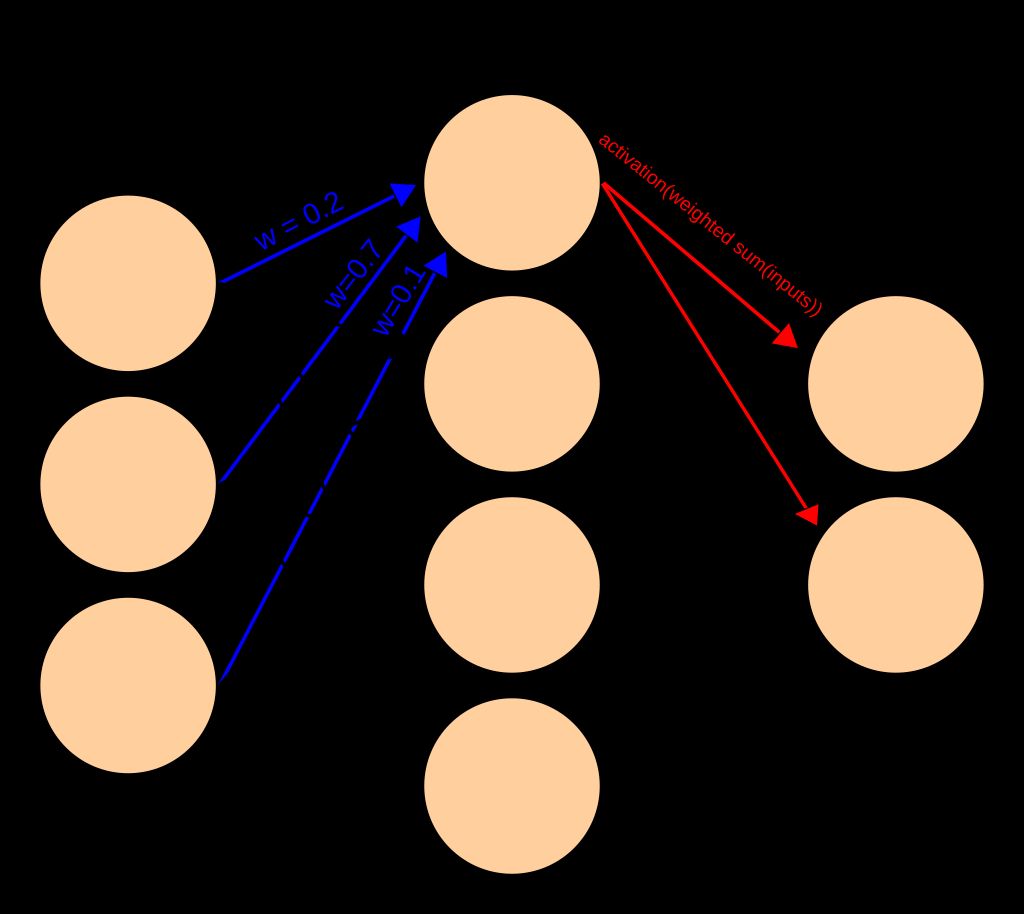

GPT AI is a language model that uses deep learning to generate human-like text. It is based on the Transformer architecture, which allows it to process large amounts of text and track how the words in a passage relate to each other.

Training happens in two stages. In pre-training, the model is fed a vast corpus of text from the internet and learns to predict the next word in a sentence from the words that precede it. Repeating this across the corpus teaches it grammar, context, and common language patterns, which is what lets it generate coherent text. In fine-tuning, the pre-trained model is trained further on a specific task, such as text completion, summarization, or translation, to make it more accurate and useful for that application.
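To make next-word prediction concrete, here is a minimal Python sketch. It is not how GPT is implemented: it swaps the Transformer for simple counts of which word follows which (a bigram model), and the three-sentence `toy_corpus` is a made-up stand-in for web-scale training text. The function names `train_bigram_model` and `predict_next` are illustrative only.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count, for every word, which words follow it in the corpus."""
    follow_counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Pair each word with the word that comes right after it.
        for prev_word, next_word in zip(words, words[1:]):
            follow_counts[prev_word][next_word] += 1
    return follow_counts


def predict_next(follow_counts, prev_word):
    """Return the most frequent follower of prev_word, or None if unseen."""
    followers = follow_counts.get(prev_word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus (an assumption for illustration, not real training data).
toy_corpus = [
    "the model predicts the next word",
    "the model learns language patterns",
    "each next word depends on context",
]

model = train_bigram_model(toy_corpus)
print(predict_next(model, "the"))    # model
print(predict_next(model, "next"))   # word
print(predict_next(model, "zebra"))  # None
```

A real GPT model conditions on the whole preceding context rather than only the previous word, and it learns its predictions by adjusting neural network weights instead of counting, but the training signal is the same: guess the next word, then compare against the text.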

Key components of GPT AI

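GPT AI is built from several key components that work together:

- Transformers: the neural network architecture the model is built on.

- Pre-training: learning general language patterns by predicting the next word across a large text corpus.

- Fine-tuning: further training on a narrower dataset to adapt the model to a specific task.

- Attention mechanism: the part of the Transformer that weighs how relevant each earlier word is when processing the current one.

- Decoding: the step that turns the model's predicted scores into the words of the output.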
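Decoding, the last component named above, can be sketched in a few lines of Python. This is a simplified illustration, not OpenAI's implementation: the vocabulary and scores are invented, and `softmax`, `greedy_decode`, and `sample_decode` are hypothetical helper names. It contrasts two common strategies, always picking the top-scoring word versus sampling with a temperature setting.

```python
import math
import random


def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; a lower temperature sharpens them."""
    scaled = [s / temperature for s in scores]
    max_scaled = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - max_scaled) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def greedy_decode(vocab, scores):
    """Always pick the highest-scoring word."""
    best_index = max(range(len(scores)), key=lambda i: scores[i])
    return vocab[best_index]


def sample_decode(vocab, scores, temperature=1.0, rng=None):
    """Draw a word at random in proportion to its softmax probability."""
    if rng is None:
        rng = random.Random()
    probs = softmax(scores, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]


# Hypothetical scores a model might assign to three candidate next words.
vocab = ["cat", "dog", "car"]
scores = [2.0, 1.0, 0.1]

print(greedy_decode(vocab, scores))  # cat
print([round(p, 3) for p in softmax(scores, temperature=1.0)])
print([round(p, 3) for p in softmax(scores, temperature=0.5)])
print(sample_decode(vocab, scores, temperature=0.8, rng=random.Random(0)))
```

Greedy decoding is deterministic, while sampling trades some predictability for variety. Lowering the temperature moves sampling closer to the greedy choice.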

Applications of GPT AI

Natural language processing

Natural Language Processing (NLP) is a branch of artificial intelligence that focuses on the interaction between computers and human language. It involves the ability of a computer system to understand, interpret, and generate human language in a way that is meaningful and useful. NLP techniques enable computers to analyze and extract information from large volumes of text data, making it possible to automate tasks such as sentiment analysis, language translation, and text summarization. NLP has a wide range of applications across various industries, including customer service, healthcare, and finance.

Content generation

Content generation is one of the key applications of GPT AI: given an input prompt, it can generate new, original content. Generative AI as a whole relies on neural network techniques such as Transformers, GANs (generative adversarial networks), and VAEs (variational autoencoders). These techniques, loosely inspired by the human brain, learn patterns from training data and use them to produce new data. GANs were introduced in 2014 and Transformers in 2017; GPT models are built on the Transformer. GPT AI is used mainly to generate text, while related generative models produce other content such as images and even music.

Chatbots and virtual assistants

Chatbots and virtual assistants are two popular applications of GPT AI. Chatbots are computer programs designed to simulate human conversation, providing automated responses to user queries. They can be used for customer support, information retrieval, and even entertainment. Virtual assistants, on the other hand, are AI-powered programs that perform tasks and provide personalized assistance to users. They can schedule appointments, set reminders, answer questions, and more. Both chatbots and virtual assistants can use GPT AI to understand queries and generate human-like responses.

Language translation

Language translation is another key application of GPT AI. Building on advances in natural language processing, GPT AI can translate text from one language to another, often with high accuracy. This has improved communication and accessibility across cultures and languages. It can be applied to documents, websites, and conversations, although output quality can vary with the language pair and subject matter.

Benefits and limitations of GPT AI

Advantages of GPT AI

GPT AI offers several advantages over traditional AI models:

- Superior output: GPT AI models are trained on larger datasets, allowing them to generate detailed and high-quality output.

- Wide range of applications: GPT AI can be used in fields such as research, analytics, and customer support, thanks to its capability to extract and summarize key points from large volumes of data.

- Unusual query handling: Unlike conventional chatbots, GPT AI can easily answer unusual queries from customers.

- Synthetic data generation: GPT AI can be useful for generating synthetic data while ensuring data integrity.

What sets GPT AI apart from traditional AI models is primarily the scale of its training data and the computing power used to train it, both of which contribute to its stronger performance.

Challenges and limitations of GPT AI

While GPT AI has shown great potential in various applications, there are several challenges and limitations that need to be addressed. One of the main challenges is ensuring the quality of input data, as the output of GPT AI is directly influenced by the data it is trained on. Additionally, there are concerns about the potential misuse of GPT AI technology, such as the creation of deepfakes and cybercrime. The lack of well-defined regulations for the protection of sensitive and private data is also a limitation that needs to be addressed. It is important to carefully consider the ethical implications of using GPT AI and to implement responsible AI practices.

Ethical considerations of GPT AI

Bias and fairness

When it comes to GPT AI, one important consideration is the potential for bias and fairness issues. GPT AI models are trained on large datasets, and if these datasets contain biased or unfair information, the AI system may learn and reproduce those biases. This can lead to biased outputs and discriminatory behavior.

To address this, it is crucial to carefully curate and review the training data to ensure it is diverse, representative, and free from biases. Additionally, ongoing monitoring and evaluation of the AI system’s outputs are necessary to identify and mitigate any biases that may arise.

| Challenges of Bias in GPT AI |
|---|
| Reproducing biased information |
| Discriminatory behavior |

Steps to reduce bias:

- Curate diverse and representative training data

- Monitor and evaluate AI system outputs

- Mitigate biases that may arise

It is essential to prioritize fairness and inclusivity in the development and deployment of GPT AI systems.

Privacy concerns

Privacy is a significant consideration in the use of GPT AI. Because GPT AI is trained on a large corpus of data, it is crucial to ensure that the dataset is free from private and Not Safe for Work (NSFW) content. Misuse of the technology, for example for deepfakes and cybercrime, is a real concern. The laws governing the protection of sensitive and private data are also not well defined, which highlights the need for clear regulations to guide the adoption of GPT AI at a global level. Organizations must make a significant effort to collect high-quality input data to minimize the risk of biased outputs and ensure responsible use of GPT AI.

Misinformation and manipulation

Misinformation and manipulation are significant concerns in the use of GPT AI. The potential for using GPT AI for unfair practices, such as deepfakes and cybercrime, is very high. As with privacy, the training dataset should be free from Not Safe for Work (NSFW) and private content, and the lack of well-defined data protection laws highlights the need for regulations to guide the responsible adoption of this technology at a global level.

The quality of GPT AI's output depends directly on the quality of its input data. It is therefore essential to make a significant effort to collect high-quality data in order to reduce hallucinations (plausible-sounding but incorrect output) and biased outputs. Operating responsibly is crucial when using GPT AI to mitigate the risks associated with misinformation and manipulation.

Stay tuned for our next article on how to adopt Generative AI and identify use cases for your organization!

Conclusion

In conclusion, Generative AI, particularly the GPT models, has emerged as a powerful technology with the potential to change how many industries work. With its ability to generate new content, respond to requests in a human-like way, and adapt to a wide range of downstream tasks, Generative AI is poised to shape the future of AI. As more enterprises adopt Generative AI, we can expect to see advancements in research, analytics, customer service, and data generation. Generative AI is not just another buzzword, but a technology that is here to stay.

Frequently Asked Questions

What is GPT AI?

GPT AI, or Generative Pre-trained Transformer AI, is an artificial intelligence model developed by OpenAI. It is trained on a large dataset and can generate human-like text based on the input it receives.

How does GPT AI work?

GPT AI works by using a transformer architecture, which allows it to process and generate text. It uses self-attention mechanisms to understand the context of the input and generate coherent and relevant output.
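As a rough illustration of self-attention, the Python sketch below computes scaled dot-product attention with a causal mask, so each position only looks at itself and earlier positions. It makes simplifying assumptions: the two-dimensional `tokens` vectors are invented, and the same vectors are reused as queries, keys, and values, whereas a real Transformer derives each from learned projections. The function name `causal_self_attention` is hypothetical.

```python
import math


def softmax(values):
    """Convert scores to weights that are positive and sum to 1."""
    max_value = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - max_value) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention; position i attends to positions 0..i."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for i, query in enumerate(queries):
        # Score the current query against itself and earlier positions only.
        scores = [dot(query, keys[j]) / math.sqrt(d_k) for j in range(i + 1)]
        weights = softmax(scores)
        # The output is a weighted average of the visible value vectors.
        output = [
            sum(w * values[j][d] for j, w in enumerate(weights))
            for d in range(len(values[0]))
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 2-dimensional vectors standing in for three input tokens.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_self_attention(tokens, tokens, tokens)

for position, row in enumerate(weights):
    print(position, [round(w, 3) for w in row])
```

Each printed row shows how much one position draws on each visible position. The first row has a single weight because the first token has nothing earlier to attend to.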

What are the key components of GPT AI?

The key components of GPT AI include the transformer architecture, which enables the model to process and generate text, and the pre-training and fine-tuning process, which involves training the model on a large dataset and then fine-tuning it for specific tasks.

What are the applications of GPT AI?

GPT AI has various applications, including natural language processing, content generation, chatbots and virtual assistants, and language translation. It can be used in industries such as marketing, customer service, and content creation.

What are the benefits of GPT AI?

Some of the benefits of GPT AI include its ability to generate human-like text, its versatility in various tasks, and its potential to automate and streamline processes in different industries.

What are the limitations of GPT AI?

GPT AI has some limitations, such as the potential for biased or inaccurate outputs, the need for large amounts of training data, and no built-in access to real-time information beyond its training data.