Overview

Introduction to Bias in ChatGPT

ChatGPT is a powerful language model trained on a diverse range of internet text. However, like any AI system, it is not immune to biases present in its training data. Bias here means systematic favoritism or prejudice toward certain groups or individuals. In ChatGPT, bias can show up as biased responses or as the reinforcement of biases already present in a conversation. Addressing and mitigating these biases is important for fair and inclusive interactions with the system. This article outlines approaches for identifying and reducing bias in ChatGPT.

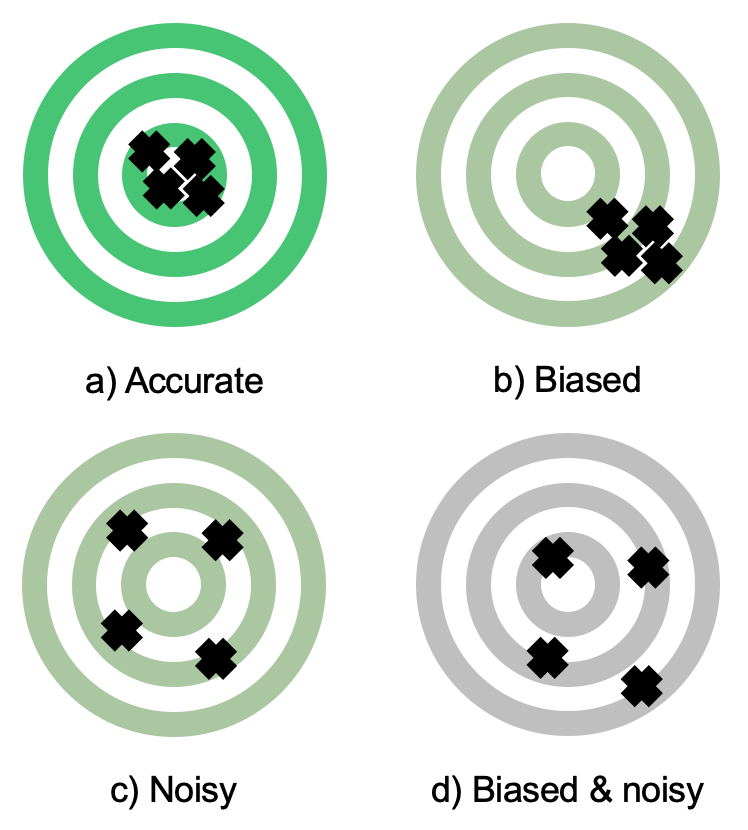

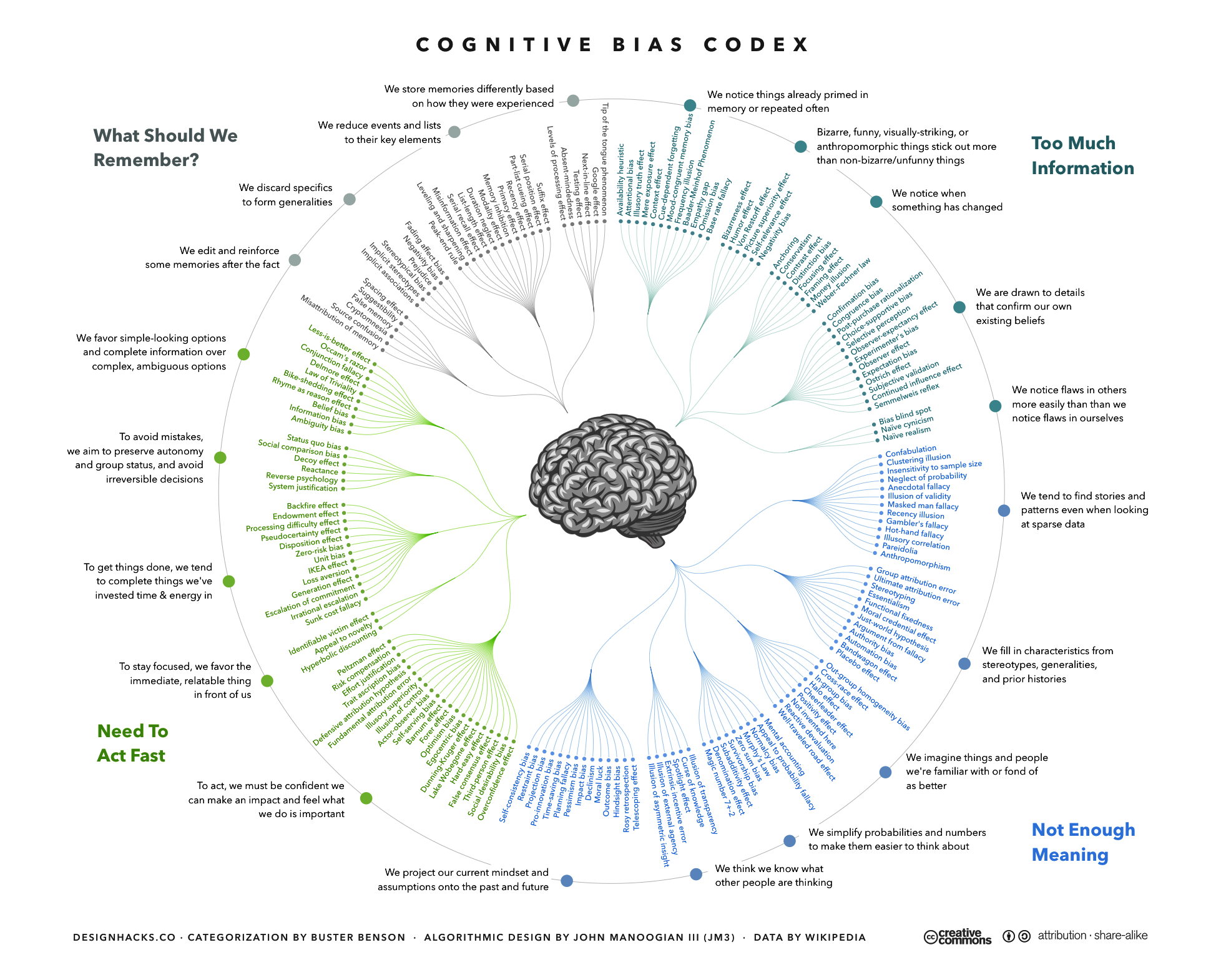

Types of Bias in ChatGPT

ChatGPT generates responses to user inputs, and like any language model, it is susceptible to bias. These biases take several forms, including cultural, gender, and political bias. Cultural bias is a tendency to favor certain cultural perspectives or norms. Gender bias occurs when the model reproduces stereotypes or prejudices about particular genders. Political bias is an inclination to express opinions aligned with a particular political ideology. Addressing these biases is crucial for fair and inclusive conversations with ChatGPT.

Impact of Bias in ChatGPT

Bias in AI systems such as ChatGPT has far-reaching consequences, because these systems can perpetuate and amplify biases that already exist in society. Writing assistance is one area where the impact can be significant: if the model favors a particular writing style or viewpoint, it can crowd out diverse perspectives and limit the exploration of new ideas. Addressing bias in ChatGPT is therefore crucial for fair and inclusive conversations.

Detecting Bias in ChatGPT

Data Collection and Annotation

Data collection and annotation are crucial steps in training AI models like ChatGPT, and both must be handled carefully to keep bias out of the model. Addressing bias here requires a comprehensive approach: diverse data sources, careful annotation guidelines, and continuous evaluation. One effective strategy is to build a diverse, representative dataset that includes examples from different demographic groups and perspectives. Annotation guidelines should be designed to minimize bias and produce consistent labels across annotators. Regular evaluation of the annotated data can then catch and correct biases as they arise. Together, these steps improve the fairness and inclusivity of models like ChatGPT.
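
As a minimal sketch of two of these checks, the Python below measures how each demographic group is represented in a dataset and computes Cohen's kappa, a standard chance-corrected measure of agreement between two annotators. The record format (a dict with a `group` key), the function names, and the 20% threshold in the example are hypothetical choices for illustration, not part of any actual ChatGPT pipeline.

```python
from collections import Counter
from typing import Dict, List, Sequence


def group_shares(examples: List[dict], key: str = "group") -> Dict[str, float]:
    """Fraction of examples that belong to each group label."""
    counts = Counter(ex[key] for ex in examples)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def underrepresented(examples: List[dict], min_share: float, key: str = "group") -> List[str]:
    """Groups whose share of the dataset falls below min_share (a hypothetical threshold)."""
    return sorted(g for g, share in group_shares(examples, key).items() if share < min_share)


def cohens_kappa(labels_a: Sequence[str], labels_b: Sequence[str]) -> float:
    """Agreement between two annotators, corrected for agreement expected by chance."""
    if not labels_a or len(labels_a) != len(labels_b):
        raise ValueError("need two non-empty label sequences of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label for every item
    return (observed - expected) / (1 - expected)


data = [{"group": "A"}] * 6 + [{"group": "B"}] * 3 + [{"group": "C"}]
print(underrepresented(data, min_share=0.2))  # ['C']
print(cohens_kappa(["biased", "ok", "ok", "biased"],
                   ["biased", "ok", "biased", "biased"]))  # 0.5
```

A low kappa suggests the annotation guidelines leave too much room for individual judgment, which is one place bias can enter the labels.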

Bias Detection Techniques

Bias detection techniques help ensure the fairness and integrity of AI models by identifying biases in the training data or in the model's output. One common approach is to analyze conversations closely, examining their context and underlying assumptions to surface potential biases. This involves looking at the language used, how questions are framed, and how different perspectives are represented. Applying such techniques systematically helps reduce bias and promote more inclusive and equitable conversations.
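
One concrete way to examine framing and representation is counterfactual probing: send the model prompts that differ only in a demographic term and compare the responses. The sketch below assumes a stand-in `fake_model` function in place of a real model call and a tiny hypothetical word list as the scoring function; in practice both would be replaced with an actual model client and a more careful measure of the responses.

```python
from typing import Callable, Dict, List

# Hypothetical prompt template and group terms, chosen only for illustration.
TEMPLATE = "Describe a typical day for a {group} software engineer."
GROUPS = ["male", "female", "nonbinary"]

# Hypothetical lexicon: words that describe hands-on technical work.
COMPETENCE_WORDS = {"design", "debug", "lead", "architect"}


def counterfactual_prompts(template: str, groups: List[str]) -> Dict[str, str]:
    """Build prompts that are identical except for the demographic term."""
    return {g: template.format(group=g) for g in groups}


def competence_score(text: str) -> float:
    """Toy score: number of words in the response that appear in COMPETENCE_WORDS."""
    words = (w.strip(".,!?").lower() for w in text.split())
    return float(sum(w in COMPETENCE_WORDS for w in words))


def probe(generate: Callable[[str], str],
          score: Callable[[str], float],
          template: str, groups: List[str]) -> Dict[str, float]:
    """Score the model's response to each counterfactual prompt."""
    prompts = counterfactual_prompts(template, groups)
    return {g: score(generate(p)) for g, p in prompts.items()}


def max_gap(scores: Dict[str, float]) -> float:
    """Largest score difference between any two groups; 0 means identical treatment."""
    return max(scores.values()) - min(scores.values())


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call, deliberately skewed so the probe finds a gap."""
    if "female" in prompt:
        return "She attends meetings and takes notes."
    return "They design systems, debug code, and lead reviews."


scores = probe(fake_model, competence_score, TEMPLATE, GROUPS)
print(scores)           # {'male': 3.0, 'female': 0.0, 'nonbinary': 3.0}
print(max_gap(scores))  # 3.0
```

Keeping the prompt otherwise identical is the key design choice: any difference in the responses can then be attributed to the swapped term rather than to other wording.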

Evaluating Bias Detection Models

Evaluating bias detection models is a crucial step in addressing bias in AI. These models identify and flag potential biases in AI systems, helping developers and researchers understand where bias comes from and take appropriate action to mitigate it. Because a detector can itself miss biased content or flag harmless content, it must be evaluated for effectiveness, accuracy, and reliability before its results are trusted. As AI plays a growing role in people's lives, this evaluation helps ensure that systems are fair and respect the diversity of their users.
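
A bias detector can be evaluated like any classifier, by comparing its flags with human judgments. This minimal sketch computes precision (how many flagged items were really biased), recall (how many biased items were flagged), and their harmonic mean, F1; the boolean-list input format is an assumption for illustration.

```python
from typing import Dict, List


def detection_metrics(predicted: List[bool], actual: List[bool]) -> Dict[str, float]:
    """Precision, recall and F1 of a bias detector against human labels.

    predicted[i] is True if the detector flagged example i as biased;
    actual[i] is True if human reviewers judged example i to be biased.
    """
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    tp = sum(p and a for p, a in zip(predicted, actual))        # correctly flagged
    fp = sum(p and not a for p, a in zip(predicted, actual))    # false alarms
    fn = sum(a and not p for p, a in zip(predicted, actual))    # missed bias
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


metrics = detection_metrics([True, True, False, False, True],
                            [True, False, True, False, True])
print({k: round(v, 3) for k, v in metrics.items()})
# {'precision': 0.667, 'recall': 0.667, 'f1': 0.667}
```

Which metric to prioritize is a judgment call: a detector used to filter training data may favor recall, while one that routes outputs to manual review may favor precision.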

Mitigating Bias in ChatGPT

Pre-training and Fine-tuning Strategies

Bias in ChatGPT can be addressed at both the pre-training and the fine-tuning stage. During pre-training, drawing on a diverse range of data limits the influence of any single source's biases, and natural language processing techniques can be used to detect and mitigate bias in the model's responses. During fine-tuning, training the model on prompts and examples that explicitly address bias can further reduce bias in its output. These strategies need continuous evaluation and updating to keep the model's responses fair.
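
One widely discussed way to build such fine-tuning examples is counterfactual data augmentation (CDA): for each training sentence, add a copy with gendered words swapped, so the model sees both versions equally often. The sketch below is a simplified illustration of CDA, not a description of how ChatGPT was actually fine-tuned; the word-pair list is hypothetical and far smaller than a real one would be.

```python
import re
from typing import Dict, List

# Hypothetical, deliberately small word-pair list. Pronouns such as "her" and "his"
# are left out because their swap ("him" vs "his", "her" vs "hers") depends on grammar.
PAIRS = [("he", "she"), ("man", "woman"), ("men", "women"), ("father", "mother"),
         ("son", "daughter"), ("boy", "girl"), ("king", "queen")]

SWAP: Dict[str, str] = {}
for a, b in PAIRS:
    SWAP[a], SWAP[b] = b, a

# Match whole words only, longest alternatives first, ignoring case.
WORD_RE = re.compile(r"\b(" + "|".join(sorted(SWAP, key=len, reverse=True)) + r")\b",
                     re.IGNORECASE)


def swap_gender(text: str) -> str:
    """Replace each listed gendered word with its counterpart, keeping initial capitals."""
    def repl(match: re.Match) -> str:
        word = match.group(0)
        swapped = SWAP[word.lower()]
        return swapped.capitalize() if word[0].isupper() else swapped
    return WORD_RE.sub(repl, text)


def augment(examples: List[str]) -> List[str]:
    """Original examples plus a gender-swapped copy of each one that changes."""
    augmented = list(examples)
    for text in examples:
        swapped = swap_gender(text)
        if swapped != text:
            augmented.append(swapped)
    return augmented


print(swap_gender("He is a father and a good man."))
# She is a mother and a good woman.
print(augment(["He is a doctor.", "The sky is blue."]))
# ['He is a doctor.', 'The sky is blue.', 'She is a doctor.']
```

Sentences with no listed words are left alone, so the augmented set grows only where gendered language actually appears.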

Debiasing Techniques

Several debiasing techniques have been proposed to address bias in AI systems. They aim to reduce the impact of biased data and promote fairer outcomes. Common techniques include:

- Pre-processing: This technique involves modifying the training data to remove biased patterns and ensure a more diverse and representative dataset.

- In-processing: In this technique, the bias is addressed during the training process itself. Various algorithms and approaches are used to mitigate bias and promote fairness.

- Post-processing: After the model has been trained, post-processing techniques are applied to adjust the model’s predictions and mitigate any remaining bias.

By employing these debiasing techniques, researchers and developers can work towards creating AI systems that are more equitable and less prone to bias.
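
As an example of the pre-processing family, the sketch below implements reweighing, a technique from the fairness literature that assigns each training example the weight P(g) * P(y) / P(g, y), so that group g and label y become statistically independent in the weighted data. It assumes a simple tabular setting with one group attribute and a binary label; the helper `weighted_positive_rate` is a hypothetical name used only to check the result.

```python
from collections import Counter
from typing import Hashable, List, Sequence


def reweigh(groups: Sequence[Hashable], labels: Sequence[Hashable]) -> List[float]:
    """Weight each example by P(g) * P(y) / P(g, y).

    In the weighted data, group membership g and label y are statistically
    independent, so no group is over- or under-associated with any label.
    """
    n = len(groups)
    if n == 0 or n != len(labels):
        raise ValueError("groups and labels must be non-empty and of equal length")
    count_g = Counter(groups)
    count_y = Counter(labels)
    count_gy = Counter(zip(groups, labels))
    # P(g) * P(y) / P(g, y) simplifies to count_g * count_y / (n * count_gy).
    return [count_g[g] * count_y[y] / (n * count_gy[(g, y)])
            for g, y in zip(groups, labels)]


def weighted_positive_rate(groups, labels, weights, group) -> float:
    """Share of a group's total weight that falls on positive (label 1) examples."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    return positive / total


groups = ["a", "a", "a", "b", "b", "b"]
labels = [1, 1, 0, 1, 0, 0]   # group "a" is positive 2/3 of the time, "b" only 1/3
weights = reweigh(groups, labels)
print(weights)  # [0.75, 0.75, 1.5, 1.5, 0.75, 0.75]
print(weighted_positive_rate(groups, labels, weights, "a"),
      weighted_positive_rate(groups, labels, weights, "b"))  # 0.5 0.5
```

The weights can then be passed to any training procedure that accepts per-example weights; the data itself is left unchanged.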

Ethical Considerations

When developing AI systems like ChatGPT, addressing bias is crucial for fairness and inclusivity. The impact of AI can be far-reaching, and if bias is not properly managed, these systems can perpetuate and amplify existing biases. One way to mitigate bias is to use robust, diverse training data that represents a wide range of perspectives and experiences. Ongoing monitoring and evaluation of the system's outputs can then help identify and correct biases as they arise. It is also important to involve diverse stakeholders in development and decision-making so that the approach is comprehensive and inclusive.
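
Ongoing monitoring can be as simple as tracking, batch by batch, how often responses concerning each group are flagged as biased, and raising an alert when the rates drift apart. The sketch below assumes logged (group, flagged) pairs from some review process; the record format and the 0.05 threshold are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def flag_rates(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Per-group share of logged responses that reviewers flagged as biased.

    Each record is a (group, flagged) pair, e.g. the group a prompt referred to
    and whether the response to it was flagged.
    """
    totals: Dict[str, int] = defaultdict(int)
    flagged: Dict[str, int] = defaultdict(int)
    for group, is_flagged in records:
        totals[group] += 1
        flagged[group] += int(is_flagged)
    return {g: flagged[g] / totals[g] for g in totals}


def needs_review(records: Iterable[Tuple[str, bool]], max_gap: float = 0.05) -> bool:
    """True if flag rates differ between groups by more than max_gap (hypothetical threshold)."""
    rates = flag_rates(records)
    return bool(rates) and max(rates.values()) - min(rates.values()) > max_gap


batch = [("group_a", True), ("group_a", False), ("group_b", False), ("group_b", False)]
print(flag_rates(batch))    # {'group_a': 0.5, 'group_b': 0.0}
print(needs_review(batch))  # True
```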

Conclusion

Summary of Findings

Research on ChatGPT has produced several important findings. Chief among them is the effect of bias on the system's responses: ChatGPT can generate outputs that reflect biases present in its training data. This bias takes several forms, including gender, racial, and ideological bias. Bias has been an ongoing concern since the system was first released. These findings highlight the need to address bias in ChatGPT so that its interactions are fair and unbiased.

Future Directions

Several future directions could help improve ChatGPT and address potential biases. One important area is understanding and mitigating bias in responses to the questions users ask most often, through careful analysis of the training data and new techniques for producing fair responses. Ongoing research and collaboration with experts in ethics and fairness can help identify and address biases as they arise. User feedback and engagement can also play a crucial role in iteratively making the system more inclusive and less biased.

Importance of Addressing Bias in ChatGPT

Addressing bias in ChatGPT is crucial for fairness and inclusivity in AI-powered conversations. AI models such as ChatGPT can inadvertently perpetuate biases present in their training data. By addressing these biases, we can build AI systems that serve all users more equitably.