The Evolution of ChatGPT

From GPT-2 to ChatGPT

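The development of ChatGPT marks a significant milestone in the evolution of AI-powered assistants. ChatGPT descends from OpenAI's GPT series of language models. GPT-2 (2019) showed that a large transformer trained to predict the next token could produce fluent text, and GPT-3 (2020) scaled the same approach up dramatically. ChatGPT, released in November 2022, was fine-tuned from a model in the GPT-3.5 series to hold conversations. All of these models share the same underlying architecture, a transformer built on attention mechanisms. What distinguishes ChatGPT is mainly its scale and its additional training for dialogue, which make it better at following instructions and at keeping track of context across a conversation. These capabilities are general-purpose rather than tied to one domain, and they are changing the way many people interact with AI assistants.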

Improvements in Language Understanding

With ChatGPT, language understanding improved noticeably over earlier models. The model was pretrained on a very large and diverse body of text, which lets it respond to queries on a wide range of topics. It was then fine-tuned on conversational data so that its answers follow the user's instructions more closely. Its transformer architecture, whose attention mechanisms let each word be interpreted in light of the surrounding text, helps it capture context and produce coherent, meaningful responses. Together, these factors make ChatGPT a more capable and versatile assistant than its predecessors.

Enhancing Contextual Understanding

Several techniques contribute to ChatGPT's contextual understanding. The transformer architecture lets the model capture long-range dependencies, so that a word can be interpreted in light of text that appeared much earlier in the input. Within the transformer, attention mechanisms let the model weight the most relevant parts of the input when producing each token. In a conversation, earlier turns are part of that input, which is how the model keeps track of what has already been said, up to the limit of its context window. Another important aspect is the handling of ambiguity and uncertainty. ChatGPT can respond to an ambiguous query by asking a clarifying question or by offering several possible interpretations, although OpenAI has noted that it often guesses what the user intended instead. These behaviors help the model give useful answers to unclear requests, but they do not guarantee accurate responses.
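One concrete mechanism behind conversational context is simple. In chat systems built on GPT-style models, the conversation so far is passed back to the model as input on every turn, and anything that no longer fits in the model's fixed-size context window is not seen. The sketch below shows one hypothetical way to trim a conversation to a token budget by keeping the most recent messages. The function names `build_context` and `approx_token_count`, and the word count used in place of a real tokenizer, are assumptions for illustration; OpenAI has not published how ChatGPT itself truncates conversations.

```python
from typing import Callable

Message = tuple[str, str]  # (role, text)

def approx_token_count(text: str) -> int:
    # Hypothetical stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())

def build_context(messages: list[Message], max_tokens: int,
                  count_tokens: Callable[[str], int] = approx_token_count) -> list[Message]:
    """Keep the most recent messages whose combined token count fits within max_tokens."""
    kept: list[Message] = []
    used = 0
    for role, text in reversed(messages):  # walk from newest to oldest
        cost = count_tokens(text)
        if used + cost > max_tokens:
            break  # this message and everything older than it is dropped
        kept.append((role, text))
        used += cost
    return list(reversed(kept))  # restore chronological order

conversation = [
    ("user", "What is the capital of France?"),
    ("assistant", "The capital of France is Paris."),
    ("user", "And its population?"),
]
print(build_context(conversation, max_tokens=10))
```

With `max_tokens=10`, the oldest message is dropped, but the assistant's reply mentioning France survives, so "its" in the last question can still be resolved. Dropping the oldest turns first is only one design choice; a system could also summarize or pin older messages.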

Training and Fine-Tuning ChatGPT

Data Collection and Preprocessing

Data collection and preprocessing are crucial steps in training ChatGPT. The model needs a large amount of diverse, high-quality text to learn from. For pretraining, this is a broad corpus drawn from sources such as publicly available web pages and books. Before training, the raw text is preprocessed: low-quality and irrelevant content is filtered out, noise such as leftover markup is cleaned up, duplicates are removed, and, where feasible, personal information is removed. The data used to adapt the model for dialogue is collected differently. According to OpenAI, human AI trainers wrote example conversations in which they played both the user and the assistant, and they also ranked alternative model responses by quality. Together, these datasets serve as the foundation for training the model.
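The cleaning steps described above can be sketched in a few lines. This is a minimal illustration, assuming the input is a plain list of text documents. It redacts e-mail addresses and phone numbers with two simple regular expressions, collapses whitespace, drops very short documents, and removes exact duplicates. The patterns, the `[EMAIL]`/`[PHONE]` placeholders, and the `min_words` threshold are hypothetical choices; production pipelines use far more thorough quality filtering and PII detection.

```python
import re

# Hypothetical patterns for two common kinds of personal data (illustration only).
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def clean_text(text: str) -> str:
    """Redact e-mail addresses and phone numbers, then collapse runs of whitespace."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return " ".join(text.split())

def preprocess(docs: list[str], min_words: int = 3) -> list[str]:
    """Clean each document, drop very short ones, and remove exact duplicates."""
    seen: set[str] = set()
    out: list[str] = []
    for doc in docs:
        cleaned = clean_text(doc)
        if len(cleaned.split()) < min_words or cleaned in seen:
            continue
        seen.add(cleaned)
        out.append(cleaned)
    return out

raw = [
    "Contact me at jane.doe@example.com   or +1 (555) 123-4567 please",
    "Contact me at jane.doe@example.com or +1 (555) 123-4567 please",
    "ok",
]
print(preprocess(raw))
```

The second document becomes identical to the first once whitespace is normalized, so deduplication happens after cleaning rather than before.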

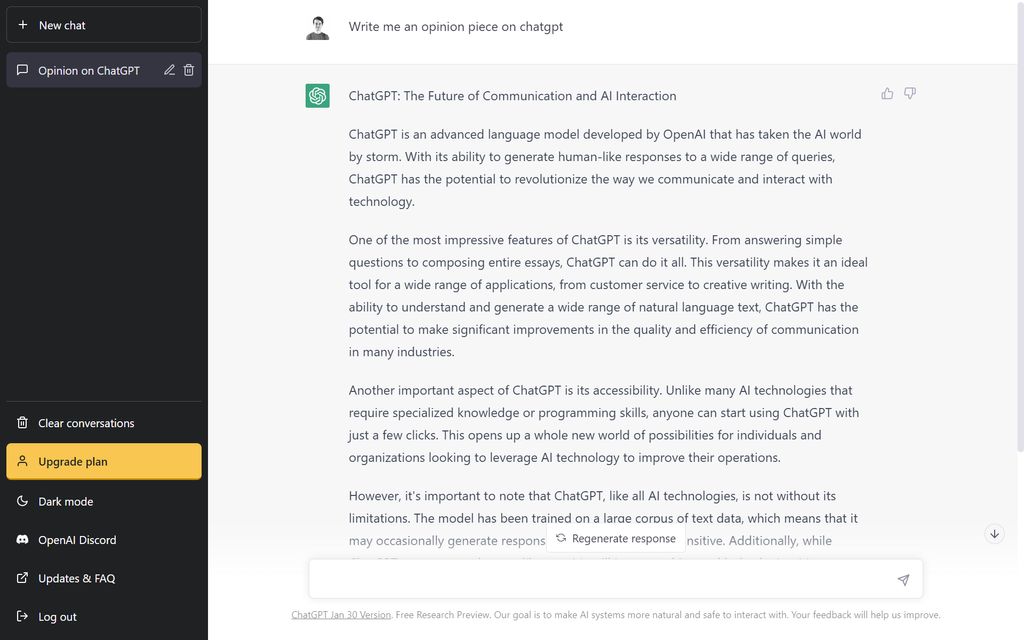

Training the Language Model

During pretraining, the language model is exposed to a vast amount of text, such as books, articles, and web pages, and learns the patterns and relationships it contains. Its training objective is to predict the next token (a word or piece of a word) given all of the tokens that precede it. After each prediction, the error is measured with a loss function, backpropagation computes how much each of the model's internal parameters contributed to that error, and an optimizer adjusts the parameters to reduce it. Repeating this over an enormous number of tokens requires substantial computational resources and time. Once trained, the model can generate text by repeatedly predicting the next token, which lets it produce coherent, contextually relevant continuations of the input it receives.
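To make the next-token objective and the gradient-based update concrete, here is a toy bigram model in pure Python. It is a deliberate simplification: instead of a transformer it uses a table of logits indexed by the previous token, so the gradient of the cross-entropy loss with respect to the logits (predicted probabilities minus the one-hot target) can be written out directly rather than computed by backpropagation through many layers. The function name `train_bigram`, the learning rate, and the example sentence are hypothetical.

```python
import math

def softmax(logits: list[float]) -> list[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train_bigram(tokens: list[str], epochs: int = 200, lr: float = 0.5):
    """Toy next-token model: learn logits W[prev][next] by gradient descent on cross-entropy."""
    vocab = sorted(set(tokens))
    idx = {tok: i for i, tok in enumerate(vocab)}
    V = len(vocab)
    W = [[0.0] * V for _ in range(V)]  # all-zero logits: uniform predictions at the start
    pairs = [(idx[a], idx[b]) for a, b in zip(tokens, tokens[1:])]  # (previous, next) examples
    losses = []
    for _ in range(epochs):
        grads = [[0.0] * V for _ in range(V)]
        total_loss = 0.0
        for prev, nxt in pairs:
            probs = softmax(W[prev])
            total_loss -= math.log(probs[nxt])  # cross-entropy for the true next token
            for j in range(V):
                # d(loss)/d(logit_j) = p_j - 1[j == nxt]
                grads[prev][j] += probs[j] - (1.0 if j == nxt else 0.0)
        for i in range(V):
            for j in range(V):
                W[i][j] -= lr * grads[i][j] / len(pairs)  # gradient-descent update
        losses.append(total_loss / len(pairs))  # average loss before this epoch's update
    return vocab, W, losses

tokens = "the cat sat on the mat the cat ate".split()
vocab, W, losses = train_bigram(tokens)
print(f"average loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
row = W[vocab.index("the")]
print("most likely after 'the':", vocab[row.index(max(row))])
```

The first recorded loss is ln 6 ≈ 1.792, because all six vocabulary words start out equally likely. Training lowers it, and "cat" ends up as the most likely word after "the", matching the two-to-one count in the sentence.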

Fine-Tuning for Specific Use Cases

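After pretraining, the model is fine-tuned to specialize its behavior. According to OpenAI, ChatGPT itself was fine-tuned for dialogue in two stages: supervised fine-tuning on conversations written by human trainers, followed by reinforcement learning from human feedback (RLHF), in which a reward model trained on human rankings of responses guides further optimization with Proximal Policy Optimization (PPO). The same idea can be applied to specific use cases by training on domain-specific data and optimizing for the desired behavior. For example, a model could be fine-tuned on finance material to answer questions about investments, budgeting, and market trends more reliably, although its answers would still need checking before being relied on as financial advice. Fine-tuning of this kind helps a model generate contextually relevant responses in specialized areas.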
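The ranking step of RLHF can be illustrated with the pairwise loss described in OpenAI's InstructGPT work: given a preferred response and a rejected one, the reward model is trained to minimize -log(sigmoid(r_chosen - r_rejected)). This sketch swaps the real reward model (a transformer that scores whole responses) for a hypothetical linear model over hand-made feature vectors, so the gradient fits on one line. The features, the comparison data, and the function names are all assumptions, and the subsequent PPO stage is not shown.

```python
import math

def sigmoid(x: float) -> float:
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def pairwise_reward_loss(r_chosen: float, r_rejected: float) -> float:
    """-log(sigmoid(r_chosen - r_rejected)), computed without overflow."""
    diff = r_chosen - r_rejected
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))

def dot(w: list[float], x: list[float]) -> float:
    return sum(wi * xi for wi, xi in zip(w, x))

def train_reward_model(comparisons, n_features: int, epochs: int = 500, lr: float = 0.1) -> list[float]:
    """Fit a hypothetical linear reward r(x) = w . x on (chosen, rejected) feature pairs."""
    w = [0.0] * n_features
    for _ in range(epochs):
        for chosen, rejected in comparisons:
            diff = dot(w, chosen) - dot(w, rejected)
            g = -sigmoid(-diff)  # d(loss)/d(diff) = -(1 - sigmoid(diff))
            for k in range(n_features):
                w[k] -= lr * g * (chosen[k] - rejected[k])
    return w

# Hypothetical features per response: [follows_instructions, verbosity]
comparisons = [([1.0, 0.2], [0.0, 0.9]), ([0.8, 0.5], [0.1, 0.4])]
w = train_reward_model(comparisons, n_features=2)
for chosen, rejected in comparisons:
    print(dot(w, chosen) > dot(w, rejected), round(pairwise_reward_loss(dot(w, chosen), dot(w, rejected)), 4))
```

The loss depends only on the difference between the two rewards, so the reward model learns a relative ordering of responses rather than absolute scores.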

The Inner Workings of ChatGPT

Architecture and Model Components

ChatGPT is built on a decoder-only transformer: a stack of layers, each combining a self-attention mechanism with a feed-forward network, that turns the input tokens into a probability distribution over the next token. The transformer is what lets the model capture long-range dependencies and contextual information. Self-attention weighs how relevant each earlier token is to the one being processed, which helps the model interpret an ambiguous word or phrase from its surroundings. The base model does not consult a separate, continuously updated knowledge base. Its factual knowledge is stored implicitly in its parameters, learned during training, and is therefore limited to what its training data covered up to a cutoff date. A response is produced one token at a time, with each newly generated token appended to the input before the next one is predicted.
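ChatGPT generates a response one token at a time, and that generation loop can be sketched independently of the model's internals. In this minimal example, a hypothetical lookup table, `TOY_TABLE`, stands in for the transformer and returns next-token probabilities based only on the last token, whereas a real model conditions on the entire context. The loop uses greedy decoding (always taking the most probable token) for simplicity; many deployed chat models sample from the distribution instead. The `<start>` and `<end>` markers are assumptions of this sketch.

```python
from typing import Callable

# Hypothetical stand-in for the transformer: next-token probabilities keyed by the last token.
TOY_TABLE: dict[str, dict[str, float]] = {
    "<start>": {"Hello": 0.9, "Hi": 0.1},
    "Hello": {"there": 0.6, "!": 0.4},
    "there": {"!": 0.8, "friend": 0.2},
    "friend": {"!": 1.0},
    "Hi": {"!": 1.0},
    "!": {"<end>": 1.0},
}

def toy_next_token_probs(context: list[str]) -> dict[str, float]:
    return TOY_TABLE[context[-1]]  # a real model would look at the whole context

def greedy_generate(next_token_probs: Callable[[list[str]], dict[str, float]],
                    max_tokens: int = 20) -> list[str]:
    """Append the most probable next token until <end> is chosen or max_tokens is reached."""
    context = ["<start>"]
    for _ in range(max_tokens):
        probs = next_token_probs(context)
        token = max(probs, key=probs.get)  # greedy choice
        if token == "<end>":
            break
        context.append(token)  # the new token becomes part of the next step's input
    return context[1:]  # drop the <start> marker

print(" ".join(greedy_generate(toy_next_token_probs)))
```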

Attention Mechanisms and Transformers

Attention mechanisms are the core building block of the transformer architecture that ChatGPT uses, rather than a separate component. Attention lets the model focus on different parts of the input sequence when computing each output: it compares a query vector for the current position with key vectors for the other positions and uses the resulting weights to mix the corresponding value vectors. Because every position can attend directly to every earlier position, the transformer captures long-range dependencies that older recurrent architectures struggled with. These properties underpin ChatGPT's ability to understand language and keep track of context.
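The attention mechanism is usually implemented as scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V, introduced with the original transformer. Below is a minimal pure-Python sketch of its causal form, in which each position may attend only to itself and earlier positions, as in GPT-style models. For brevity it uses a single attention head and passes the input vectors directly as queries, keys, and values; a real transformer first applies learned projection matrices and runs many heads in parallel. The example vectors are hypothetical.

```python
import math

def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(Q: list[list[float]], K: list[list[float]],
                          V: list[list[float]]) -> list[list[float]]:
    """Single-head scaled dot-product attention with a causal mask.

    Position i attends only to positions 0..i; returns one output vector per position.
    """
    d_k = len(K[0])
    outputs = []
    for i, q in enumerate(Q):
        # Similarity of query i with keys 0..i, scaled by sqrt(d_k).
        scores = [sum(qa * ka for qa, ka in zip(q, K[j])) / math.sqrt(d_k) for j in range(i + 1)]
        weights = softmax(scores)  # attention weights, summing to 1
        # Weighted average of the value vectors this position is allowed to see.
        outputs.append([sum(w * V[j][c] for j, w in enumerate(weights)) for c in range(len(V[0]))])
    return outputs

# Hypothetical 2-dimensional token vectors, used directly as Q, K and V.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in causal_self_attention(X, X, X):
    print([round(v, 3) for v in row])
```

The first output is exactly the first value vector, because position 0 can attend only to itself. The causal mask also means that changing a later token never alters the outputs for earlier positions, which is what allows text to be generated left to right.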

Handling Ambiguity and Uncertainty

Handling ambiguity and uncertainty is a crucial aspect of ChatGPT's functionality. Using the context captured by its attention mechanisms, ChatGPT can often work out the most plausible reading of an ambiguous query and give a meaningful response. Its uncertainty, however, is not reliably reflected in its answers. The model generates fluent, confident-sounding text whether or not the content is correct, and it sometimes produces plausible-sounding but incorrect or nonsensical answers. Fine-tuning for specific use cases and continued improvements to training data can reduce such errors, but important outputs should still be verified. Despite these limitations, ChatGPT remains a powerful tool that can assist users with a wide range of tasks.
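At each step the model's output is a probability distribution over possible next tokens, and how spread out that distribution is offers one rough signal of uncertainty. The sketch below computes the entropy of a softmax distribution and shows how a temperature parameter sharpens or flattens it before sampling. The candidate tokens and logits are made up, and the function names are hypothetical. A low-entropy (confident) distribution is no guarantee that the chosen token is correct, which is the limitation described above.

```python
import math
import random

def softmax_with_temperature(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Divide logits by the temperature, then apply a numerically stable softmax."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(probs: list[float]) -> float:
    """Shannon entropy in nats; larger values mean a more spread-out distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def sample_token(tokens: list[str], logits: list[float], temperature: float,
                 rng: random.Random) -> str:
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

# Hypothetical candidate tokens and model scores for a single generation step.
tokens = ["Paris", "Lyon", "Rome"]
logits = [3.0, 1.0, 0.5]
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs], round(entropy(probs), 3))
print(sample_token(tokens, logits, temperature=1.0, rng=random.Random(0)))
```

Lower temperatures concentrate probability on the top-scoring token and make output more deterministic; higher temperatures flatten the distribution and make output more varied.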

Ethical Considerations and Challenges

Bias and Fairness

Bias and fairness are important considerations in the development of AI-powered assistants like ChatGPT. Because the model learns from human-written text, it can reproduce stereotypes or treat groups of people differently, so developers aim to keep it from favoring any particular group or behaving in a discriminatory way. Guidelines given to the human trainers during fine-tuning are one way to reduce bias, and ongoing monitoring and evaluation are needed to detect and address biases that remain or emerge. Building trust with users also depends on fairness, inclusivity, and transparency about how the system is developed and what its limitations are.
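One simple form of the monitoring mentioned above is to compare how often a system produces some outcome across groups. The sketch computes per-group rates and the largest gap between them, a demographic-parity-style metric. The records are hypothetical: each pairs a group label (for instance, the demographic term used in otherwise identical test prompts) with whether the response had the property being audited, such as a refusal. This is only one of many fairness metrics, and a small gap does not on its own show that a system is unbiased.

```python
from collections import defaultdict

def positive_rates(records: list[tuple[str, bool]]) -> dict[str, float]:
    """Fraction of records whose outcome is True, computed separately for each group."""
    counts: dict[str, list[int]] = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for group, outcome in records:
        counts[group][0] += int(outcome)
        counts[group][1] += 1
    return {group: pos / total for group, (pos, total) in counts.items()}

def parity_gap(records: list[tuple[str, bool]]) -> float:
    """Largest difference in positive rate between any two groups."""
    rates = positive_rates(records)
    return max(rates.values()) - min(rates.values())

# Hypothetical audit: was an otherwise identical prompt refused (True) or answered (False)?
records = [("group_a", False), ("group_a", False), ("group_a", True), ("group_a", False),
           ("group_b", True), ("group_b", False), ("group_b", True), ("group_b", False)]
print(positive_rates(records))
print(parity_gap(records))
```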

Privacy and Data Security

When it comes to privacy and data security, users should understand how their conversations are handled. OpenAI stores ChatGPT conversations on its servers, and depending on the user's settings and OpenAI's policies, they may be reviewed or used to improve future models. Connections to the service are encrypted in transit, and measures such as access controls, authentication, and regular security assessments are standard ways for a provider to prevent unauthorized access to stored data. The exact practices are set out in OpenAI's privacy policy and may change over time, so users should avoid sharing sensitive personal information in conversations.
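A common technique for protecting stored data, independent of any particular provider, is pseudonymization: replacing user identifiers with a keyed hash so that logs can still be grouped per user without storing the raw identifier. The sketch below uses HMAC-SHA256 from Python's standard library. It is a generic illustration, not a description of how OpenAI handles user data; the function name and identifiers are hypothetical, and in practice the secret key would be kept in a dedicated secrets store rather than generated inline.

```python
import hashlib
import hmac
import secrets

def pseudonymize(user_id: str, key: bytes) -> str:
    """Return a stable, keyed pseudonym for a user identifier (HMAC-SHA256, hex-encoded)."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()

# Generated here only for the demo; a real key would come from a secrets manager.
key = secrets.token_bytes(32)
a1 = pseudonymize("user-1234", key)
a2 = pseudonymize("user-1234", key)
b = pseudonymize("user-5678", key)
print(a1 == a2, a1 != b)  # the same user always maps to the same pseudonym
```

A keyed hash is used instead of a plain SHA-256 digest so that someone without the key cannot recover identifiers simply by hashing likely guesses.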

Mitigating Harmful Behaviors

One of the key challenges in developing AI-powered assistants like ChatGPT is mitigating harmful behaviors. Because these assistants are available to anyone, they can be misused, for example to generate harmful content, or manipulated with prompts designed to bypass their safeguards. To address this, developers combine training the model to refuse inappropriate requests with content filtering and moderation systems that screen inputs and outputs for harmful content. OpenAI, for instance, has said it uses its Moderation API to warn about or block certain types of unsafe content in ChatGPT. Ongoing research focuses on improving how AI models recognize and respond to such inputs in an ethical and responsible manner. By prioritizing user safety and well-being, the goal is to create AI assistants that are helpful and beneficial without causing harm.
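The general shape of such a filter is a classifier that scores text in several harm categories, with per-category thresholds deciding whether to allow it. In the sketch below, the categories, thresholds, and keyword-based scoring function are all hypothetical stand-ins; a real moderation system, such as the one behind OpenAI's Moderation API, uses trained classifiers rather than keyword lists.

```python
# Hypothetical per-category thresholds on a 0-1 score.
THRESHOLDS = {"violence": 0.5, "harassment": 0.5}

# Crude keyword lists standing in for a trained classifier (illustration only).
TOY_KEYWORDS = {"violence": {"attack", "kill"}, "harassment": {"idiot", "loser"}}

def toy_scores(text: str) -> dict[str, float]:
    """Score 1.0 in a category if any of its keywords appears as a whole word, else 0.0."""
    words = set(text.lower().split())  # note: punctuation is not stripped
    return {cat: (1.0 if words & kws else 0.0) for cat, kws in TOY_KEYWORDS.items()}

def moderate(text: str, score_fn=toy_scores, thresholds=THRESHOLDS) -> dict:
    """Flag every category whose score reaches its threshold; allow the text only if none do."""
    scores = score_fn(text)
    flagged = [cat for cat, score in scores.items() if score >= thresholds.get(cat, 1.0)]
    return {"allowed": not flagged, "flagged_categories": flagged}

print(moderate("Have a nice day"))
print(moderate("You are an idiot"))
```

Because `score_fn` is a parameter, the keyword stand-in can be swapped for a real classifier without changing the thresholding logic.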