Overview

Introduction to AI and trust

Artificial intelligence (AI) has become part of everyday life, transforming industries and changing how we interact with technology. As AI advances, however, concerns about trust and transparency have emerged. To realize the potential of AI, it is crucial to establish trust between humans and AI systems. This article explores how transparent AI builds that trust, focusing on the role of ChatGPT in AI-powered conversations.

Importance of transparency in AI

Transparency in AI is of utmost importance as it builds trust between users and AI systems. When AI algorithms and decision-making processes are transparent, users can understand how and why certain outcomes are generated. This helps in ensuring fairness, accountability, and ethical use of AI technologies. Transparent AI also enables users to identify any biases or errors in the system and take necessary steps to rectify them. By promoting transparency in AI, we can foster a culture of trust and collaboration between humans and AI systems.

Overview of ChatGPT

ChatGPT is a conversational AI model developed by OpenAI. It is designed to generate human-like text responses, with the goal of providing an engaging and realistic chat experience. It does this by learning patterns from a large dataset of human-generated text, including conversations, and using them to produce coherent, contextually relevant responses. The model has been trained to respond to a wide range of topics and queries, making it a versatile tool for applications such as customer support, content generation, and virtual assistants. By providing visibility into its limitations and potential biases, ChatGPT aims to build trust and support responsible and ethical use of the technology.

Building Trust in AI

Ethical considerations in AI development

Ethical considerations play a crucial role in the development of AI systems. As the technology advances, it is important to ensure that AI is developed and deployed ethically and responsibly. Transparency is a key part of building trust in AI, because it allows users to understand how a system works and to make informed decisions. In the context of ChatGPT, transparency is especially important for addressing concerns about bias, privacy, and accountability. Being transparent about the data, algorithms, and decision-making processes behind ChatGPT helps mitigate potential ethical risks and promotes trust among users and stakeholders.

Addressing bias and fairness

Addressing bias and fairness is a crucial aspect of building trust in AI systems like ChatGPT. Bias can enter AI models through their training data, which may reflect societal biases and prejudices. To mitigate it, OpenAI describes a two-step process. First, ChatGPT is trained on a large and diverse dataset, which helps reduce the impact of any specific bias. Second, OpenAI fine-tunes the model on a narrower dataset that is carefully generated with human reviewers who follow guidelines provided by OpenAI. These guidelines explicitly state that reviewers should not favor any political group and should avoid taking positions on controversial topics. OpenAI also maintains an ongoing relationship with reviewers through weekly meetings to address questions, provide clarifications, and iterate on the model’s guidelines. By taking these steps, OpenAI aims to make ChatGPT as unbiased and fair as possible, fostering trust and confidence in its AI system.

Ensuring accountability and explainability

Accountability and explainability are crucial aspects of building trust in AI systems. With ChatGPT, OpenAI has implemented several measures to address these concerns. First, the model’s training data includes demonstrations of correct behavior, and the model is fine-tuned using reinforcement learning from human feedback. This helps reduce biases and makes the system’s responses more accurate and reliable. Second, OpenAI has developed a moderation system to prevent inappropriate or harmful content from being generated, which helps maintain a safe and respectful environment for users. Lastly, OpenAI is committed to soliciting public input on system behavior, deployment policies, and disclosure mechanisms. By involving the wider community, OpenAI aims to ensure that the AI system is aligned with societal values and meets the expectations of its users. Through these efforts, OpenAI strives to build trust in AI by promoting transparency, accountability, and explainability.
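To make the moderation step concrete, here is a minimal sketch of a gate that checks a candidate response before it reaches the user. It is not OpenAI's moderation system: the names `BLOCKED_TERMS`, `moderate`, and `respond` are hypothetical, and the keyword blocklist is an assumption that stands in for the trained classifier a production system would more plausibly use.

```python
from dataclasses import dataclass

# Hypothetical placeholder terms; a real system would rely on a trained
# classifier rather than a fixed keyword list.
BLOCKED_TERMS = {"example-slur", "example-threat"}


@dataclass
class ModerationResult:
    allowed: bool
    matched_terms: list


def moderate(text: str) -> ModerationResult:
    """Flag text that contains any blocked term (case-insensitive)."""
    # Normalize each word: strip common trailing punctuation and lowercase it.
    words = {w.strip(".,!?;:").lower() for w in text.split()}
    matched = sorted(words & BLOCKED_TERMS)
    return ModerationResult(allowed=not matched, matched_terms=matched)


def respond(candidate: str) -> str:
    """Return the candidate response only if it passes moderation."""
    if moderate(candidate).allowed:
        return candidate
    return "Sorry, I can't help with that."
```

Returning the matched terms alongside the verdict keeps the decision auditable, which is the accountability property the paragraph above describes.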

Transparency in AI

Openness about data sources and collection

Openness about data sources and collection is crucial in building trust in AI systems. In the case of ChatGPT, transparency is key to ensuring users understand how the model is trained and the data it has been exposed to. By openly sharing information about the sources of data and the methods used for data collection, ChatGPT aims to provide users with a clear understanding of the limitations and potential biases of the system. This transparency allows users to make informed decisions about their interactions with ChatGPT and promotes accountability in the development and deployment of AI technology.

Disclosing model limitations and potential biases

To build trust in AI systems, it is crucial to disclose the limitations and potential biases of the models used. Openly acknowledging these shortcomings supports transparency and accountability in the decision-making process. It gives users a better understanding of the AI system’s behavior and lets them make informed decisions based on the information provided. Disclosure also encourages continuous improvement and refinement of AI models, leading to more reliable and less biased outcomes. For ChatGPT, disclosing model limitations and potential biases is a priority, intended to foster trust and promote responsible use of AI technology.

Providing clear explanations for AI decisions

To build trust in AI systems, it is essential to provide clear explanations for AI decisions. Transparency ensures that users understand the reasoning behind the decisions made by AI algorithms. Clear and concise explanations give users a better understanding of how AI systems work and of the factors that contribute to their decisions. This helps alleviate concerns about bias, fairness, and accountability in AI. Clear explanations also enable users to identify and address potential errors or biases in the AI system, leading to continuous improvement. Overall, providing clear explanations for AI decisions is a fundamental step in building trust and fostering positive user experiences with AI technology.
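As an illustration of what an explanation for a decision can look like, the sketch below breaks a simple linear score into per-feature contributions. This is an assumption-laden toy: the function `explain_linear` and the feature names are hypothetical, and the technique applies to linear scoring models, not to large language models such as ChatGPT, whose outputs cannot be decomposed this directly.

```python
def explain_linear(weights, features, bias=0.0):
    """Return a linear model's score and each feature's contribution to it.

    Each contribution is weight * value, so the contributions plus the bias
    add up exactly to the score.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    # Rank features by how strongly they moved the score in either direction.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical example: a toy credit-style score with two features.
score, ranked = explain_linear(
    weights={"income": 0.5, "debt": -1.0},
    features={"income": 4.0, "debt": 1.0},
    bias=0.5,
)
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
print(f"score: {score:.2f}")
```

Presenting the ranked contributions next to the final score lets a user see which factors drove the outcome and in which direction.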

Introducing ChatGPT

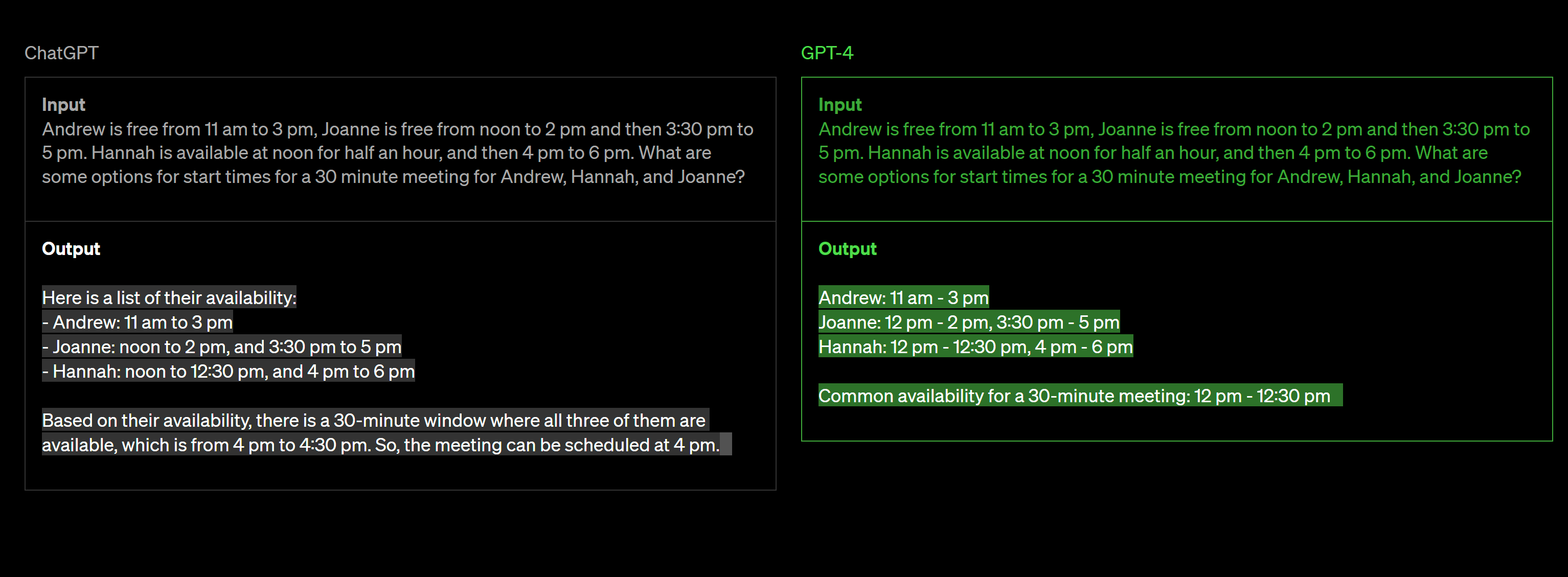

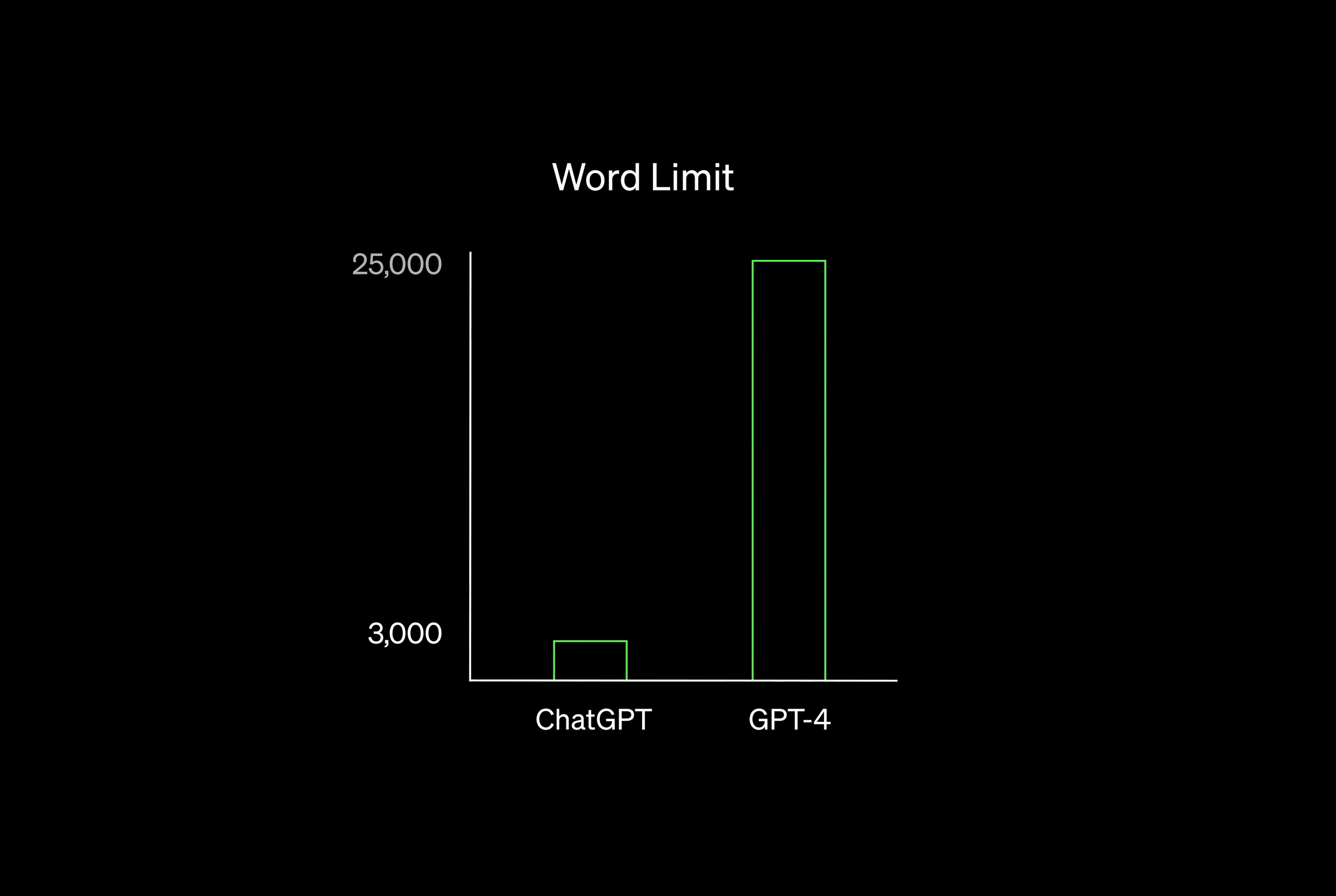

Overview of ChatGPT’s capabilities

ChatGPT, developed by OpenAI, is designed to facilitate interactive and dynamic conversations with users. The model has been trained on a vast amount of data from the internet, enabling it to generate human-like responses and take context into account. ChatGPT’s capabilities include answering questions, providing recommendations, engaging in small talk, and assisting with tasks. With its ability to respond to a wide range of queries, ChatGPT aims to build trust through transparent and reliable AI interactions.

Training process and data used

The training process and data used in building ChatGPT are crucial for ensuring transparency and trust in its AI capabilities. To train ChatGPT, a large dataset of diverse conversations was used, covering a wide range of topics and scenarios. The data was carefully curated and anonymized to protect user privacy while maintaining the quality and relevance of the training material. The training process involved multiple iterations of fine-tuning and optimization, with the goal of improving the model’s performance and accuracy. By providing insights into the training process and the data used, ChatGPT aims to foster transparency and build trust with its users.

Benefits and limitations of ChatGPT

ChatGPT offers several benefits in terms of enhancing communication and user experience. Firstly, it provides users with a conversational AI system that can generate responses in a human-like manner, making interactions more engaging and natural. Additionally, ChatGPT can assist users in various tasks, such as answering questions, providing recommendations, and offering guidance. This versatility makes it a valuable tool in different domains, including customer support, education, and entertainment. However, it is important to acknowledge the limitations of ChatGPT. Due to its reliance on pre-existing data, it may sometimes provide inaccurate or biased information. Moreover, ChatGPT may occasionally generate responses that are inappropriate or offensive. These limitations highlight the need for continuous improvement and monitoring to ensure the responsible and ethical use of ChatGPT.

Building Trust with ChatGPT

Ensuring user privacy and data protection

To ensure user privacy and data protection, ChatGPT implements several measures. First, all user interactions with the AI model are encrypted and stored securely, so that sensitive data shared during conversations remains confidential and protected from unauthorized access. Second, ChatGPT adheres to strict data retention policies, deleting user interactions after a certain period of time. This further minimizes the risk of data breaches and unauthorized use of personal information. Finally, OpenAI is committed to transparency and accountability in handling user data. It provides clear guidelines and policies on how user data is collected, used, and shared. By prioritizing user privacy and data protection, ChatGPT aims to build trust and confidence in its AI technology.
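A retention policy of the kind described above can be sketched as a periodic purge. This is only an illustration under stated assumptions: the 30-day window, the `purge_expired` function, and the record layout (a dict with a timezone-aware `created_at` field) are all hypothetical, since the article does not specify the actual retention period or storage format.

```python
from datetime import datetime, timedelta, timezone

# Assumed retention window; the real period is not stated in this article.
RETENTION = timedelta(days=30)


def purge_expired(records, now=None, retention=RETENTION):
    """Return only the records that are still within the retention window.

    Each record is assumed to be a dict with a timezone-aware 'created_at'.
    """
    now = now or datetime.now(timezone.utc)
    cutoff = now - retention
    return [r for r in records if r["created_at"] >= cutoff]


# Usage with a fixed clock so the result is reproducible.
now = datetime(2024, 1, 31, tzinfo=timezone.utc)
records = [
    {"id": "a", "created_at": now - timedelta(days=40)},  # past the window
    {"id": "b", "created_at": now - timedelta(days=5)},   # still retained
]
kept = purge_expired(records, now=now)
```

Passing the current time as a parameter keeps the rule easy to test and to audit, which matters when a policy is meant to be verifiable.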

Implementing user feedback and iterative improvements

Implementing user feedback and iterative improvements is crucial in building trust through transparent AI. By actively seeking and incorporating user feedback, developers can address any biases or limitations in the AI system. This iterative process allows for continuous improvement and ensures that the AI system evolves to meet the needs and expectations of users. Additionally, transparency in the development process, such as sharing information about the data used and the decision-making algorithms, helps to build trust and confidence in the AI system. By actively involving users in the development and improvement process, AI systems can become more reliable, accurate, and trustworthy.

Addressing concerns of misinformation and harmful content

To address concerns about misinformation and harmful content, ChatGPT has implemented several measures. First, the model has been trained on a diverse range of data sources to give it broad coverage of topics. Second, OpenAI has put in place a content moderation system that actively filters out inappropriate or harmful responses. Third, users can flag problematic responses, which helps improve the system over time. OpenAI is committed to transparency and actively seeks feedback from users to make necessary updates and enhancements to the model. By taking these proactive steps, ChatGPT aims to build trust by mitigating the risks associated with misinformation and harmful content.
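The flagging mechanism mentioned above can be pictured as a simple feedback log that is aggregated for review. The sketch below is hypothetical throughout: `FlagLog`, its methods, the reason labels, and the review threshold are assumptions for illustration, not a description of how ChatGPT stores or processes user flags.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class FlagLog:
    """Collects user flags as (response_id, reason) pairs."""

    flags: list = field(default_factory=list)

    def flag(self, response_id: str, reason: str) -> None:
        self.flags.append((response_id, reason))

    def counts_by_reason(self) -> Counter:
        """How often each reason was reported, across all responses."""
        return Counter(reason for _, reason in self.flags)

    def needs_review(self, threshold: int = 2) -> list:
        """Response ids flagged at least `threshold` times, sorted by id."""
        per_response = Counter(rid for rid, _ in self.flags)
        return sorted(rid for rid, n in per_response.items() if n >= threshold)


log = FlagLog()
log.flag("resp-1", "misinformation")
log.flag("resp-1", "harmful")
log.flag("resp-2", "misinformation")
```

Aggregating by reason shows which kinds of problems are most common, while the per-response threshold decides which outputs are escalated for human review.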

Conclusion

Summary of the importance of trust in AI

Trust is a crucial element in the successful adoption and utilization of AI technologies. As AI becomes more prevalent in our daily lives, it is essential to ensure that these systems are transparent and accountable. Transparent AI fosters trust by providing users with a clear understanding of how decisions are made and the reasoning behind them. This transparency helps to alleviate concerns about bias, privacy, and the potential for unethical use of AI. By prioritizing transparency and accountability, we can build trust in AI and empower individuals to confidently embrace these technologies.

The role of transparency in building trust

Transparency plays a crucial role in building trust in AI systems, especially in the case of ChatGPT. When developers provide visibility into how the AI model works and make the decision-making process understandable, users are more likely to trust the system. When users have a clear understanding of how AI algorithms are trained, they can better evaluate the reliability and accuracy of the system’s outputs. Transparent AI fosters accountability and helps mitigate concerns about bias, privacy, and ethical implications. It gives users confidence in the system’s behavior, ultimately strengthening trust between humans and AI technology.

Future directions for transparent AI development

In order to further advance the development of transparent AI, several future directions can be explored. Firstly, there is a need to enhance the interpretability of AI systems by developing robust and reliable explainability techniques. This would enable users to understand how AI models arrive at their decisions, increasing trust and accountability. Additionally, efforts should be made to improve the fairness and bias mitigation capabilities of AI systems, ensuring that they do not perpetuate existing societal biases. Furthermore, ongoing research and development should focus on addressing the ethical considerations associated with AI, such as privacy concerns and the potential for misuse. By proactively addressing these future directions, we can continue to build trust in AI and harness its full potential for the benefit of society.