Overview

Introduction to ChatGPT

ChatGPT is a state-of-the-art language model developed by OpenAI. It is built on the GPT (Generative Pre-trained Transformer) architecture. The model is first pre-trained on a large corpus of text, much of it from the internet, and then fine-tuned with human feedback. The primary goal of ChatGPT is to generate human-like responses to user prompts, which makes it an effective tool for a variety of natural language processing tasks. Using deep learning, ChatGPT can track the context of a conversation, produce coherent and relevant responses, and write creative text such as stories or poems. This article takes a deep dive into the algorithm behind ChatGPT and explores its inner workings.

Key Components of ChatGPT

ChatGPT combines several technologies that together enable it to generate human-like responses. Its key components are a transformer-based language model, the deep neural network that implements it, and reinforcement learning from human feedback. The transformer architecture lets ChatGPT process context and generate text. Because the transformer is a deep neural network, with many stacked layers whose parameters are learned from large amounts of text, it can absorb broad knowledge of language during training. Reinforcement learning then adjusts the model, also during training, so that its responses better match human preferences; the model does not update itself by trial and error while it chats with users. Together, these components produce a chatbot that can hold natural and meaningful conversations.

How ChatGPT Works

ChatGPT is built on a neural network architecture called the Transformer. Given the conversation so far, the model predicts a probability distribution over the next token (a word or part of a word), chooses a token from that distribution, appends it, and repeats until the response is complete. The Transformer consists of multiple layers of self-attention and feed-forward neural networks, which let it capture long-range dependencies and context. After pre-training, ChatGPT is fine-tuned using Reinforcement Learning from Human Feedback (RLHF). The RLHF process involves collecting comparison data in which human reviewers rank multiple model responses by quality. A reward model is trained on these rankings, and ChatGPT is then fine-tuned to produce responses that the reward model scores highly, with a penalty that discourages it from drifting too far from the model it started from. This approach helps ChatGPT generate coherent and contextually relevant responses.
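The token-by-token generation loop can be sketched as follows. This is a minimal illustration, not ChatGPT's actual decoder: a hypothetical score table, `TOY_LOGITS`, stands in for the Transformer, which in reality computes scores from the entire context rather than from the previous token alone. The sketch shows two common ways of picking the next token, greedy selection and temperature sampling; which settings ChatGPT uses is not described here.

```python
import math
import random

# Hypothetical stand-in for the Transformer: raw next-token scores (logits)
# keyed only by the previous token. A real model conditions on the whole context.
TOY_LOGITS = {
    "<start>": {"hello": 2.0, "hi": 1.0, "<end>": -1.0},
    "hello": {"there": 1.5, "world": 1.0, "<end>": 0.0},
    "hi": {"there": 1.0, "<end>": 0.5},
    "there": {"<end>": 2.0, "friend": 0.5},
    "world": {"<end>": 2.0},
    "friend": {"<end>": 2.0},
}

def softmax(logits: dict[str, float], temperature: float = 1.0) -> dict[str, float]:
    """Turn raw scores into a probability distribution over next tokens."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def generate(max_tokens=10, temperature=1.0, greedy=False, seed=None):
    """Repeatedly predict a distribution, pick a token, and append it to the context."""
    rng = random.Random(seed)
    context = ["<start>"]
    for _ in range(max_tokens):
        probs = softmax(TOY_LOGITS[context[-1]], temperature)
        if greedy:
            next_token = max(probs, key=probs.get)  # most probable token
        else:
            tokens = list(probs)
            next_token = rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]
        if next_token == "<end>":
            break
        context.append(next_token)
    return context[1:]

print(generate(greedy=True))  # → ['hello', 'there']
print(generate(seed=0))
```

Greedy decoding always returns the same response; sampling, especially at higher temperatures, trades some predictability for variety.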

Training Data

Data Collection

Data collection is a crucial step in training ChatGPT. OpenAI gathers training data in two stages. First, it collects a large dataset of text, much of it from the internet, which is used to pre-train the base model. This dataset covers a wide range of topics and writing styles so that the model generalizes well. Second, human AI trainers produce data for fine-tuning: they write demonstration conversations, and they rank several alternative model outputs for the same input from best to worst. These trainers follow guidelines provided by OpenAI to keep the data consistent and of high quality. The demonstrations are used for supervised fine-tuning; OpenAI has said this new dialogue data was mixed with the dataset from its earlier InstructGPT model, converted to a dialogue format. The rankings are used to train a reward model that scores responses, and the model is then fine-tuned against that reward model using Proximal Policy Optimization (PPO). This combination of a learned reward model and reinforcement learning is called Reinforcement Learning from Human Feedback (RLHF).
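The two learning signals in RLHF can be sketched per example in plain Python. The reward model is trained with a pairwise ranking loss, `-log(sigmoid(r_preferred - r_rejected))`, the form described in OpenAI's InstructGPT paper. During PPO, the reward is the reward-model score minus a penalty for drifting from the supervised model, and PPO's clipped objective limits how far one update can move the policy. This is an illustration of the techniques, not OpenAI's training code; the function names and the `beta` and `epsilon` values are assumptions chosen for the example.

```python
import math

def reward_model_loss(r_preferred: float, r_rejected: float) -> float:
    """Pairwise ranking loss -log(sigmoid(r_preferred - r_rejected)).

    Small when the reward model scores the human-preferred response above
    the rejected one, large when it gets the order wrong.
    """
    x = -(r_preferred - r_rejected)
    # -log(sigmoid(m)) = log(1 + exp(-m)), computed without overflow
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def shaped_reward(rm_score: float, logprob_policy: float, logprob_sft: float,
                  beta: float = 0.02) -> float:
    """Reward-model score minus a KL-style penalty (beta is an illustrative value).

    The log-probabilities are for the same sampled response under the policy
    being trained and under the frozen supervised fine-tuned (SFT) model.
    """
    return rm_score - beta * (logprob_policy - logprob_sft)

def ppo_clipped_objective(ratio: float, advantage: float, epsilon: float = 0.2) -> float:
    """PPO's clipped surrogate objective for one action (to be maximized).

    ratio = pi_new(action) / pi_old(action). Clipping the ratio to
    [1 - epsilon, 1 + epsilon] removes the incentive to push the policy far
    from the one that generated the data within a single update.
    """
    clipped_ratio = min(max(ratio, 1.0 - epsilon), 1.0 + epsilon)
    return min(ratio * advantage, clipped_ratio * advantage)

# Ranking the preferred response higher gives a lower loss.
print(reward_model_loss(2.0, 0.0) < reward_model_loss(0.0, 2.0))  # → True
print(ppo_clipped_objective(1.5, advantage=1.0))
print(shaped_reward(1.0, logprob_policy=-1.0, logprob_sft=-2.0, beta=0.1))
```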

Preprocessing

Before generating responses, ChatGPT preprocesses the input conversation to prepare it for the model. This involves several steps:

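- Tokenization: The conversation is split into tokens. ChatGPT's tokenizer uses byte-pair encoding, so common words are usually single tokens, while rarer words are split into several subword pieces; punctuation and special characters also become tokens.

- Special Tokens: ChatGPT adds special tokens that mark the structure of the conversation, such as where the system message, each user message, and each assistant reply begin and end.

- Encoding: Each token is mapped to an integer ID from the tokenizer's vocabulary. Inside the model, each ID is looked up in an embedding table to obtain the vector that the Transformer layers operate on.

- Context Window: The model can attend to only a fixed maximum number of tokens (its context window), so the input includes as much recent conversation history as fits, and older turns may be truncated.

By performing these preprocessing steps, ChatGPT transforms the conversation into a format that the model can process and from which it can generate coherent responses.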
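The preprocessing steps above can be sketched end to end. Everything here is a simplified stand-in: the role markers in `SPECIAL_TOKENS` are hypothetical, the regular expression splits on words and punctuation instead of performing byte-pair encoding, the vocabulary grows on demand instead of being fixed, and `fit_context` simply keeps the most recent token IDs, which is an assumption about how truncation might work rather than a description of ChatGPT's policy.

```python
import re

# Hypothetical role markers; real chat models define their own special tokens.
SPECIAL_TOKENS = ["<|system|>", "<|user|>", "<|assistant|>", "<|end|>"]

# Special tokens are matched first so they stay whole; otherwise a token is a
# run of word characters or a single punctuation mark, and whitespace is dropped.
# (A stand-in for a real byte-pair-encoding tokenizer, which splits into subwords.)
TOKEN_PATTERN = re.compile("|".join(map(re.escape, SPECIAL_TOKENS)) + r"|\w+|[^\w\s]")

def format_conversation(messages: list[tuple[str, str]]) -> str:
    """Wrap each (role, text) turn in role markers, then open the assistant's turn."""
    turns = [f"<|{role}|>{text}<|end|>" for role, text in messages]
    return "".join(turns) + "<|assistant|>"

def tokenize(text: str) -> list[str]:
    return TOKEN_PATTERN.findall(text)

def encode(tokens: list[str], vocab: dict[str, int]) -> list[int]:
    """Map tokens to integer IDs; unseen tokens get new IDs in this toy vocabulary."""
    return [vocab.setdefault(tok, len(vocab)) for tok in tokens]

def fit_context(ids: list[int], window: int) -> list[int]:
    """Keep only the most recent `window` IDs, dropping the oldest history."""
    return ids[-window:] if window > 0 else []

vocab = {tok: i for i, tok in enumerate(SPECIAL_TOKENS)}
conversation = [("system", "You are helpful."), ("user", "Hi, what's a token?")]
tokens = tokenize(format_conversation(conversation))
ids = fit_context(encode(tokens, vocab), window=8)
print(tokens)
print(ids)
```

Dropping the oldest tokens is the simplest policy; a production system might instead always keep the system message and drop whole turns.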

Fine-tuning

After the pre-training phase, the ChatGPT model undergoes a process called fine-tuning. During fine-tuning, the model is trained on a smaller, carefully constructed dataset produced with the help of human reviewers. These reviewers follow guidelines provided by OpenAI to write example responses and to review and rate possible model outputs for a range of example inputs. Fine-tuning improves the model's ability to give safe and useful responses and makes it more likely to follow ethical guidelines and avoid harmful or biased content, although it does not eliminate these problems. Fine-tuning is a crucial step in creating a reliable and trustworthy language model.
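The supervised part of fine-tuning trains the model to assign high probability to the responses reviewers wrote. A minimal sketch of that loss, the average negative log-likelihood of the target tokens, is shown below. The function names are hypothetical, and masking out prompt tokens so that only the response is learned is a common convention assumed here, not a documented detail of ChatGPT's training.

```python
import math

def log_softmax(logits: list[float]) -> list[float]:
    """Log-probabilities from raw scores, using max subtraction for stability."""
    peak = max(logits)
    log_total = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return [x - log_total for x in logits]

def sft_loss(logits_per_position, target_ids, loss_mask):
    """Mean negative log-likelihood of target tokens where loss_mask is True.

    logits_per_position[i] are the model's scores for the i-th target token,
    and target_ids[i] is the token the reviewer actually wrote there.
    Prompt positions carry loss_mask=False, so they do not contribute.
    """
    losses = [
        -log_softmax(logits)[target]
        for logits, target, keep in zip(logits_per_position, target_ids, loss_mask)
        if keep
    ]
    if not losses:
        raise ValueError("loss_mask selects no tokens")
    return sum(losses) / len(losses)

# Toy example with a 4-token vocabulary: one prompt token (masked out) and two
# response tokens. Uniform scores give every token probability 1/4.
uniform = [0.0, 0.0, 0.0, 0.0]
loss = sft_loss([uniform, uniform, uniform], target_ids=[1, 2, 3],
                loss_mask=[False, True, True])
print(round(loss, 4))  # → 1.3863
```

The uniform case gives a loss of log 4, the value for a model that has learned nothing; training lowers the loss by raising the probability of the reviewer-written tokens.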

Architecture

Transformer Model

The Transformer is the neural network architecture at the core of ChatGPT, and since its introduction in 2017 it has transformed natural language processing. Its central mechanism, self-attention, lets each token in a sequence weigh how relevant the other tokens are to it (in ChatGPT, the tokens that come before it), so the model can use the surrounding context to interpret each word and to generate coherent and relevant responses. Because any two positions are connected directly within a single layer, the Transformer handles long-range dependencies well, which suits tasks that involve long or complex passages. Its strong performance across a wide range of language tasks has made it the standard architecture in research and industry for building advanced language models like ChatGPT.
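To make "multiple layers of self-attention and feed-forward networks" concrete, here is a toy stack of decoder blocks in plain Python. It follows the pre-norm residual layout used in GPT-2 (layer normalization at the input of each sub-layer), but with deliberate simplifications: a single attention head, no attention output projection, ReLU instead of GELU, no learned layer-norm parameters, and random weights instead of trained ones. It illustrates the structure only and is not ChatGPT's configuration.

```python
import math
import random

Matrix = list[list[float]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix stored as nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def add(a: Matrix, b: Matrix) -> Matrix:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def layer_norm(x: Matrix, eps: float = 1e-5) -> Matrix:
    """Normalize each token vector to zero mean and unit variance (no learned scale/shift)."""
    out = []
    for row in x:
        mean = sum(row) / len(row)
        var = sum((v - mean) ** 2 for v in row) / len(row)
        out.append([(v - mean) / math.sqrt(var + eps) for v in row])
    return out

def causal_self_attention(x: Matrix, wq: Matrix, wk: Matrix, wv: Matrix) -> Matrix:
    """Single-head self-attention in which position i attends only to positions 0..i."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    scale = math.sqrt(len(q[0]))
    out = []
    for i in range(len(x)):
        scores = [sum(a * b for a, b in zip(q[i], k[j])) / scale for j in range(i + 1)]
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        out.append([sum(w * v[j][c] for j, w in enumerate(weights)) for c in range(len(v[0]))])
    return out

def feed_forward(x: Matrix, w1: Matrix, w2: Matrix) -> Matrix:
    """Position-wise two-layer network (ReLU here; GPT models use GELU)."""
    hidden = [[max(0.0, h) for h in row] for row in matmul(x, w1)]
    return matmul(hidden, w2)

def decoder_block(x: Matrix, params: dict) -> Matrix:
    """Pre-norm residual block: attention sub-layer, then feed-forward sub-layer."""
    x = add(x, causal_self_attention(layer_norm(x), *params["attn"]))
    return add(x, feed_forward(layer_norm(x), *params["ffn"]))

def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

def make_block_params(d_model: int, d_ff: int, rng: random.Random) -> dict:
    return {
        "attn": [random_matrix(d_model, d_model, rng) for _ in range(3)],  # Wq, Wk, Wv
        "ffn": [random_matrix(d_model, d_ff, rng), random_matrix(d_ff, d_model, rng)],
    }

rng = random.Random(0)
d_model, d_ff, n_layers, seq_len = 8, 32, 4, 5
layers = [make_block_params(d_model, d_ff, rng) for _ in range(n_layers)]
x = random_matrix(seq_len, d_model, rng)  # random stand-ins for token embeddings
for params in layers:  # each stacked block refines every token's vector
    x = decoder_block(x, params)
print(len(x), len(x[0]))  # → 5 8
```

Each block keeps the shape of its input, one vector per token, which is what allows blocks to be stacked to any depth.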

Encoder-Decoder Structure

The original Transformer, introduced for machine translation, pairs an encoder, which reads the input text, with a decoder, which generates the output text word by word while attending to the encoder's representations. ChatGPT does not use this split. GPT models are decoder-only: the prompt and the response are treated as a single sequence of tokens and processed by one stack of Transformer layers with masked (causal) self-attention, so each position can attend only to itself and earlier positions. There is no separate encoder, and no single fixed-length context vector of the kind used in earlier recurrent sequence-to-sequence models; instead, every token keeps its own representation, and the model produces the response one token at a time by extending the sequence.
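The decoder-only arrangement can be shown with a causal mask over one combined sequence. The word-level tokens below are made up for illustration; real inputs would be subword token IDs, and the mask would be applied inside every attention layer.

```python
def causal_mask(n: int) -> list[list[bool]]:
    """mask[i][j] is True when position i may attend to position j, i.e. when j <= i."""
    return [[j <= i for j in range(n)] for i in range(n)]

# Toy word-level tokens, for illustration only.
prompt = ["What", "is", "2", "+", "2", "?"]
response = ["It", "is", "4", "."]

# Decoder-only: the prompt and the response form ONE sequence. There is no
# separate encoder input; during generation each new token is appended here.
sequence = prompt + response
mask = causal_mask(len(sequence))

# The position holding "4" can see the whole prompt and the earlier response
# tokens, but nothing after itself.
i = sequence.index("4")
visible = [tok for tok, allowed in zip(sequence, mask[i]) if allowed]
print(visible)  # → ['What', 'is', '2', '+', '2', '?', 'It', 'is', '4']
```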

Attention Mechanism

The attention mechanism is a crucial component of ChatGPT's architecture. It allows the model to focus on different parts of the input sequence while generating the output. At each position, the mechanism compares a query vector for the current token with key vectors for the tokens it can see (in ChatGPT, the current token and those before it) and turns the resulting scores into weights that reflect each token's relevance. These weights are used to compute a weighted sum of value vectors derived from those tokens, giving a context vector that informs the prediction of the next token. By attending to different parts of the sequence, the model can capture long-range dependencies and generate coherent and contextually relevant responses. The attention mechanism is a key factor in ChatGPT's ability to understand and generate human-like text.
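The weighting step can be sketched as scaled dot-product attention for a single query: scores are `q·k / sqrt(d)`, a softmax turns them into weights that sum to 1, and the output is the weighted sum of the value vectors. The 2-dimensional vectors are invented for the example; in the real model, queries, keys, and values are learned projections of token representations, and masking limits the keys to the current and earlier positions.

```python
import math

def softmax(scores: list[float]) -> list[float]:
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query: list[float], keys: list[list[float]], values: list[list[float]]):
    """Scaled dot-product attention for one query vector.

    Returns (weights, output): weights = softmax(q . k / sqrt(d)) over the keys,
    and output is the weighted sum of the value vectors (the context vector).
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    output = [sum(w * v[c] for w, v in zip(weights, values)) for c in range(len(values[0]))]
    return weights, output

# Made-up vectors: the query points the same way as the second key, so the
# second token should receive the largest weight.
keys = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [0.0, 2.0]
weights, output = attention(query, keys, values)
print([round(w, 3) for w in weights])
print([round(o, 3) for o in output])
```

Because the weights are a probability distribution, the output always lies between the value vectors and leans toward those of the most relevant tokens.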

Conclusion

Limitations of ChatGPT

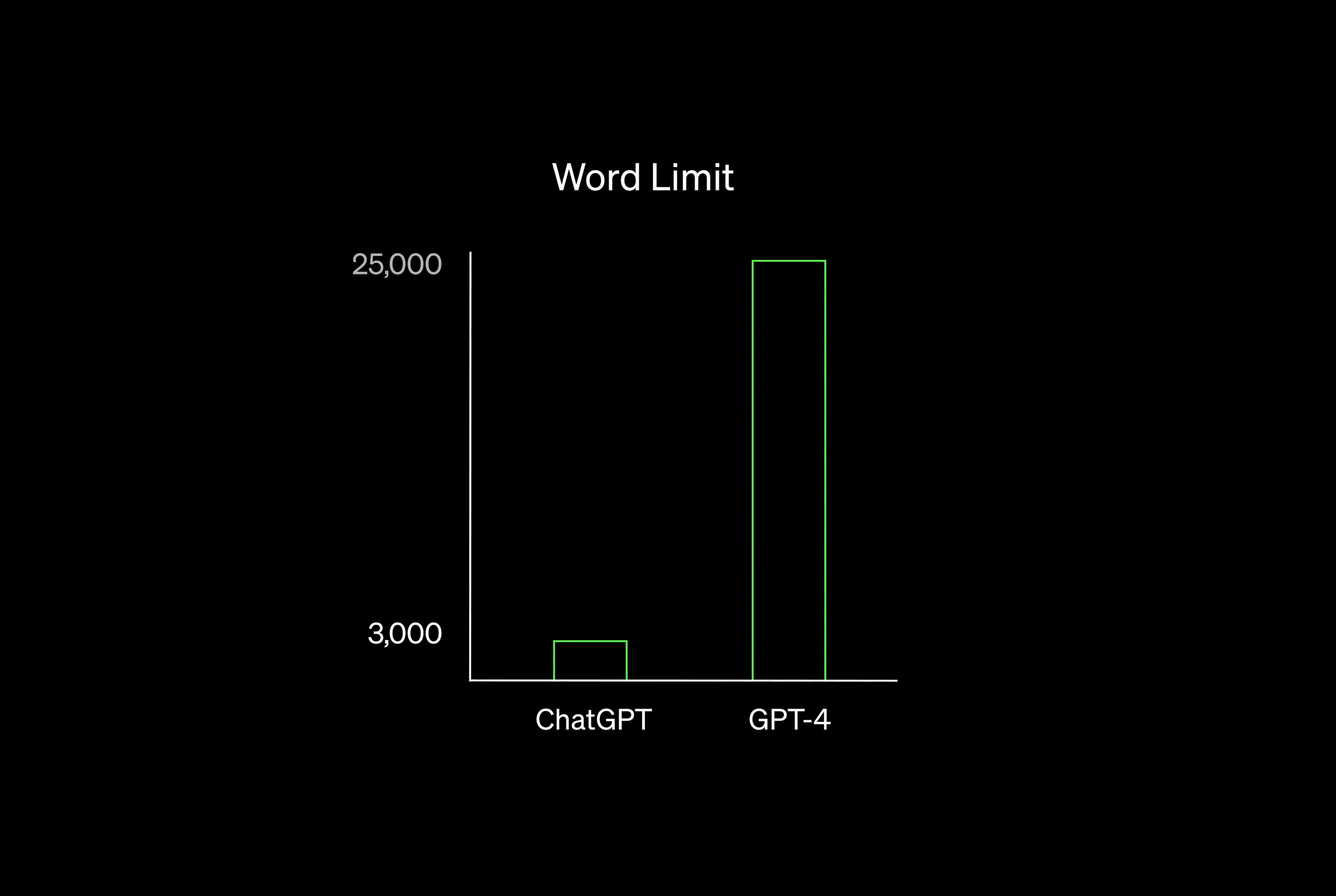

While ChatGPT has shown impressive capabilities in generating text and engaging in interactive conversation, it still has some limitations. One of the main challenges is that it can sometimes produce incorrect or nonsensical responses, especially when faced with ambiguous queries or when asked to provide factual information without sufficient context. Additionally, ChatGPT may exhibit biased behavior or respond to harmful instructions. OpenAI is actively working on addressing these limitations and improving the system’s reliability and safety.

Future Developments

As artificial intelligence continues to advance, work on ChatGPT is continuing in several directions. One area of focus is improving the model's ability to understand and generate more nuanced and contextually appropriate responses. This involves training the model on larger and more diverse datasets, as well as further fine-tuning to improve performance. Efforts are also being made to make ChatGPT more interactive and controllable, allowing users to specify desired attributes or styles in their conversations. Another important direction is reducing biases and ensuring that ethical considerations are taken into account during development. Ongoing research and development continue to extend what AI-powered conversational agents can do.

Impact of ChatGPT

ChatGPT has had a significant impact on several fields, including natural language processing (NLP) and customer support. Its ability to generate coherent and contextually relevant responses has changed how many people and businesses handle conversations online. Companies around the world have adopted the model to provide efficient and personalized assistance to their customers; in customer support, for example, it is used to shorten response times and improve user satisfaction. ChatGPT has also been used in educational settings to provide interactive and engaging learning experiences. In countries such as South Africa, where access to quality education is limited, it has been put forward as a way to help bridge the educational gap by delivering accessible and personalized content to students.