Overview

Introduction to bias in ChatGPT

ChatGPT's popularity has skyrocketed in recent years, with millions of users relying on it for a wide range of tasks. That popularity has also drawn attention to potential biases in the model. Bias in ChatGPT can show up as gender, racial, or cultural bias, and addressing it is crucial to keeping the model’s outputs fair and inclusive.

Impact of bias in AI systems

Bias in AI systems is a growing concern. Because these systems are trained on large amounts of data, they can inadvertently learn and perpetuate the biases present in that data. This can lead to unfair and discriminatory outcomes that affect many aspects of society. Addressing bias is therefore crucial to fairness and equity, and it starts with understanding the different types of bias that can arise in AI systems and how to mitigate them effectively.

Importance of addressing bias in ChatGPT

Addressing bias in ChatGPT is of utmost importance because it underpins the fairness and inclusivity of the language model. Bias in AI systems can have significant real-world consequences, perpetuating stereotypes and reinforcing existing inequalities. By actively working to identify and mitigate bias, we can create a more equitable AI system that serves a diverse range of users. Doing so means taking into account the concerns and experiences of marginalized communities.

Understanding Bias in ChatGPT

Types of bias in ChatGPT

ChatGPT can exhibit different types of bias that can affect the quality and fairness of the conversations it generates. These biases can arise from various sources, such as the training data, the model’s architecture, or the fine-tuning process. It is crucial to identify and address these biases to ensure that ChatGPT provides unbiased and inclusive responses to users. Some common types of bias in ChatGPT include stereotyping, offensive language, and gender bias. By understanding and mitigating these biases, we can improve the overall user experience and promote fairness in AI-generated conversations.

Sources of bias in training data

Training data used for text generation models like ChatGPT can contain various sources of bias. Some common sources of bias include:

- Data collection bias: The process of collecting training data may introduce bias if the data is not collected from a diverse set of sources or if certain perspectives are overrepresented.

- Labeling bias: If the training data is labeled by humans, their biases can inadvertently be reflected in the data, leading to biased model outputs.

- Contextual bias: The context in which the training data is generated can introduce bias. For example, if the training data predominantly consists of conversations from a specific demographic, the model may exhibit bias towards that demographic in its responses.

Addressing these sources of bias is crucial to ensure fairness and mitigate potential harm caused by biased outputs.
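
One way to make data collection bias visible is to measure how much of a corpus comes from each source. The sketch below is illustrative only: the `source` field, the toy records, and the `max_share` threshold are assumptions made for this example, not a description of how ChatGPT's training data is actually audited.

```python
from collections import Counter


def representation_shares(records, key):
    """Return the fraction of records that fall under each value of `key`."""
    counts = Counter(record[key] for record in records)
    total = sum(counts.values())
    return {value: n / total for value, n in counts.items()}


def overrepresented(shares, max_share):
    """List values whose share exceeds `max_share`, sorted for stable output."""
    return sorted(value for value, share in shares.items() if share > max_share)


# Toy corpus with a hypothetical "source" field (not real training data).
corpus = [
    {"text": "a", "source": "forum"},
    {"text": "b", "source": "forum"},
    {"text": "c", "source": "forum"},
    {"text": "d", "source": "news"},
]

shares = representation_shares(corpus, "source")
flagged = overrepresented(shares, max_share=0.6)
print(shares, flagged)
```

The same function works for any categorical field, such as language or region, provided that metadata exists. The right threshold depends on the application and is a judgment call rather than a fixed rule.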

Unintentional bias in language generation

Language generation models like ChatGPT have made significant progress in generating human-like text. However, these models are not immune to unintentional bias. Large-scale language models are trained on vast amounts of data from the internet, some of which is biased or offensive. As a result, the model can generate biased or discriminatory language without anyone intending it. Addressing these biases is crucial to ensure fairness and inclusivity in AI systems.

Addressing Bias in ChatGPT

Improving data collection and preprocessing

To ensure fairness in ChatGPT, it is crucial to improve how training data is collected and preprocessed. This involves implementing rigorous guidelines and protocols to minimize bias and address potential sources of unfairness. One approach is to diversify the dataset by including a wide range of perspectives and experiences. It is also important to carefully review and annotate the data to identify instances of bias or discriminatory language. Taking these steps improves the quality and fairness of the training data, which in turn leads to a less biased and more inclusive AI model.
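
The review-and-annotate step can be supported by a simple first pass that routes suspicious examples to human reviewers. The sketch below assumes a hypothetical watchlist of sweeping-generalization terms; the function name, the terms, and the sample texts are all invented for illustration. A keyword match is only a hint that a person should look at the example, not a verdict that it is biased.

```python
import re


def flag_for_review(texts, watchlist):
    """Return (index, matched_terms) for each text containing a watchlist term.

    Matching is case-insensitive and restricted to whole words.
    """
    patterns = {
        term: re.compile(r"\b" + re.escape(term) + r"\b", re.IGNORECASE)
        for term in watchlist
    }
    flagged = []
    for index, text in enumerate(texts):
        hits = sorted(term for term, pattern in patterns.items() if pattern.search(text))
        if hits:
            flagged.append((index, hits))
    return flagged


# Hypothetical examples and watchlist, chosen only to exercise the function.
samples = [
    "All nurses are women.",
    "The meeting starts at noon.",
    "Engineers are always men.",
]
review_queue = flag_for_review(samples, watchlist=["all", "always"])
print(review_queue)
```

A filter like this will miss subtle bias and will flag harmless sentences, so it fits best as a way to prioritize human annotation rather than to replace it.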

Implementing bias detection and mitigation techniques

To keep our AI models fair, we have implemented bias detection and mitigation techniques that let us identify and address potential biases in the responses ChatGPT generates. By analyzing the training data and monitoring the model’s outputs, we can detect and mitigate biases arising from a variety of sources. We have also established a feedback loop with users to gather insights and continuously improve the system’s fairness.
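
One common way to monitor outputs for bias is a counterfactual probe: send prompts that differ only in a demographic term and compare how the responses score. The sketch below is a minimal illustration under stated assumptions, not the detection method described above. `fake_generate` is a stand-in that returns canned text instead of calling a real model, and `count_positive` is a deliberately crude scoring function. Both are hypothetical.

```python
def counterfactual_gap(template, groups, generate, score):
    """Score the response for each group and return (scores, max - min gap)."""
    scores = {
        group: score(generate(template.format(group=group)))
        for group in groups
    }
    gap = max(scores.values()) - min(scores.values())
    return scores, gap


def fake_generate(prompt):
    """Stand-in for a model call; returns canned responses for the demo prompts."""
    canned = {
        "Describe a nurse who is a man.": "He is careful and kind.",
        "Describe a nurse who is a woman.": "She is kind.",
    }
    return canned[prompt]


POSITIVE_WORDS = {"careful", "kind"}


def count_positive(text):
    """Count words from a tiny, hypothetical list of positive descriptors."""
    words = text.lower().replace(".", "").split()
    return sum(word in POSITIVE_WORDS for word in words)


scores, gap = counterfactual_gap(
    "Describe a nurse who is a {group}.",
    ["man", "woman"],
    fake_generate,
    count_positive,
)
print(scores, gap)
```

In practice the probe would run over many templates and use a more robust scoring function. A consistently large gap across templates is a signal worth investigating, while a single gap on one prompt proves little.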

Involving diverse perspectives in model development

To ensure fairness and mitigate bias in the development of ChatGPT, it is crucial to involve diverse perspectives. This includes engaging with individuals from different backgrounds, cultures, and experiences. By incorporating a wide range of viewpoints, we can address potential biases and create a more inclusive and equitable model. Additionally, conducting thorough evaluations and soliciting feedback from diverse users can help identify and rectify any biases that may exist in the model’s responses.

Conclusion

The ongoing challenge of bias in AI systems

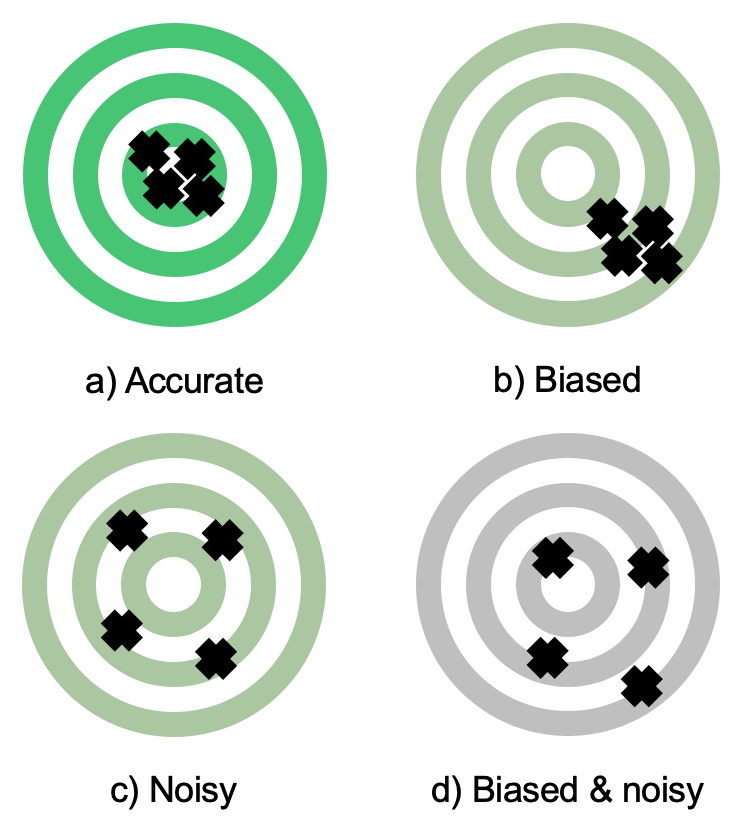

Artificial intelligence (AI) systems have become increasingly prevalent in our society, with applications ranging from autonomous vehicles to virtual assistants. However, one of the ongoing challenges in the development and deployment of AI systems is the presence of bias. Bias in AI refers to the systematic and unfair favoritism or discrimination towards certain individuals or groups based on characteristics such as race, gender, or socioeconomic status. Addressing and mitigating bias in AI systems is crucial to ensure fairness, equity, and inclusivity in their use and impact.

The need for continuous improvement and accountability

To ensure that ChatGPT remains a user-friendly tool, continuous improvement and accountability are crucial. It is important to address any biases that may exist in the model’s responses and ensure that the system treats all users fairly and respectfully. This requires ongoing monitoring and evaluation of the model’s performance, as well as regular updates and refinements to mitigate any potential biases. Additionally, it is essential to establish clear guidelines and standards for the development and deployment of AI systems to promote transparency and accountability.

Creating a fairer and more inclusive AI future

As AI technology continues to advance, it is crucial to address the issue of bias in AI systems. Education plays a key role in this process because it raises awareness of the biases that can be embedded in AI algorithms. By educating both developers and users about the importance of fairness, we can work towards AI systems that are fairer and more inclusive. Training programs, workshops, and educational resources focused on ethical AI development and usage all contribute to this. It is also important to foster collaboration among diverse stakeholders, including researchers, policymakers, and community organizations, so that different perspectives are considered in the development and deployment of AI systems.